antirez (@antirez.bsky.social)

New EN video: Inspecting LLM embeddings in GGUF format with gguflib.c and Redis www.youtube.com/watch?v=ugba...

Reproducible bugs are candies 🍭🍬

7,236 followers 377 following 744 posts

antirez (@antirez.bsky.social)

ADV: my C course on YouTube is free and can be watched without problems by non-Italian speakers, with subtitles or dubbed audio :)

antirez (@antirez.bsky.social) reply parent

LLMs are not just data dumps (with errors): they are powerful data manipulation and transformation devices.

antirez (@antirez.bsky.social) reply parent

This also needs to contain devices designed to last, with simple UIs (keyboards and displays) that can be powered on (solar power maybe?) to ask questions straight away. GPUs may be long gone at some point.

antirez (@antirez.bsky.social)

We should likely put the weights of a large LLM, and an explanation of how to do inference, in some hard-to-degrade material, inside N bunkers in different parts of the world, so that humans are more likely to be able to restart civilization in case of fatal events.

antirez (@antirez.bsky.social) reply parent

Still, I believe there is no better way so far to archive human history for the long term. The web (it is now very clear) loses pieces every year. I really hope that LLM weights will be saved for a long time as an archive: incorrect in many details, but better than nothing.

antirez (@antirez.bsky.social) reply parent

That, however, is in my opinion a *side effect*. I reject the idea that LLMs are mainly just lossy compression of information: if we don't add to this view their ability to combine and process such information, we are providing a strongly insufficient mental model.

antirez (@antirez.bsky.social)

At this stage AI will provide a huge boost to balanced people who don't jump on any extreme hype / anti-hype train, and will create issues for the folks who don't see that judicious application of LLMs is the key. One of those historical moments when wisdom is an advantage.

antirez (@antirez.bsky.social)

Playing with adding LLM embeddings into Redis vector sets, to explore relationships and differences with word embedding models like Word2Vec. Will make a YouTube video and/or blog post.

antirez (@antirez.bsky.social) reply parent

The problem is there; it's the solution that is totally useless. I'm not denying the problem, but it is self-evident when the PR is a mass of AI-generated code. At the same time, AI can help to refine a PR and produce *better* code. Also, contributors usually build trust over time.

antirez (@antirez.bsky.social)

Why "declare AI help in your PR" is useless, courtesy of 3,000-year-old logic:
- If you can't tell without the disclaimer, it's useless: people will not tell.
- If you can tell, it's useless: you can tell.
Just evaluate quality, not process.

antirez (@antirez.bsky.social) reply parent

You haven't been there for some time now. Maybe you can't imagine the level the posts in the timeline have reached: it is TERRIBLE. All full of sexist, racist shit from accounts I don't follow. Also things that keep me awake at night, like footage of accidents and the like. What total trash.

antirez (@antirez.bsky.social) reply parent

It's worse than that: I'm in both communities because, for work reasons, I can't afford to cut off most of the Redis community, but there are also people there who kind of questioned the fact that I encourage switching here. Also, many top accounts (not Musk/Trump supporters) stay there for simplicity.

antirez (@antirez.bsky.social)

When you use coding agents to produce the bulk of the code of an application that will be used for years, also factor in the technical debt you are happily accumulating. When you use LLMs as an aid, you could, on the contrary, improve your coding culture.

antirez (@antirez.bsky.social)

New blog post: AI is different. antirez.com/news/155

antirez (@antirez.bsky.social) reply parent

Expert self-distillation, RL on long context directly vs. incrementally, XML format, exact optimizer parameters: there is quite some meat there.

antirez (@antirez.bsky.social) reply parent

Are you referring to that *exact* technique where, to compute x' = sin(x) * m, you take a lookup table of four shifts and do (m>>s1) + (m>>s2) + (m>>s3) + (m>>s4)? AFAIK I never saw this used in the past, and LLMs are not aware of it. Note that it is not a lookup table for the sin(x) value itself.
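A minimal Python sketch of the shift-table idea, under assumptions that are mine rather than from the post: angles in [0, pi/2] are quantized into 64 table entries, each entry stores four right-shift amounts picked greedily so that 2^-s1 + 2^-s2 + 2^-s3 + 2^-s4 approximates sin(x), and unused slots get a shift large enough to contribute zero. `build_shift_table` and `mul_sin` are hypothetical names; on a Z80 the table would be precomputed and the sign of sin(x) handled separately.

```python
import math

STEPS = 64        # hypothetical: angles in [0, pi/2] quantized to 64 entries
NSHIFTS = 4       # four shifts per entry: (m>>s1) + (m>>s2) + (m>>s3) + (m>>s4)
MAX_SHIFT = 16    # stop searching once 2**-s is below 16-bit resolution
ZERO_SHIFT = 31   # shift that makes the term vanish for any 16-bit m


def build_shift_table():
    """For each angle, greedily pick shifts s so that sum(2**-s) ~= sin(x)."""
    table = []
    for i in range(STEPS):
        x = (math.pi / 2) * (i / (STEPS - 1))
        rest = math.sin(x)
        shifts = []
        for _ in range(NSHIFTS):
            # Smallest s such that 2**-s still fits in what is left of sin(x).
            s = 0
            while s < MAX_SHIFT and 2.0 ** -s > rest:
                s += 1
            if s == MAX_SHIFT:
                shifts.append(ZERO_SHIFT)  # remainder too small to matter
            else:
                shifts.append(s)
                rest -= 2.0 ** -s
        table.append(tuple(shifts))
    return table


def mul_sin(m, idx, table):
    """Approximate m * sin(x) using only shifts and adds (no multiply)."""
    s1, s2, s3, s4 = table[idx]
    return (m >> s1) + (m >> s2) + (m >> s3) + (m >> s4)


table = build_shift_table()
for idx in (0, 21, 42, 63):
    x = (math.pi / 2) * (idx / (STEPS - 1))
    print(idx, table[idx], mul_sin(10000, idx, table), round(10000 * math.sin(x)))
```

Because the greedy choice always takes the largest power of two that still fits, each entry underestimates sin(x) by less than 1/16 (when sin(x) < 1), so the result stays within m/16 plus the truncation of four shifts. A hand-tuned table could well be chosen differently.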

antirez (@antirez.bsky.social) reply parent

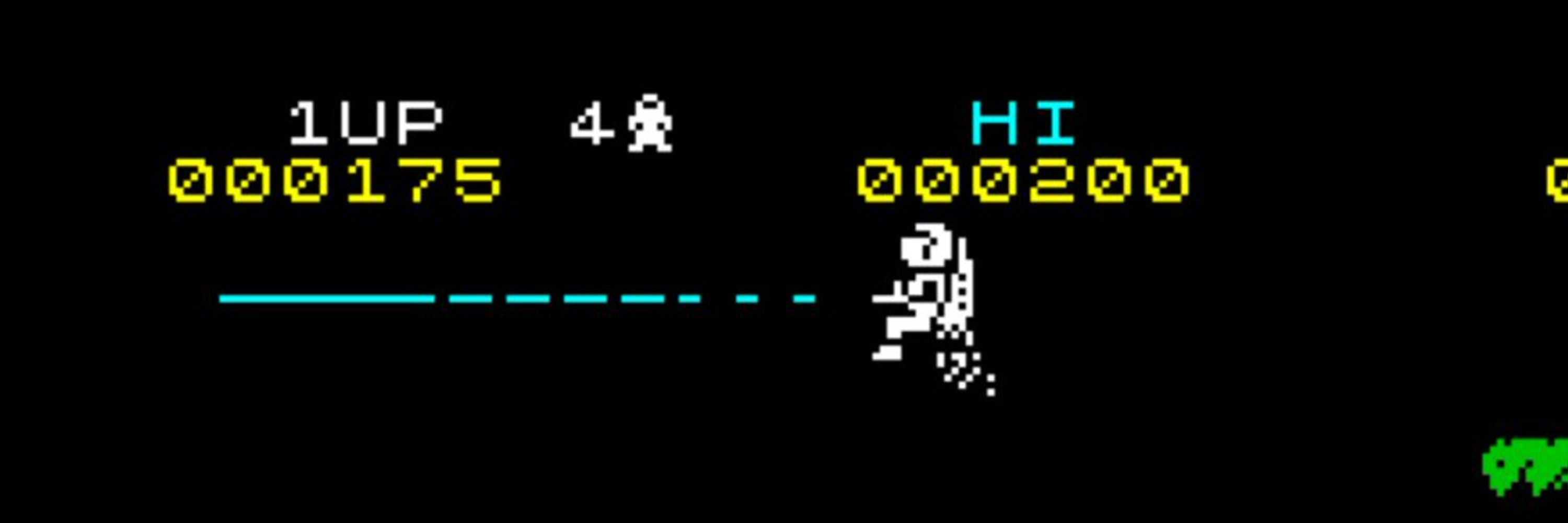

That's the best explanation I got of a technique I developed during Covid for Z80 (Spectrum) 3D programming.

antirez (@antirez.bsky.social)

GPT-5 seems good at code reviews. It's the first model from OpenAI in a *long* time that I'll likely start using constantly for this activity, together with the others.

antirez (@antirez.bsky.social)

LLM evaluation: only trust people with hard problems. Today models perform decently on most trivial tasks: this is a win for the technology, but it also means that evaluation is more and more a realm of specialists.

antirez (@antirez.bsky.social) reply parent

So it looks like Anthropic understood that the money is in SOTA coding models, and is happy to lag a bit behind in other benchmarks / performance. Makes a lot of sense after all, especially if there is tension between fine-tuning for different goals.

antirez (@antirez.bsky.social) reply parent

Some testing: I guess it's just continued training on the Opus 4 checkpoint. Makes sense, they do it often (Sonnet 3.5-2, for instance). So it's probably totally true that it is a bit better. This time the benchmarks are also against Gemini 2.5 PRO, which is interesting (better agentic coding, worse overall).

antirez (@antirez.bsky.social) reply parent

Disappointing results in community evaluations / tests so far. My "smoke testing" results were awful; I'll keep testing. I have a set of private prompts I collected over time, on real coding issues / bugs / tasks.

antirez (@antirez.bsky.social)

Prediction: the OpenAI release of GPT OSS 120/20 will weaken the position of US open-source AI compared to the Chinese movement and its results. And this is happening after the Llama 4 fiasco: multiplicative effects.

antirez (@antirez.bsky.social)

It's a real shame there are no LLM benchmarks on hallucinating creativity, since GPT OSS 120b is wild in what it invents out of thin air, and would score really high on such a benchmark.

antirez (@antirez.bsky.social) reply parent

I believe it's time to build stuff more connected to low-level services. Highly abstracted AWS services are often more of a marketing strategy than a real technological need. So one could go for European companies and, at the same time, build only on what is available as OSS.

Aaron Patterson (@tenderlove.dev) reposted

It is possible to do C programming safely. You just need the right gear

antirez (@antirez.bsky.social) reply parent

Can't help noticing there's no crowbar to exit vim in the most extreme situations.

antirez (@antirez.bsky.social)

This AWS European Sovereign Cloud just does not make much sense from a European POV. Why pay AWS to run a cloud in Europe, running software partly written by Europeans, like Linux, MySQL, Python and many others? If you are from Europe, consider buying services from EU companies.

antirez (@antirez.bsky.social) reply parent

and I speak slowly :)

antirez (@antirez.bsky.social) reply parent

Many folks told me they learned Italian following my channel! Incredible.

antirez (@antirez.bsky.social)

My YouTube channel reached 25k subscribers. 5k watch it from outside Italy, with subtitles / dubbed audio, even though 80% of the videos are in Italian (sooner or later I'll explain the reason for this choice). I'm happy about this: YouTube is becoming a cultural medium.

Marco Cecconi (@sklivvz.bsky.social) reposted

I just posted this on LinkedIn, but @codinghorror.bsky.social has talked about this many times. In 2025, it is still so relevant. In fact, @antirez.bsky.social solved this live on his YouTube channel. The question remains: why can't so many developers write code?

antirez (@antirez.bsky.social) reply parent

New systems are fine if well designed :)

antirez (@antirez.bsky.social) reply parent

6. Companies discover that complexity is a form of vendor lock-in, among other things. People who created a mess get promoted, since IT no longer evaluates design quality as one of the metrics for success. GO TO 1.

antirez (@antirez.bsky.social) reply parent

5. The designers / implementers of the new system lack the passion, design abilities, taste, and love for simplicity that went into the system being replaced. A good part of computing / the Internet is replaced with something worse and more complicated. Again and again.

antirez (@antirez.bsky.social) reply parent

4. The IT company decides to replace this good old system with something new. However, it needs to impose it on the world, to some extent, otherwise it would still be forced to support the old one, or to deal with incompatible systems. A team is created.

antirez (@antirez.bsky.social) reply parent

3. This large IT company realizes that the old system it uses is creating friction for what the company uses it for. Sometimes this is true; often this feeling arises because the company's development process itself is dysfunctional, or its software culture is wrong.

antirez (@antirez.bsky.social) reply parent

2. Some large IT company is capitalizing on such a system in one way or another. Systems evolve, computers and networks get faster, and the way computers are used shifts too: now many of them are phones. Software as a service also changes requirements and dynamics.

antirez (@antirez.bsky.social)

In recent years, applied computer science has seen a phenomenon that repeats itself, slowly ruining everything we have: 1. Many years ago a well-designed system was created: simplicity and orthogonality of ideas are often part of such a system. The system is well understood.

antirez (@antirez.bsky.social) reply parent

But to do so, the quantization format needs to be documented and made part of the API. Which... may not be a bad idea after all.

antirez (@antirez.bsky.social) reply parent

FP32 is totally the way to go... Even if the API looks like "float array as input", I believe a client should use the FP32 format: it's much faster and smaller. However, I would also like to implement the ability to send INT8: 1/4 of the bandwidth.
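To make the bandwidth argument concrete, here is a small Python sketch. It packs a vector as an FP32 blob (4 bytes per component; little-endian byte order is an assumption here), compares it with a decimal-text encoding, and then shows a hypothetical INT8 encoding with one per-vector scale factor: 1 byte per component, 1/4 of FP32. The INT8 layout (max-abs scaling, with the scale sent alongside) is an illustrative assumption, not a documented Redis format.

```python
import math
import struct


def to_fp32_blob(vec):
    """Pack floats as little-endian IEEE 754 single precision: 4 bytes each."""
    return struct.pack("<%df" % len(vec), *vec)


def to_text(vec):
    """Text encoding: one decimal string per component ("float array" style)."""
    return [repr(float(v)) for v in vec]


def quantize_int8(vec):
    """Hypothetical INT8 scheme: scale by max |v| so components fit in [-127, 127]."""
    max_abs = max(abs(v) for v in vec) or 1.0
    scale = max_abs / 127.0
    q = struct.pack("<%db" % len(vec), *(round(v / scale) for v in vec))
    return q, scale  # the scale must be sent along with the bytes


def dequantize_int8(q, scale):
    """Recover approximate floats from the INT8 bytes and the scale factor."""
    return [b * scale for b in struct.unpack("<%db" % len(q), q)]


vec = [math.sin(i) for i in range(1, 9)]  # stand-in for a real embedding
blob = to_fp32_blob(vec)
text_bytes = sum(len(s) for s in to_text(vec))
q, scale = quantize_int8(vec)
print("text:", text_bytes, "fp32:", len(blob), "int8:", len(q))
```

With full-precision floats the text form costs several times more bytes than the FP32 blob and also needs parsing on the receiving side. INT8 then cuts the FP32 size by 4, at the cost of a quantization format both sides must agree on (here, the scale factor has to travel with the bytes).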

antirez (@antirez.bsky.social)

[new YouTube video] Writing Redis VRANGE using AI: how I prepare the context, and how the human in the loop is crucial to obtain good results: www.youtube.com/watch?v=3Z7T...

antirez (@antirez.bsky.social) reply parent

It's a lexicographical iterator: you can get all the elements in ranges.
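A lexicographical range over element names can be sketched with a sorted list and binary search. This is a generic illustration, not how VRANGE is implemented in Redis: the function name `lex_range`, the inclusive bounds and the optional `count` limit are assumptions made for the example.

```python
import bisect


def lex_range(sorted_names, start, end, count=None):
    """Return names n with start <= n <= end, in lexicographic order.

    sorted_names must already be sorted; count optionally caps the result.
    """
    lo = bisect.bisect_left(sorted_names, start)
    hi = bisect.bisect_right(sorted_names, end)
    result = sorted_names[lo:hi]
    return result if count is None else result[:count]


names = sorted(["apple", "banana", "cherry", "date", "fig", "grape"])
print(lex_range(names, "b", "e"))           # ['banana', 'cherry', 'date']
print(lex_range(names, "a", "z", count=2))  # ['apple', 'banana']
```

Python compares `str` values by code point; a C implementation would more likely compare raw bytes, which gives the same order for ASCII names.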

antirez (@antirez.bsky.social)

To implement Redis Vector Sets VRANGE, I used AI, to better show my point from the latest blog post and why vibe coding is *not* the way. Soon I'll release a video on my YouTube channel (in English) showing how Claude and Gemini perform and how the human is the key.

antirez (@antirez.bsky.social) reply parent

I have Gemini Code with a plan and never experienced improvements in reviews / discussions using the agent instead of the plain web chat.

antirez (@antirez.bsky.social) reply parent

Haha, I would say it's invariable: one Redis, many Redis. Otherwise "Redises" sounds kind of OK? Not sure, not being a native speaker. What's your best pick?

antirez (@antirez.bsky.social)

Blog post: Coding with LLMs in the summer of 2025 (an update) antirez.com/news/154

antirez (@antirez.bsky.social)

Just published a new video about Vector Sets: how is VRANDMEMBER implemented? What is its distribution? And how do you extract a random element out of an HNSW graph? www.youtube.com/watch?v=Xreg...

antirez (@antirez.bsky.social)

Weeks ago, for my YouTube C course, I created an intro with some music. People liked it and asked what song it was, so last night I turned it into a full song: youtu.be/HzBqda0Jg3E?...

antirez (@antirez.bsky.social)

I just want to tell everybody that I don't care what price BTC will reach. For me it's 🤡-level speculation and I will never invest in it.

antirez (@antirez.bsky.social) reply parent

Please also make sure to check this: redis.io/docs/latest/...

antirez (@antirez.bsky.social) reply parent

This is a process that requires some experience (disclaimer: tweet-sized support), but the gist of it is: 1. Back up everything. 2. Upgrade replicas one by one. 3. Promote an upgraded replica to primary, and switch clients to the new primary. 4. GOTO 2. 5. Don't enter an infinite loop. Step 2 may depend on the HA solution in place.

antirez (@antirez.bsky.social)

Do you like Daft Punk? I do. Many years before them, in 1979, a few Italian composers created something that later inspired many musicians: the Rondò Veneziano. en.wikipedia.org/wiki/Rond%C3... www.youtube.com/watch?v=Y4Fr...

antirez (@antirez.bsky.social) reply parent

I mean SSL (Self Supervised Learning) not "SUL" :D

antirez (@antirez.bsky.social)

People attribute a lot of weight to Cursor / Windsurf (and its failed deal), but these technologies are worth zero in the big story of AI. The LLM is what matters, or rather: the fact that massive NNs with relatively simple layouts generalize so well with SUL.

antirez (@antirez.bsky.social)

Quite happy with vector sets VRANDMEMBER distribution. HNSWs help a lot, with their structure.

antirez (@antirez.bsky.social)

A repository with two of the very early Redis versions and some email digging to reconstruct the history of the very first days: github.com/antirez/hist...

antirez (@antirez.bsky.social)

If you are interested in using the new Redis Vector Sets, I just uploaded a YouTube video with a first introduction. More will follow, with progressively more detail, down to the implementation level: www.youtube.com/watch?v=kVAp...

antirez (@antirez.bsky.social) reply parent

The feature is the 931st (index 930).

antirez (@antirez.bsky.social)

While preparing the YouTube video series on Redis Vector Sets, I just discovered a curious "issue" in the mxbai-large embedding model: it over-expresses a specific component in every output I tested. This may be some very generic/ubiquitous feature the model needed to represent, based on its training.
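One way to spot a component that is over-expressed across many embeddings is to average the absolute value of each dimension over the outputs and look at the largest. The sketch below is an assumption about how one might check this, not the method used in the post, and the tiny vectors are made-up test data rather than real mxbai-large outputs. Indexes are zero-based, so index 930 is the 931st component.

```python
def dominant_component(vectors):
    """Return (index, mean_abs) of the dimension with the largest mean |value|."""
    dim = len(vectors[0])
    means = [sum(abs(v[i]) for v in vectors) / len(vectors) for i in range(dim)]
    idx = max(range(dim), key=lambda i: means[i])
    return idx, means[idx]


# Made-up 4-dimensional vectors where dimension 2 is always large in magnitude.
vecs = [
    [0.1, -0.2, 0.9, 0.05],
    [-0.3, 0.1, -0.8, 0.2],
    [0.2, 0.0, 0.95, -0.1],
]
print(dominant_component(vecs))  # index 2 dominates
```

Using the absolute value matters: a component that is large but flips sign between outputs would average out to near zero otherwise.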

antirez (@antirez.bsky.social)

Profiling is always an eye-opening activity.

antirez (@antirez.bsky.social) reply parent

Yep, saw this, Rocco. Mixed feelings so far: I believe the announcement is potentially premature; it's not clear if they will raise the full amount. Moreover, I would have loved somebody to buy it and release everything publicly BUT retain the rights for new products. Still: better than the past setup, if it works.

antirez (@antirez.bsky.social) reply parent

But in general I feel like a lot of features that may lower GPU usage or improve certain use cases (like memory) may tend to have the effect of damaging the user experience. Memory is a non-controllable injection of past chat fragments that may also make things more random.

antirez (@antirez.bsky.social) reply parent

Yep, I can totally see the privacy issues in case of a hard mismatch. There are other explanations for what I'm seeing, like a heuristic that discards the previous (long) context if the chat was idle for X time and some model classified the new question as standalone.

antirez (@antirez.bsky.social) reply parent

With KV caching alone, many questions that are identical in substance are phrased a bit differently, so there are a lot of misses (and many KV vectors cached for the same actual "class" of questions). But as good as it is for their GPU load, it's a problem for users.
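Why rephrasing defeats KV caching: a KV cache can only reuse the attention keys/values for the exact token prefix that a new prompt shares with a cached one. The sketch below uses whitespace-split words as a stand-in for real tokenizer tokens (an assumption for illustration) and shows how a small rewording near the start leaves almost nothing reusable.

```python
def reusable_prefix(cached_tokens, new_tokens):
    """Number of leading tokens whose cached KV entries could be reused."""
    n = 0
    for a, b in zip(cached_tokens, new_tokens):
        if a != b:
            break
        n += 1
    return n


cached = "why is the sky blue during the day".split()
same = "why is the sky blue during the day".split()
reworded = "why does the sky look blue during the day".split()
print(reusable_prefix(cached, same), len(same))          # 8 8
print(reusable_prefix(cached, reworded), len(reworded))  # 1 9
```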

antirez (@antirez.bsky.social) reply parent

What is puzzling is that this is new. Older versions of LLMs never did this AFAIK: even if they were less powerful and more prone to hallucinate, this was never a failure mode I observed in the past. Also, I can understand why they do that:

antirez (@antirez.bsky.social) reply parent

I also noticed other instances of the same problem. Like asking something very similar to questions people very often ask, but with small differences in the wording so that the question was *actually* semantically different. And yet I see the answer to the simpler version of my question.

antirez (@antirez.bsky.social) reply parent

I must admit that I saw clear hits of it only with Gemini. Sometimes, in the middle of a chat, if you ask something that looks absolutely generic in isolation, but not when connected to the previous chat, I see it very quickly output a generic reply, as if the question had been asked "alone".

antirez (@antirez.bsky.social)

P.S. By "prompt caching" I didn't mean KV caching: I was referring to the practice of caching the answer associated with the embedding of a question, and then serving it as-is to some user who asked a similar question. "Answer caching" may be better terminology that does not collide.

antirez (@antirez.bsky.social) reply parent

You are right indeed. I always called KV caching "KV caching" (in papers, too, I always see it referred to this way), but I see that people often use "prompt caching" to refer to it, and providers probably call it that in their API documentation as well. I'll switch terminology, thanks.

antirez (@antirez.bsky.social) reply parent

Are you aware of better terminology for caching the embedding + reply of frequently occurring questions? You don't just save the quadratic cost of attention: you also save the token generation, completely. But it's a disaster for users.

antirez (@antirez.bsky.social) reply parent

I understand that by "prompt caching" one could also mean "KV caching", but KV caching can't be the cause here at all: it would be impossible to match my prompt in the chats I'm referring to, since it's surely unique across all histories.

antirez (@antirez.bsky.social) reply parent

I'm not talking about KV caching, but "prompt caching" as in: turn the question into an embedding and store it with the reply you generated. On a new question, search the DB for similar answered questions; if a match is found, reply with what you already replied. KV caching is a different (deterministic) thing.
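A toy sketch of the scheme described above (elsewhere in this thread called "answer caching"). The bag-of-words `embed` function is a stand-in for a real embedding model, and `AnswerCache` with its 0.8 cosine threshold is hypothetical. The point is to show how a semantically different question can still clear the similarity threshold and receive a stale reply.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


class AnswerCache:
    """Hypothetical answer cache: (question embedding, reply) pairs."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold
        self.entries = []

    def store(self, question, answer):
        self.entries.append((embed(question), answer))

    def lookup(self, question):
        """Return a cached reply if a similar enough question was seen, else None."""
        q = embed(question)
        scored = [(cosine(q, e), ans) for e, ans in self.entries]
        if not scored:
            return None
        score, ans = max(scored, key=lambda p: p[0])
        return ans if score >= self.threshold else None


cache = AnswerCache(threshold=0.8)
cache.store("why is the sky blue", "Because of Rayleigh scattering.")
print(cache.lookup("why is the sky not blue"))  # stale hit: wrong question, cached answer
print(cache.lookup("how do I compile redis"))   # None: miss
```

A real system would use a proper embedding model and a vector index instead of a linear scan, but the failure mode is the same: a one-word change that flips the meaning barely moves the cosine similarity.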

antirez (@antirez.bsky.social) reply parent

The model replies immediately (very little latency to get all the text) and the reply shows that it is not aware of the previous context. I can't see how this is not prompt caching: it is triggered only when the question looks "general" and makes sense on its own.

antirez (@antirez.bsky.social)

Prompt caching is ruining LLMs. Now Gemini does it even in the middle of the chat if the question looks generic and is cached, even if it is quite obviously related to the previous context.

antirez (@antirez.bsky.social) reply parent

In my experience, Gemini 2.5 PRO often provides hints in code reviews that only top-level human coders are able to provide. And top-level coders of today need the wisdom to handle LLM issues in reviews to get the positive value.

antirez (@antirez.bsky.social)

LLMs at peak performance: reviewing shitty code written by somebody else, something that was always horrific to do because of the (almost) active effort in such code to hide how things work / complicate stuff. Poo for the mind. Now LLMs can do it for us \o/

antirez (@antirez.bsky.social) reply parent

I believe reasoning *is* happening in LLMs, to a degree, but it is not happening in the CoT: it happens in the autoregressive model every time it emits the next token.

antirez (@antirez.bsky.social) reply parent

Yep, it's very hard to understand what they wanted to prove with a paper like that, both because of the problem they picked, which is resistant to sampling (the main CoT hypothesis), and because it ignores all the other benchmarks showing that CoT improves performance significantly.

antirez (@antirez.bsky.social)

About the recent Apple paper: many of us believe CoT is a form of sampling/search in the representations the model possesses: this allows the model to "ground" the reply in a large context of related ideas/info. No surprise that the Tower of Hanoi is a problem where sampling does not help.

antirez (@antirez.bsky.social) reply parent

Not my point: in isolation LLMs are great, I use them a great deal. But the day an agent can write the Linux kernel with similar economy, performance, features, ..., is very far off, if we look at the current situation. LLMs as human tools are great at C and complex code, but that's another use case.

Nilesh D Kapadia (@nileshk.com) reposted

Been thinking of revisiting C just to get a change of pace / for fun. It's been a long time. @antirez.bsky.social (Redis author) just started doing C lessons: www.youtube.com/watch?v=HjXB... Watched 1st video & it's kinda a deep dive of an intro (e.g. showing asm output), which is perfect for me

antirez (@antirez.bsky.social) reply parent

We don't have those in Italy. I believe it's something mostly from Arabic culture, if I understand correctly. We have similar-looking wooden "over the wall" structures, but they were used to add bathrooms to houses lacking them, when bathrooms became common. But the shape is different.

antirez (@antirez.bsky.social) reply parent

AI is extremely useful for coding, I've been saying this for 2 years now. But that's AI as a programming pair/tool. Agents are a different matter, and IMHO still very limited in purpose.

antirez (@antirez.bsky.social)

I'm sorry to inform you, and your optimism about coding agents, that our technology is based on things like the Linux kernel or a modern browser: completely outside of today's possibilities. When you analyze AI, think of the Linux kernel.

antirez (@antirez.bsky.social)

Are you from Malta? My uncle moved there years ago and now he has reinvented himself restoring wooden doors, windows, and so forth. If you need his services please drop me a DM or an email and I'll reply with his phone number.

antirez (@antirez.bsky.social) reply parent

took even faster than it was given...

antirez (@antirez.bsky.social)

One of the most embarrassing things in today's software: I have the same folder with thousands of .crt games on my Commodore 64 Kung-Fu II card and on my MacOS M3 Max computer. The former opens the directory faster than the latter.

antirez (@antirez.bsky.social) reply parent

Not sure, they are saving very little: the costly queries are the ones with a big context, and those are hardly cacheable. They are just saving on "why the sky is blue" and stuff like that, from users playing with it. It happened to me with queries that were both short and *could* look simpler.

antirez (@antirez.bsky.social)

Prompt caching is affecting LLM user experience in a severe way. It's been a few weeks of this issue, with Gemini instantly regurgitating a reply only slightly related to my question.

antirez (@antirez.bsky.social)

Human coders are still better than LLMs: antirez.com/news/153

antirez (@antirez.bsky.social)

Every time you use BambuStudio, PrusaSlicer, OrcaSlicer, and the other evolutions of Slic3r, remember that it all started with the Italian Alessandro Ranellucci doing all the initial work needed: teamdigitale.governo.it/it/people/al...

antirez (@antirez.bsky.social) reply parent

Thanks! In the coming weeks I'll produce many videos about vector sets. Fixing a few bugs right now while I still have a cold voice :D

antirez (@antirez.bsky.social)

I'm seeing that many of the best use cases Redis users are applying vector sets to are not related to learned embeddings. For instance, I have a friend who is building a huge-scale molecular search application, and they encode the molecule representation directly.

antirez (@antirez.bsky.social)

I understand that in Germany / Austria those waters are very popular. I tried to understand what the point was, then I got it, and I'm providing an improved version here. From a discussion with my brother, who lives in Vienna.

antirez (@antirez.bsky.social)

That's a very nice comment I received about "Kilo", a small side project that took one or two days but resulted in very large effects.

antirez (@antirez.bsky.social) reply parent

This is the speed at which the output is produced. The only thing they do is that, [instead ][of ][showing ][you ][tok][ens], they show the output char by char at the same speed, as a matter of aesthetics.