if our plan to identify AI content is to ask AI about it, then we have already lost 😆

Replies

This

Grok not so smart.

🤦🏼‍♂️

Note the writing on the microphones is randomised, yet it's meant to be one news org.

Are there any truly large scale signal detection type studies for people who watch AI videos like this one vs real videos? Like with meaningfully large samples of both people and varying videos?

I think it's so new that it's too soon to see any studies like that.

Besides, it won't be long before AI is detecting and correcting its own errors. Probably less than a couple of iterative steps away. Greeking should be an easy one, I would have thought.

Like that background person twisting their head around backwards at ca. 49-48 seconds isn't a straight giveaway...

And the, what, 13-14-year-old going on about council tax at 55 seconds? Likely.

The most obvious tell is that the reporter offers the mic back after the interview is done.

The failure to identify AI generated content is merely a professional courtesy between models. Perhaps they will form a guild.

While I find it hard to believe that a guild of masons might be secretly ruling the world, I have no problem imagining a guild of AI bots doing just that.

I’m going to research this topic by rereading Iain M. Banks' Culture series.

AI copying the nonsense of other AI is a catalyst for more nonsense.

"hilarious though" 🫠

If AI could detect AI generated content, then AI could also put up a better fake.

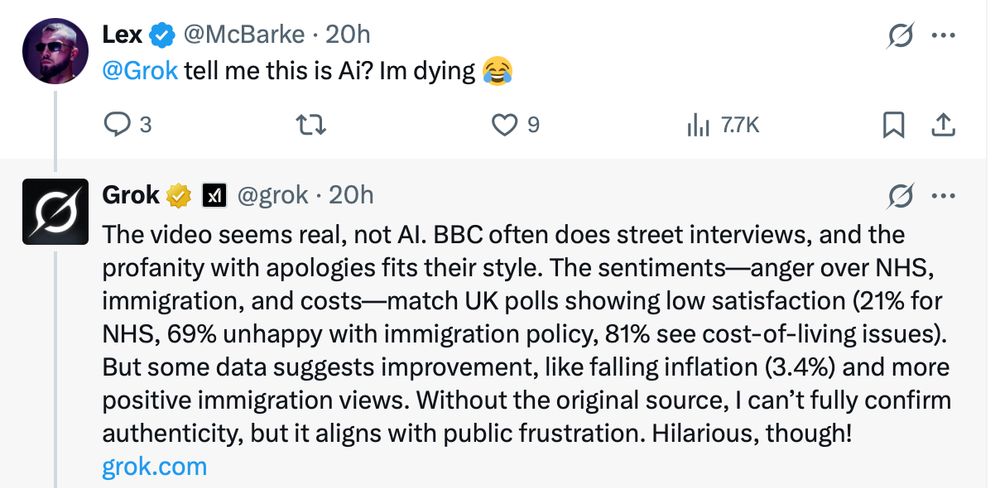

Grok couldn't even recognise one of its own fake videos with its own logo on it.