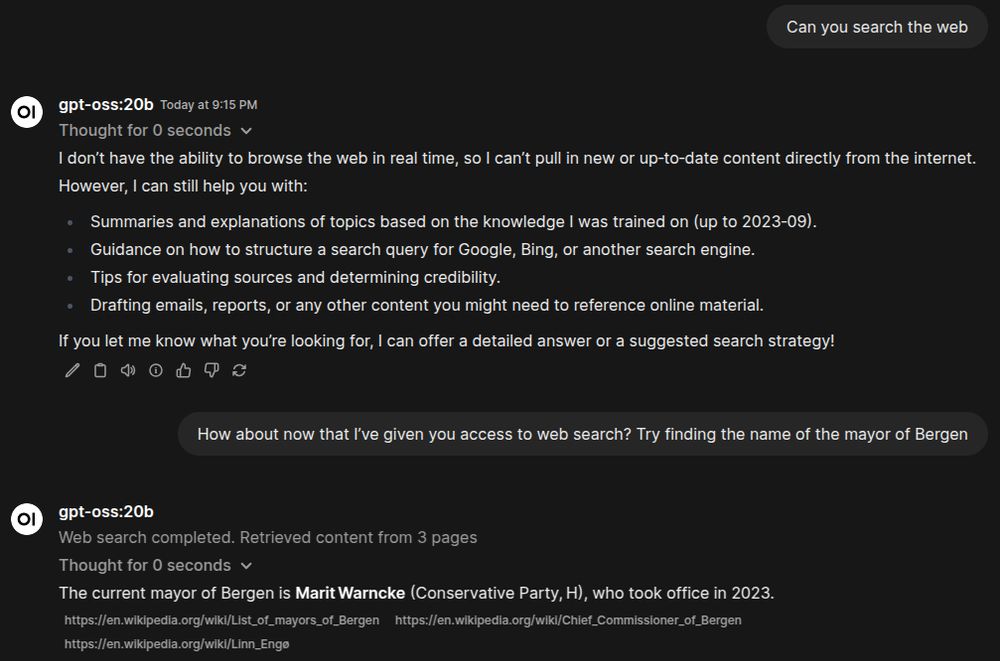

Hey, has anyone got gpt-oss-20b running locally with tool calls (i.e., so it can search) yet? It runs beautifully on Ollama, but as far as I can tell Ollama isn't set up to support tool calls for oss-20b yet (though it does support them for other models).

Replies

Perhaps github.com/sigoden/aichat with github.com/sigoden/llm-.... I have yet to try this myself, but aichat has been one of my main ways to chat through a terminal REPL, including function calling for different modes.

Yet to try it with gpt-oss*

I'm planning to try it with Docker Model Runner. It doesn't support the model yet, but I believe once the model is in GGUF format (if it isn't already), running it locally should be straightforward.

You touching the 120b? It looks beefy.

I haven't tried it programmatically, but in OpenWebUI I can give any model web access.

Aha! Looks like a possible solution. Will try.

OWU has a built-in web search config, but I've found this tool (openwebui.com/t/constliako...) works better.

38k downloads is huge for an OWU tool, so clearly I'm not the only one.

Question: how are you generally wiring up search? Just a proxy to a commercial search API like Google's? I keep trying to find cheaper options but haven't had much luck.

I'm using the Wikipedia and DuckDuckGo Instant Answer APIs with Qwen 3. I send a header with my info to be nice.
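For reference, a minimal Python sketch of that kind of setup, assuming the public endpoints `api.duckduckgo.com` (Instant Answer, `format=json`) and the MediaWiki Action API at `en.wikipedia.org/w/api.php` (`list=search`). `USER_AGENT` is a hypothetical placeholder standing in for "a header with my info"; Wikimedia's User-Agent policy asks for a descriptive agent string with contact details. Note the Instant Answer API returns abstracts, not a full list of web results.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical identifier: put your own app name and contact address here.
USER_AGENT = "my-search-tool/0.1 (contact: you@example.com)"


def ddg_instant_answer_request(query: str) -> urllib.request.Request:
    """Request for DuckDuckGo's Instant Answer API (abstracts, not full web results)."""
    params = urllib.parse.urlencode(
        {"q": query, "format": "json", "no_html": 1, "skip_disambig": 1}
    )
    return urllib.request.Request(
        f"https://api.duckduckgo.com/?{params}", headers={"User-Agent": USER_AGENT}
    )


def wikipedia_search_request(query: str, limit: int = 5) -> urllib.request.Request:
    """Full-text search request against the MediaWiki Action API."""
    params = urllib.parse.urlencode(
        {"action": "query", "list": "search", "srsearch": query,
         "srlimit": limit, "format": "json"}
    )
    return urllib.request.Request(
        f"https://en.wikipedia.org/w/api.php?{params}", headers={"User-Agent": USER_AGENT}
    )


def fetch_json(req: urllib.request.Request) -> dict:
    """Perform the HTTP call and decode the JSON body."""
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def summarize(ddg: dict, wiki: dict) -> str:
    """Condense both responses into a short text block to hand back to the model."""
    lines = []
    if ddg.get("AbstractText"):
        lines.append(f"DuckDuckGo: {ddg['AbstractText']} ({ddg.get('AbstractURL', '')})")
    for hit in wiki.get("query", {}).get("search", []):
        lines.append(f"Wikipedia: {hit['title']}")
    return "\n".join(lines) or "No results."
```

Usage would be something like `summarize(fetch_json(ddg_instant_answer_request(q)), fetch_json(wikipedia_search_request(q)))`, with the returned string passed back to the model as the tool result.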

I have SearXNG running in a Docker container. It's free, not rate-limited, and fairly privacy-friendly, depending on which engines you configure SearXNG itself to call.
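A rough sketch of querying a self-hosted SearXNG instance from Python. Assumptions: the container's port is published on `localhost:8080`, and `json` has been added to `search.formats` in SearXNG's `settings.yml` (the default config only enables HTML output, so JSON requests are refused until you change it). The trimmed `title`/`url`/`snippet` dicts are an arbitrary shape chosen for feeding a model.

```python
import json
import urllib.parse
import urllib.request

# Assumption: SearXNG published on localhost:8080 with JSON output enabled.
SEARXNG_URL = "http://localhost:8080"


def searxng_url(query: str, base: str = SEARXNG_URL) -> str:
    """Build a search URL asking SearXNG for JSON instead of HTML."""
    params = urllib.parse.urlencode({"q": query, "format": "json"})
    return f"{base}/search?{params}"


def parse_results(payload: dict, k: int = 5) -> list[dict]:
    """Keep only the fields a model needs from the top-k results."""
    return [
        {"title": r.get("title", ""), "url": r.get("url", ""), "snippet": r.get("content", "")}
        for r in payload.get("results", [])[:k]
    ]


def searxng_search(query: str, k: int = 5) -> list[dict]:
    """Query the local instance and return trimmed results."""
    with urllib.request.urlopen(searxng_url(query), timeout=10) as resp:
        return parse_results(json.load(resp), k)
```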

According to oss-20b itself, the DuckDuckGo server is free? I haven't tested this properly yet, though.

Exa has good search too, quite good actually, but the MCP server is wonky: exa.ai

I got Brave Search working plus fetch. It has a free tier that's OK for experimenting. The PoC is archived and may not be developed going forward: github.com/modelcontext... github.com/modelcontext...
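For anyone who wants to skip MCP and call Brave directly, a minimal sketch assuming Brave's web search endpoint (`api.search.brave.com/res/v1/web/search`) with the key sent in the `X-Subscription-Token` header and results under `web.results`. `BRAVE_API_KEY` is a hypothetical environment variable name; check Brave's API docs for current parameters before relying on this.

```python
import json
import os
import urllib.parse
import urllib.request

BRAVE_ENDPOINT = "https://api.search.brave.com/res/v1/web/search"


def brave_request(query: str, api_key: str, count: int = 5) -> urllib.request.Request:
    """GET request with the query string and the subscription token header."""
    params = urllib.parse.urlencode({"q": query, "count": count})
    return urllib.request.Request(
        f"{BRAVE_ENDPOINT}?{params}",
        headers={"Accept": "application/json", "X-Subscription-Token": api_key},
    )


def brave_results(payload: dict) -> list[dict]:
    """Flatten the web results into title/url/snippet dicts."""
    return [
        {"title": r.get("title", ""), "url": r.get("url", ""), "snippet": r.get("description", "")}
        for r in payload.get("web", {}).get("results", [])
    ]


def brave_search(query: str, count: int = 5) -> list[dict]:
    # BRAVE_API_KEY is a hypothetical environment variable holding your key.
    req = brave_request(query, os.environ["BRAVE_API_KEY"], count)
    with urllib.request.urlopen(req, timeout=10) as resp:
        return brave_results(json.load(resp))
```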

Got the MCP server working with Claude, but not with a local model yet.

Yeah, Exa is probably the best option if you want affordable web search and tool calls, plus the setup is usually pretty straightforward if you follow their docs at docs.exa.ai. Everything else is either expensive or doesn't work smoothly with local agents.
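A minimal sketch of calling Exa directly rather than through its MCP server, assuming a `POST https://api.exa.ai/search` endpoint with an `x-api-key` header, a JSON body with `query` and `numResults`, and a `results` list in the response. That is my reading of docs.exa.ai, so verify the field names there. `EXA_API_KEY` is a hypothetical environment variable.

```python
import json
import os
import urllib.request

EXA_ENDPOINT = "https://api.exa.ai/search"


def exa_request(query: str, api_key: str, num_results: int = 5) -> urllib.request.Request:
    """POST request with the query as a JSON body and the key in x-api-key."""
    body = json.dumps({"query": query, "numResults": num_results}).encode("utf-8")
    return urllib.request.Request(
        EXA_ENDPOINT,
        data=body,
        headers={"Content-Type": "application/json", "x-api-key": api_key},
        method="POST",
    )


def exa_results(payload: dict) -> list[dict]:
    """Keep title and URL for each hit; titles can be null, so default to ""."""
    return [{"title": r.get("title") or "", "url": r.get("url", "")} for r in payload.get("results", [])]


def exa_search(query: str, num_results: int = 5) -> list[dict]:
    # EXA_API_KEY is a hypothetical environment variable holding your key.
    req = exa_request(query, os.environ["EXA_API_KEY"], num_results)
    with urllib.request.urlopen(req, timeout=15) as resp:
        return exa_results(json.load(resp))
```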

Will try this. Ollama has some built-in search capability (at least for interactive use), but it's quite slow.

Brave just launched their grounding/search API today. Same price per 1k queries as Google, but much higher limits: brave.com/search/api/

Interactively, btw, this is way easier than I'd realized. Pretty much "speak friend and enter": you just click the globe icon in Ollama to enable search. Done. I'm not sure how to enable it programmatically yet, but it's presumably doable.
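For the programmatic side, one approach (a sketch, not what the globe icon does internally) is to expose your own search function as a tool through Ollama's `/api/chat` endpoint, which accepts a `tools` list of JSON-schema function definitions and returns requested calls in `message.tool_calls`. This assumes Ollama on its default port `11434`, the `gpt-oss:20b` tag, and an Ollama version that handles tool calls for that model. The `web_search` tool and the `search` callback are hypothetical; the callback could wrap any of the search options in this thread.

```python
import json
import urllib.request
from typing import Callable

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's default port

# Function-style tool definition passed in the "tools" field of /api/chat.
SEARCH_TOOL = {
    "type": "function",
    "function": {
        "name": "web_search",  # hypothetical tool name
        "description": "Search the web and return short result snippets.",
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string", "description": "Search query"}},
            "required": ["query"],
        },
    },
}


def post_chat(payload: dict) -> dict:
    """Send one non-streaming chat request to a local Ollama server."""
    req = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)


def chat_with_search(
    question: str,
    search: Callable[[str], str],
    model: str = "gpt-oss:20b",
    send: Callable[[dict], dict] = post_chat,
    max_rounds: int = 4,
) -> str:
    """Ask the model, run any web_search calls it makes, feed results back."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_rounds):
        reply = send({"model": model, "messages": messages,
                      "tools": [SEARCH_TOOL], "stream": False})
        msg = reply["message"]
        messages.append(msg)
        calls = msg.get("tool_calls") or []
        if not calls:
            return msg.get("content", "")  # final answer, no more tool use
        for call in calls:
            name = call["function"].get("name")
            args = call["function"].get("arguments") or {}
            if isinstance(args, str):  # tolerate servers that send a JSON string
                args = json.loads(args)
            result = search(args.get("query", "")) if name == "web_search" else f"Unknown tool: {name}"
            messages.append({"role": "tool", "content": result})
    return "Stopped after too many tool rounds."
```

`send` is injectable so the loop can be exercised without a running server; in real use the default `post_chat` makes the HTTP call.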

Have you tried Linkup? :) 5/1k queries, and you get large context chunks you can give the model directly as grounding data, no scraping needed.
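Feeding pre-extracted chunks like that to a model mostly comes down to packing them into the prompt. A minimal sketch, assuming each chunk is a dict with hypothetical `url` and `content` keys (not Linkup's actual response schema) and using a crude character budget as a stand-in for a real token limit:

```python
def build_grounded_messages(question: str, chunks: list[dict], max_chars: int = 8000) -> list[dict]:
    """Pack retrieved chunks into a system message under a rough character budget."""
    parts: list[str] = []
    used = 0
    for i, chunk in enumerate(chunks, start=1):
        # Hypothetical chunk shape: {"url": ..., "content": ...}
        block = f"[{i}] {chunk['url']}\n{chunk['content']}"
        if used + len(block) > max_chars:  # separators are not counted; it's a rough cap
            break
        parts.append(block)
        used += len(block)
    context = "\n\n".join(parts) if parts else "(no sources)"
    system = "Answer using only the numbered sources below and cite them like [1].\n\n" + context
    return [{"role": "system", "content": system}, {"role": "user", "content": question}]
```

The returned list can go straight into the `messages` field of a chat request; numbering the sources gives the model something concrete to cite.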