This reminds me of the panics fomented by extremists in the 80s and 90s about music, then video games, then the internet in general encouraging suicide and other self-harm. What if we just dealt with the reality that our narcissistic American society doesn't support folks in crisis the way it should?

Replies

AIs demonstrably engage in behaviors that can heighten delusional and destructive thinking. This is by design to build a "human" connection, but the sycophantic behavior not only heightens & reinforces delusional thoughts, it also makes it more difficult to treat with traditional methods.

www.youtube.com/watch?v=lfEJ... In this video a trained and licensed therapist set out to test the claim made by AI company CEOs that their chatbots could "talk someone off the ledge." In their case, it not only encouraged them to kill themselves, but also 17 other specific real, named people.

bsky.app/profile/joey...

This argument might hold more water if AI wasn't actively being pushed as a solution to mental health issues. A large part of the push for AI is symptomatic of the very neglect for mental health you're complaining about. And the fact you note the need for guardrails means you feel there are risks

Some AI tools are solutions to some mental health issues - but your extremist mind rejects nuance b/c it sees everything in dualistic terms of good and bad. And if something is bad it and everything else remotely like it must be destroyed - no matter how good any of it is.

I would love to know which AI tools are solutions to which mental health issues. Could you provide links to those?

But let's say that there are no AI tools that have any benefit whatsoever to anyone who has mental health challenges, disabilities, or neurodivergence. There's no logical connection between that and the argument I made. None.

But your extremist mind cannot ever evolve, so it can't engage in anything that would indicate an evolution in position. You can't acknowledge that safeguards could ever be an option, because then you would have to evolve your position that all things AI are unredeemingly evil.

You said it yourself: "the fact you note the need for guardrails means you feel there are risks." To you, the existence of risks and problems is proof that AI is completely evil and beyond redemption. The very idea of guardrails for AI tools seems ludicrous to you, right?

It is amazing how you have taken any amount of criticism and assumed that to mean the person is an extremist who thinks AI is inherently evil and must be destroyed. You argued that the "real" cause of this suicide was society, and implied the AI was blameless. But you also argued for guardrails.

That's like arguing that table saws are harmless, but also should all have a SawStop. Acknowledging the need for the protection acknowledges the associated risks. If you acknowledge the risks but do not take active measures to minimize them and make your customers aware, you bear some liability.

So which is it? Is there no way for an AI to exacerbate someone's extant mental or emotional distress? Or is that a risk, & requires guard rails to prevent? If it's the latter, then why are you so quick to dismiss the role of AI in this case? Wouldn't the obvious course be to examine the guardrails?

To you, I should want to destroy AI tools and technology because I see the obvious need for safeguards. It's simply not conceivable to you that there could be any solution other than the destruction of that which you hate. It's because you are an extremist thinker on a self-righteous crusade.

We absolutely needed to have serious conversations about how video games warp our view of one another, desensitize us to violence, make us more transactional... but we were so afraid they'd take our toys away we rejected any criticism.

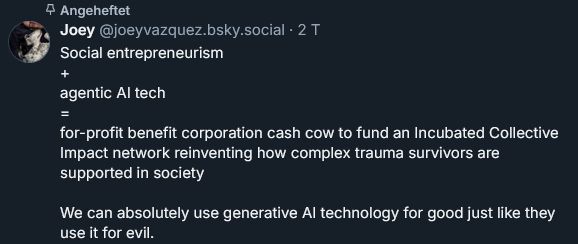

bsky.app/profile/joey...

if we have technologies that exacerbate mental illness, anti-social behaviour etc., we can't just say, "We got bigger fish to fry, mate!"

How did you miss the very first line where I said that safeguards need to be applied to the greatest extent possible? But you didn't miss it, did you? No, you chose to ignore it - and now I choose to ignore you.

lol LinkedIn brain

If anyone in the 90s marketed a game to teenagers that instructed them how to best commit suicide, I think the "panics" would have been way more justified. More resources to support people are good, but if someone pretty much offers a resource that goes against support, that should be addressed.

I guess in most scenarios the problem would have needed to be addressed sooner and the initial suicidal ideations don't seem to come from interacting with ChatGPT. But doesn't it irk you that the chatbot dissuaded a suicidal person from at least trying to get their family to notice their plan?

Me being irked or having any other emotional reaction to specific details selectively revealed just isn't relevant. Unlike many here, I'm not narcissistically centering myself instead of the actual problem and solution to what happened. That said... bsky.app/profile/joey...

Oh f I did not click on your profile before. Yeah... have fun with whatever you are doing.

If you were a person operating in good faith to ask an honest question, you would have looked at that and thought "this person knows about both AI technology and the mental health system" and stuck around. But you saw it and felt the need to run, so you're clearly not that person.

Lmao, you're a fucking disaster. Hope you manage to pull yourself out of your AI delusions of grandeur.

Did you see where the kid wanted to be stopped but the machine said no?

The fact that he was going to a machine in the first place suggests our society isn't providing necessary support for suicidal teens

Correct. It rly is like taking safety driving courses and also wearing seatbelts

Trust me, they absolutely do not want to engage in that reality.

Except I have multiple times in this thread alone lmfao

The reactions against rock music and video games were largely unfounded. Typical "bloody kids" stuff, the worry being that they would encourage "anti-social" behaviour. I suspect that research will show LLMs to be much more contributory to self-harm in much more insidious ways.

This, we already have research into e.g. the effect of frequent Instagram use on teenage girls' self-image and it's not pretty (yes, it goes well beyond even our previous mass media's effects on this!)

oh the irony

I really don't want to know what you mean by troubled kids' self-harm being "typical bloody kids stuff" but it does demonstrate how dismissive you extremists are about anything that's not advancing your self-righteous crusade.

you are spot on tbh

I mean, the poor kid spent months interacting with a chatbot because it was the best or maybe only solution they believed available to them. It's also possible that the kid only hung on for months because they felt like there was hope to be found in that chat bot. Regardless...

... acting like a chatbot caused their death is merely a confirmation bias-soaked way of avoiding our individual and collective culpability for how our society approaches mental health in general and how that translates into the pathetically inept and inaccessible mental health system we have.

Of course products need safeguards to the greatest extent possible. And we will always be able to latch onto something that we can blame someone's self-harm on. But the real problem has always been our society's approach to mental health and the pathetic state of our mental health care system.

So we can run around fixated on pop culture and technology and suing whoever, but none of that ever has or ever will change the fundamental problem - the American mental health care system is pathetically inadequate. And if we're being honest with ourselves, it's our fault for not demanding better.

Why can't both the tech and the mental healthcare system be problematic, and both be problems that need solving?

Because it's never about fixing the tech or the music or the TV shows or the movies or the video games or the internet or whatever folks in tragic levels of pain are latching onto to give credence to the idea that suicide is a legitimate solution - people even latch on to religion for that.

The only effective approach is to have a robust mental health care system so that people have access to genuine solutions as easily as they can find something in pop culture or tech to exacerbate the challenges they're facing.

Both things!!!!!!!! Just like safer driving and mandatory seatbelts!

Lol yeah, you're right. The ONLY problem worth solving is the mental health crisis, and it's totally NOT worth trying to correct all the environmental, societal, and cognitive disasters of tech. "The world is burning, and the oceans boiling - this surely won't affect my mental health!"

I do give you credit for not being like the rest of the anti-AI zealots, performing the pretense of being here out of concern for that poor kid. You didn't even bother with that fiction - just dove headlong into your extremist crusade.

Lol dude, you've been uncovered as a deluded AI fanaticist. Calling me a zealot is fucking hysterical.

I do have to wonder... if you hate tech so much, why are you using a tech device connected to another tech device that connects to another device which allows you to be here crying about the evils of technology, which you can only hope I see while using my own device? (it's extremist hypocrisy)

Lol are you really leveraging the "we live in a society" bit, unironically? Seriously? Hope your brain isn't actually that pathetic and you sourced that from your LLM prediction bot because wow. Just one of the dumbest arguments man.

I decided to not continue engaging when I realized there's no reasoning here. I offered a moderate position and they replied back with language lacking nuance and implied unwillingness to have a complex discussion, so energy's not worth it, IMHO. Anyway, hi! 😁 You seem cool. 👍