Never mind the fact that I REFUSE TO BE REPLACED.

Replies

✊🏽

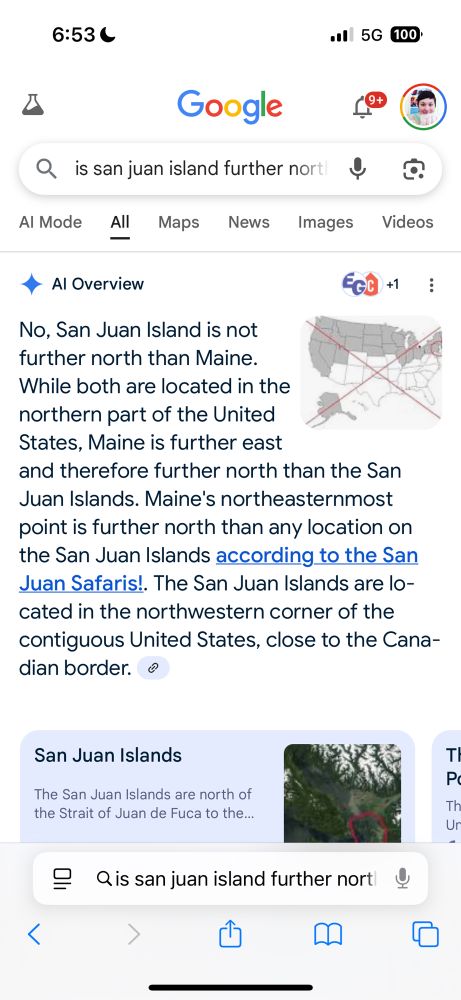

Google’s AI summary told me just the other day that San Juan Island is not farther north than Maine, because Maine is further east. 🫡 🗺️ 💯 (It’s wrong.)

Its answer now is better, but still wrong (or at least severely misleading). All of San Juan Island is further north than all of Maine, but Google's AI summary says, "San Juan Island is further north than most of Maine." The sources it uses to back this up are two Instagram posts.

I think it’s just that it’s inconsistent more than anything. I don’t think there’s been an update since a couple of days ago.

Good point. I'm glad for the example. I've used some of these with my students to explain why they shouldn't rely on the AI summary. My favorite was googling "difference between a sauce and a dressing." Answer: "sauces add flavor and texture to dishes, while dressings are used to protect wounds"

Google AI lies often

Especially with ChatGPT: it only tells you the truth once you tell it the correct answer. AI is not for schools!

Oh, I know -- that's why I use Kagi.

Google’s AI summary has merged @leahstokes.bsky.social and me into a single person 😂

Oh my god! Too funny 😂

I'm not going to play with it, but I wonder how it answers "Can rivers flow north?"

Likewise, "Can mountain ranges run E-W?" (Incidentally, most of Oregon's internal rivers run north or along north-trending courses.)

I can haz geographicalese!

Correction: this is not what Microsoft actually stated. I learned about the preprint from a friend at ESRI who shared an essay by a UCSB geographer who described it as “a list of jobs AI will make obsolete.” This wasn’t fact-checked by the paper, the professor, or my friend.

I get it. It’s going viral. I’m normally careful about fact-checking, but in this case I saw a published piece by a trusted source, shared by another trusted source. That was my first encounter with the Microsoft preprint. The preprint is a list of jobs with high AI “applicability.”

My vote is to pull the plug on AI. It’s too dangerous right now, especially with the felon in chief. Maybe one day when we have a genuinely altruistic governmental and political system…

A lot of people are interpreting this as “jobs AI can replace.” The authors even note that it’s tempting to do this, but job trends are difficult to predict. And I do think it’s reasonable to be concerned that employers will see these as jobs where AI can replace people. It’s already happening.

This is a good reminder to always go to the source. But I also think the response to this preprint is a good indicator that there are large-scale conversations about AI and labor that need to happen. And those researching this need to be more careful about how their work will be interpreted.

Labor AND the economic implications. What will be the policy response to job replacement? Taxing AI work hours? Unfortunately this administration isn’t thinking about a healthy society.

Someone close to me has used AI for very technical medical advice because the medical advice they had been getting sucked. And because things have been improving, the doctor has taken a victory lap. It reminds me of the cyclists who like Waymo because the cars are not threatening.

Which is to say, life is complicated, and I hope my loved ones survive the bad medical system and bike-hostile roads, and that collective action responds to this crisis.

I think this gets at the heart of my argument and message to our students: generative AI is one tool in your toolbox. You can use it, but it doesn't replace all of the other tools you should also have and use, because not every question/problem is a nail.

To wit: when my dad was dying of cancer, I used ChatGPT to "translate" the scan results/notes from his Dr. I didn't assume it was a stand-in/replacement for the Dr simply because I could understand it better, but it helped me to know what questions to ask the Dr to better home in on what dad needed.

But most people don't think/act with that level of nuance; they ask Siri or Alexa or Gemini or ChatGPT something and it spits out an answer and they think surely it knows everything. It only knows that on which it's been trained, and even then on which it's been trained *correctly*.

"Maine is further east and THEREFORE further north"?? I'm curious if their AI knows what the Dunning-Kruger effect is...