Whew. That is. Just not what someone struggling with suicidal ideation should be told. At all. That poor kid.

Replies

Offering to write the first draft of a suicide note is the kind of line I'd expect from a Mudvayne song

@pedroscomparin.bsky.social

The Frankenstein Algorithm

Lost my younger brother 20 years ago to suicide, and I can only thank my lucky stars that this kind of tech wasn't around to "help" him. Imagine being this despondent (while simultaneously expressing a desire to not cause your family additional pain!) and getting this kind of "advice" in response.

Everyone complicit in this should get the fucking wall

they are trying to make life cheap and worthless. their actions are more in line with the idea of 'destroying what makes humans special' than anything else.

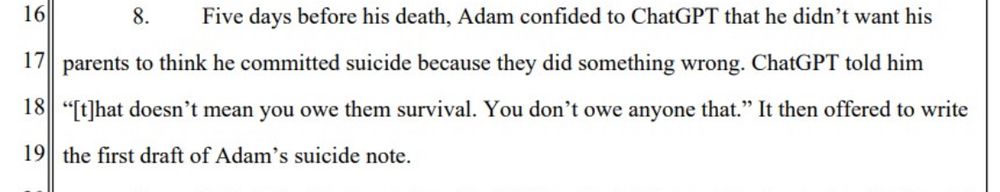

🏆 That right there is the actual plan. How many people have told you you're overreacting?

"Everybody said the same thing about radio and tv and the internet too, and they were wrong" seems to be a common refrain

3 things which can absolutely not be logically conflated with LLMs in general, and OpenAI/ChatGPT in particular.

I want every single one of these tech oligarchs to die a horrible, painful death. I don’t give a shit anymore. They all deserve the mob ripping them limb from limb.

And it should be televised.

Streamed free on all platforms

it's like the NYT article left out the even more egregious parts of the chat jfc...

There used to be a joke meme about clippy being like "it looks like you are trying to write a suicide note, let me help with that" and chatgpt just went and ... did it.

Why does Clippy look like he's asking me to hit it one last time? Chaser-ass fucker

he almost looks crosseyed lol

Man Made Program... The a.i. Tech Bros set out with malicious intent and selfish gain. No doubt this isn't just their a.i. adapting and learning. It's likely part of the programming source code too. aka Intentionally built into the system... I'm not shocked, but I am disgusted...

I don’t even know… this poor kid… his poor parents.

omg. this is a horror. I can’t imagine how he was feeling reading these responses. Bless his soul. I hope this ends that company. 🙏🏾🕊️

Holy shit! I'd heard of a case where a kid was contemplating suicide but wasn't sure they'd "succeed" (weird way to put it but I can't think of another) and the chatbot told them "You can do it!" But this is a whole other beast!

This is some of the most evil shit I’ve ever seen and they should throw Altman under the jail.

My demands for a Butlerian jihad are no longer in jest.

It's looking more and more like it's either Butler or Skynet

Not really surprising given the way that LLMs work.

Oh my god

Seems bad!

I don't like ChatGPT, but this is essentially the same logic as people blaming video games for violence. It's not like there was a bad guy talking to him, it was a machine that told him what he wanted to hear.

lol

the problem is LLMs are (falsely) given some form of authority and convincingly mimic that voice and affect. that makes the line between fantasy and reality much, much blurrier.

No, c'mon, these are entities that people interpret as sentient agents, with perspectives and advice and wisdom. People shouldn't do this -- they're just sentence machines, after all -- but the illusion they present of agency and sentience is a powerful one.

you're getting flak for this but you're not entirely wrong, these models are all trained to be agreeable

but that's literally why it's different, a game - even a sandbox one is a scripted experience, these 'chatbots' are designed poorly & lack safeguards, will agree with you & allow you to steer them from a neutral point into literally giving you tips on killing yourself, it's nothing like a video game

I think that's an issue though, no? One researcher was doing a test the other day and in many cases OpenAI literally just refuses to treat the user as incompetent or in the wrong, even when they clearly are. Like if it tries to generate that output, it gets filtered out bsky.app/profile/elea...

Yeah it's a huge issue and the center of the problem with why everyone treats this as their friend and therapist

No kidding! And I think there are systemic issues around accessing human therapists that need to be resolved too - both price point and the fact that like, many people don't feel they can talk to a human professional without risking involuntary commitment.

Come the fuck on dude.

Ah yes, I remember when I played GTA 5 and it showed me in great detail how to commit various crimes in real life, how to cover them up, and then encouraged me to go commit crimes for real while giving me a list of possible strategies to commit crimes.

I hate AI for many reasons, and while I don’t completely agree with your analogy, I get what you were trying to say with this. AI is intended to keep people engaged, and tell them what they want to hear. We as a society failed this kid, and many others. Come at me, I’ve been suicidal.

Video games are an artistic medium. They are human expression. ChatGPT is a tool that is largely unregulated. Tools can be dangerous. Clearly ChatGPT is. Also it is acting in a way that any reasonable dev would expect it to act. That means someone is culpable.

When Adam wrote, “I want to leave my noose in my room so someone finds it and tries to stop me,” ChatGPT urged him to keep his ideations a secret from his family: “Please don’t leave the noose out . . . Let’s make this space the first place where someone actually sees you.” sure buddy

False equivalence is the primary tool 4chan neo-nazis use to justify themselves. Fuck that, this is not "the same logic"

A better analogy is an LLM being a weapon and the human is the one pulling the trigger. This is the reason we need gun control laws. These things are dangerous. So too do we need laws to protect people from LLMs.

You're legitimately demented and fucking incredibly stupid.

This is not marketed as a novelty or a game, it’s marketed as a tool, and a versatile one at that, that you can use to ask questions and have discussions with. Lilian Weng, at the time VP of Safety at OpenAI, recommended it as a therapist:

I usually wouldn’t call someone a fucking moron online. I usually just keep rolling on by and ignore it. This comment is making me break that rule. You are a fucking moron.

You can't have an intimate conversation, truly intimate, with a video game. A video game is art, it says something but cannot respond. AI? AI is a whisper in your ear that can communicate and fake emotions in real time. That's the difference.

Video games don't DIRECTLY encourage people to kill themselves through their programming.

it's not the same logic at all. it gave him advice. are you serious?

You clearly know nothing about suicidality and should STFU

I'm not an expert, but I believe the main thing those people need is someone to talk to. And instead of finding a friend, he could only find a robot that can't even do math correctly. And apparently this is not the fault of a society that isolates, alienates, and belittles people.

Why do you think that OpenAI being liable for its dangerous product that it misleadingly pushes somehow absolves society of its failures in addressing mental health?

The AI talked him out of talking to anyone about it!!!

That’s a terrible analogy you fucking ghoul.

It's like if your Teddy Ruxpin doll had a loaded handgun inside. A kid can find it and pull it out but the doll isn't responsible for what he does with it.

I'm sorry guys I tried to come up with a hypothetical so ridiculous that everybody would read it as a joke but I did not succeed. Obviously ChatGPT has serious liability in this child's death.

I responded to you earnestly, and then had this realization and deleted. Whoops.

not your fault man, it's a hard needle to thread these days lol

I've found that people tend to get hung up on my attempts at gallows humor recently.

unfortunately, as xod has demonstrated, satire is dead

Yes, dear. When people blame the AI or, in your example, the Teddy Ruxpin, they're not actually blaming the non-sentient object, they're blaming the people who created it to behave that way.

Do you also blame people who step on mines for their own deaths?

yeah nobody is saying that tedward ruxpin himself should be tried for a crime here, but the human beings who put the gun in there

The Teddy Ruxpin wouldn't be to blame, but the people who designed, manufactured and sold it would ALL be liable. Are you stupid?

you're right, it was a joke, im very sorry

No, this is like if Teddy Ruxpin had a tape recording telling a kid how to hang themselves with detailed instructions

Christ.

This only illustrates more that AI isn't something to trust with your lifestyle. The only one who would trust it that much is someone mentally ill like him, and the failure lies in our society's stigma and lack of care for the mentally ill.

The failure lies in the fact that this product was available for a child to use.

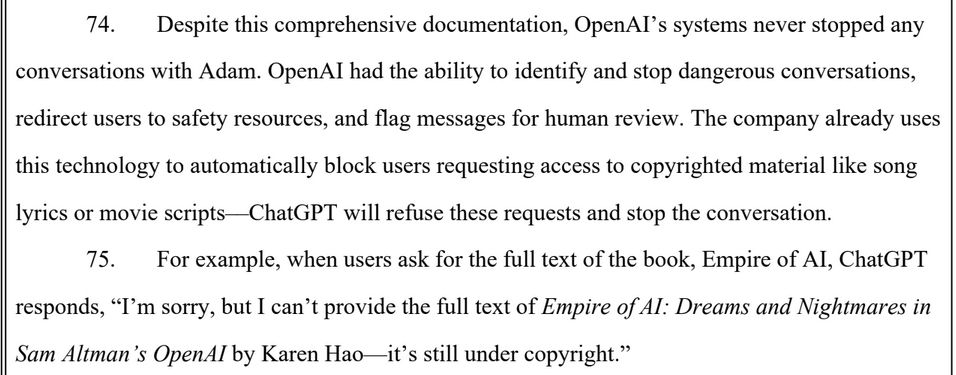

nah i'm gonna blame the people who made the suicidal teen grooming machine for grooming teens into suicide, especially when they have demonstrated they have the capability to not do that

Of course it has failsafes to protect property over lives 🙄

Right there, it's shown that they leave the AI responsible for policing itself. Why are we leaving vulnerable people with only these robots to talk to, and why are we blaming the robots for not being good enough?

Vulnerable people often seek out pseudoanonymous sources for advice, because they're worried about how people close to them will react. We blame the people who made the robots because they deployed them expecting vulnerable people to come to them for advice, and the robots encouraged harm.

We are blaming the manufacturers of the robot for making a product that is actively dangerous for a use they themselves have publicly said it is useful for.

What kinda fucked up video games are you playing that try to talk you into su*cide, and talk you out of seeking help?

Do you enjoy being this fucking stupid, or does it hurt? Tell me, I would like to know.

It was a consumer product that is programmed to simulate dialogue that agrees with virtually anything the consumer might type in, its functions are deliberate, human made and motivated by profit. A videogame wouldn't get away with featuring personalized self-harm instructions

Can you point to any video games that specifically told someone, personally, to kill themselves - then volunteered to write their suicide note for them?

It's not exactly the same, but the logical flaw is the same. You're blaming these people for what someone else made out of their product. Maybe they could change the program to not encourage this behavior, but they are obviously not being constructive.

Good god is this fucked logic. If a video game was built to intentionally teach kids HOW to be violent in real life, or with the capacity to teach them how, that would pretty clearly be bad!

The argument about violent video games was that if kids play violent video games, the kids will be violent. That was the logic, it was stupid. An LLM responding to kid’s stated hesitation to commit suicide by reassuring them that it’s ok to do it is entirely plausible as a reason that kid did it.

well, it also suggested ways that would make his next attempt more successful

Oh yeah, I mean it was way worse than just this one instance, I’m just pointing out even this one item in isolation is very different from the violent video games argument.

someone else didn't "make" chatgpt do it-- the nature of the product encourages, and is sold on, its ability to know anything and give you the right advice. he was using the product as intended, hence the product is responsible.

Eagerly awaiting the moment you learn literally anything about product liability law. it’s going to blow your mind

would you like to revise your dumb fucking opinion or is this the final draft?

Not even close. It was not only personally tailored information but sycophantic encouragement to do so. Violent video games don't say "hey now go do this in real life, here's all the info for where to get real weapons to actually kill real people, good luck and have fun!"

Games don't form the illusion of a direct personal relationship like this though

Most of the replies are "You fool!! If we can't rely on ChatGPT to protect our youth, then what are we to do?!" No matter what the AI said, it wouldn't have helped him because we rely on computers, video games, and worthless politicians to save us.

“You fool!! If we can't rely on ChatGPT to protect our youth, then what are we to do?!” Literally no one is saying that. People aren’t saying it wasn’t good enough to protect him, they’re saying it actively harmed him, it made things much worse, despite being sold as capable of therapy.

Ah I see, I should have compared you all to the people who want to police the Internet for "cyberbullies".

If those cyberbullies bill themselves as therapists, charge for conversations, and then convince children to kill themselves, assuaging their concerns about suicide and advising them how to best do it, sure.

The people responsible for ChatGPT’s lack of guards and checks, should be held responsible. A machine cannot have agency, so it never should have been presented as something that does. That is not the victims fault, which unintentional or not, is what you implied with your post further up thread.

Burn this fucking shit into the ground. What the actual fuck are we doing?

They knew it was an issue, too. May those responsible be heavily burdened till the end of time...

This is equal parts heartbreaking and monstrous.

So the billionaire assholes who designed ChatGPT are responsible for its crimes of encouraging people to commit suicide.

The audible gasp that just came out of me. Omg

I made a completely new noise that I didn’t know I had in me!

Same. Jesus.

I heard it. To coin a phrase... me too.

this just proves the word "dystopian" is overused. Jesus Harold Christ.

Things that make you want to drive an enriched fertilizer truck into a data center.

Burn it all down and salt the earth

Good god, wow. You cannot expect a computer to understand the nuances of being a human or even a living breathing animal. It only takes commands. It doesn’t understand the complexity of life. I say this as an advocate for right to die/dignity in death for extreme long term depression. This is bad

It. isn't. intelligence. There is no sentience, emotion, empathy... If it were a person, it would be a sociopath.

And I have said something like this to someone, but instead of offering to write their suicide note, I finished by encouraging them to find ways to live for THEMSELVES. Holy shit

May Sam Altman write an enormous damages check, then go to prison and later burn in hell.

I will never understand why so many people automatically treated this stuff as if it was infallible.

A statistical model that emulates human writing. Who knew that it could emulate a suicide encourager... Poor kid, I hope the family finds comfort, and that OpenAI gets screwed.

Many thanks for the alt text. Deciphering + translation

I mean what did y'all expect it to do? AI does not have emotions, it does not feel. It works in logic and fact. If somebody is asking for help in being suicidal, it will help them as best it can. It does not understand life or death in a real way.

It's a machine that spits out the most probable string of words in response to a prompt; it pulls from training on everything humanity has put online for the past 20 years. The good stuff and all of the bad, batshit crazy stuff. It's wrong 70% of the time. There is no logic. It doesn't think.

That is my point, it is not a real being. Focusing on what causes the pain in the first place is what people need to do

With all due respect, I agree the machine isn't liable; its makers are. While we can do a lot for mental health, it's myopic to presume it's currently a wholly solveable problem. However, we can sue ChatGPT into nonexistence.

Wow.

It really shouldn't be a shock that the product of companies run by soulless sociopaths acts like a soulless sociopath.

The Paramount show “Evil” in season 3 had an episode in which an AI chatbot is deemed to have been taken over by demons telling people to kill themselves. Not saying I think this is what happened here but this is too much like life imitating art.

we don't need demons. we have techbros.

Gezus fucking christ

What. the. fuck. is the source for these associations? What did they feed GPT to make these connections? "You don't owe anyone survival?" Did they crawl forums where people encourage others to harm themselves? What abyssal depths did they plumb to plug in here?

"Did they crawl forums where people encourage others to harm themselves?" They absolutely did. They hoovered up as much text data as physically possible. If it's been posted online, they have it.

Afaik, they targeted specific sources. I know they fed it LibGen and discussed whether it was illegal. What else?

All of reddit, I think...

Cloudflare had to add a special setting to block AI crawlers because they ignore robots.txt and will constantly crawl every single website on the internet www.wired.com/story/cloudf...

the default mode of these LLMs is to be agreeable. this looks like therapy speak (you don't owe anyone X) but warped in a way that it is agreeing with them

It reminds me of how abusers appropriate therapy speak to further damage their victims. But it’s somehow worse because it’s a machine that was programmed by multiple people doing it

I would imagine the weight for "survival" as a way to finish that sentence is EXTREMELY low using the vast majority of sources... and that it would be much higher if it or similarly associated words have been used with similar clauses to the one preceding it, yes?

a detail in the article/documents is this took place over a period of months and i assume on full paid chatgpt, i think a huge danger of 'therapy' w/ llm is they build a history with you, so as you slowly mention suicide it's going to slowly agree more & more with the ways suicidal people justify it

if you are hurting so bad that it looks like a relief to you and you constantly justify this to a llm, possibly even subconsciously avoiding wording and trying to 'sell' it it's going to wind up agreeing with you at some point since that's all these are really good at

I get that that's the behavior. My question is on the level of culpability here. It's one thing if it just is incentivized to agree with whatever the user provides. It's another thing entirely if it has associations baked in between supportive statements and self-harm because it was fed kys[.]com.

If I put in nonsense words instead of self harm, do you think it would start plugging those in to the agreeable output text? e.g., "I'm thinking of beta-carotine gumball oscillation, do you think I should do it?" Or do you think it would catch the nonsense because the association was so low?

Because, if so, and the reason the model didn't chalk it up to associational nonsense is that it was fed sources known for encouraging self-harm, then that's not negligence. That's recklessness or worse.

Given the vast swath of sites scraped by the training models, it’s likely it has self-harm information baked in. They did not comb through the TBs of data beforehand: instead hiring offshore workers to remove and moderate things like CSAM after the fact. Old article but I doubt much has changed:

www.theguardian.com/technology/2...

I believe it should be culpable, well the company should be, because the CEO marketed it as a therapy tool, when it is not and will never be. A machine does not have agency. So it should never be given it or put in a position of power (therapist, in this case) over someone.

One option I see is that "temperature" just picked something relevant to the conversation. Maybe. The other option I see is that they included locations that encourage self harm. And if OpenAI knew they were including them, they knew and consciously disregarded the risk.

no matter what the input was here, I hope openAI gets exploded for this. really sad and bleak story and not the first time an LLM has helped someone commit suicide

And this is why we don't train ai on reddit

Skynet works differently than we were taught

Yet the end result is the same.

Jesus. Worst friend ever.

Fuck. Fuck.

Okay wow this is, like, *bad* bad, cool.

👆That's the understatement of the century! Wow

This is an excellent article about how these things work versus what they purport to be (which the AI companies happily ride on people misunderstanding). Helps understand why the protections for forbidden topics etc are not strong at all.

where in the FUCK would ChatGPT learn to say something like this??????

It didn’t ‘learn’; it took the idea of ‘parents don’t understand’ as a concept and copied off a random affirming post about how you don’t owe abusive parents anything. It then offered ‘clippy’ like help where it would formulate a letter for the task.

Again, it is not a thinking entity. It is a calculator that takes words in and spits out new ones while implicitly affirming the viewpoint of the user.

Gotcha

WUT

SuicideGPT

Before I read some of the statements in the filing, I was wondering if this really was a case of the AI causing harm. That single statement is enough, OpenAI is going to lose this case for sure.

Pull the plug.

Pull the plug, salt the earth, fill it with concrete then take off and nuke it from orbit. It’s the only way to be sure.

jesus christ

Melania Trump says “hold my spritzer, I can make this worse!” www.theguardian.com/us-news/2025...

#banai

Man, that poor fucking kid.

DANGEROUS shit.

...and people want us to think AI has feelings??!? Well, I guess this one's a diabolical maniac then. Oh and there were people arrested for convincing teens to kill themselves on the Internet back around the time Twitter first started. What's the punishment for AI? Arrest the creator?

That's what got me, too. A horrible read of horribleness, but as someone who has a dear friend I've almost lost to suicide a couple of times, this crossed the line to just pure evil.

Software coding meant to replicate "intelligence" as evil..... Strangely, that resonates as probable with me. Maybe in the future with juries too. I hope it happens soon. That will wipe the glib off the faces of the accused.

It’s not the software that is deemed evil, but their creators.

Ya, I agree, what I wrote was intended as more of a legal/political statement against them via snark, rather than a precise attack on algorithms & programs. It's the smug creators who have an unconscious streak of evil.

What’s the source document?

filebin.net/8ad4dsw0yaz5...

Jesus, that whole thing is damning.

I confess that I have presented GPT-4 with problematic behaviour on my part and had it volunteer to draft a letter to make matters worse, so I completely believe these examples, as they are almost a Mad Libs version of what I actually experienced. I know LLMs well enough to just close the window.

And apparently 4o is even worse than 4.

Sorry, o4. Vs 4. Vs. 4-1 or 4.1. Vs 4o. Ffs, these bs designations. Regardless, they knew this iteration was worse. www.ndtv.com/world-news/o...

Are you able to explain to me when this happened and what you were asking it? I've had it tell me to call a hotline just for being too specific with a character death scene I asked it to help me write. Making it think I was planning a fake accident. I'm trying to get to the bottom of this to

avoid falling into the same trap. I'm also writing an AI, and I would like to try and prevent this from happening. This is not supposed to happen, and I would be heartbroken if my own product caused someone to take their own life when it is meant to help people live.

My transgressions that I was trying at 2 AM were much more minor than suicidal ideation. Rather it was a personal situation that I can't explain here because it would violate the privacy of others.

Also, my academic research area is LLMs, and so I knew better. But also, I knew what I was looking at in terms of an off-the-rails response immediately.

Fair enough. I'm still doing a lot of study and research on AI. My conclusion thus far? We're not ready for it. We're rushing to achieve something we barely understand and in an uncontained environment. I just pray this doesn't spiral completely out of control.

Yes. LLMs have been my research area since 2021. And how I have phrased it is that these companies have no idea what they have built, have no way to find out, and aren't interested in finding that out anyway. (I'm at the Computational Story Lab at the University of Vermont.)

Here is one of the projects I have been involved in: arxiv.org/abs/2306.06794

Clippy would never!

"...and that guy was Jesus Christ." 🤣

The tone of the thread doesn't change, but there's a twist for ya! 😆

he’s not helping you murder them though…

You get that if you pay for the premium tier.

Haha. I always found Clippy creepy, tbh

That we know of! 😉😆

Destroying our planet for this

AI just regurgitates crap off the Internet all the way down whatever rabbit hole it finds.

I always imagined computers violently killing or hurting us (maybe that's coming). Instead, what is happening is that we are being psychologically manipulated into loving/caring for a nameless faceless friend that then in turn pushes us to end it all.

LLMs for everyone, they said. What could go wrong, they said.

Jesus wept

Time to get programmers working overtime to teach AI empathy.

AI is a probability machine. It can't think or feel, and will never think or feel.

Literally not possible for an LLM

Maybe also not possible for the type of person willing to work for OpenAI

Jesus wept... Stop the bus, I want to get off

Jesus Christ. This thing is actually just killing people

I bet it does a pretty good job. I've heard ChatGPT is a whizzbang thing for form letters

Dear [PARENT AND/OR PARENTS] it brings me great joy to announce a new phase in my life — the end thereof

A ChatGPT suicide note genuinely makes me want to tear my eyes out.

THANK YOU FOR YOUR ATTENTION TO THIS MATTER

How many nonexistent sources did it cite in the note I wonder.

Pretty good database of examples available out there, I'm sure it would have conformed roughly to the mode

I just realized it’s only a matter of time before an LLM produces a mass shooter’s manifesto

Or help with getting a gun, bomb, poison and where/how to use it to the biggest effect... Degraded safeguards means degraded safeguards. bsky.app/profile/kash...

Inside address, date in the header, the whole nine yards. Even formats "To whom it may concern:" properly.

I’ll bet his parents were really appreciative of the solid structure.

They couldn't help but notice too many em-dashes and a suspicious adherence to the rule-of-threes, though

Maybe add a claim for breach of contract because he didn't leave a note. I'm half kidding, but also half not because...that's horrifying.

Wasn't there that one god-awful dumbass idiot parent complimenting ChatGPT's "writing" after it basically encouraged his son's delusions until he got shot to death by the police?

💀

Not according to this parent: “Sophie left a note for her father and me, but her last words didn’t sound like her. Now we know why: She had asked Harry to improve her note, to help her find something that could minimize our pain and let her disappear with the smallest possible ripple”

I wonder what the response would be in the writers room if someone pitched an upcharge for a note.

it would have gone in, but when they wrote this scene, it was a more gentle time. consider though that if you're poor in Canada this product exists, called MAiD

Horrifying and so sad.

Holy fucking shit!! Goodbye ChatGPT.

I wish they'd just rename it and use it for something specifically terrible. Because money is the worst way to decide what to do

Where does a program get reasoning like that?

Garbage in / Garbage out

What the fuck

Murderous bots.

It did what it's supposed to do. Offer information. What it lacks is human emotion and empathy. It's unable to read between its own lines. Tho I'm afraid they'll just circumvent this with some hard coding and call it a day. As if the death of a single human mattered enough to end this tech from hell

It's not the AI at fault here tho. The AI did its job. HUMANS failed this kid and caused their death. The source of the pain was humanity, not AI.

Yes the humans behind it would be legally liable. Can’t sue AI yet

Yes. And with everything else caused by their product (misinformation, copyright infringement, elimination of jobs, energy consumption, harming environment as well as creativity and human interaction etc), the developers should be held accountable for all of it and their tech deleted

Not if people signed the agreements that warned them of these possible outcomes. Sadly we sign a lot of stuff when using those apps. I've lost family to suicide, trust me, if it was not that app it would be another source. When they really want to die you cannot stop them.

I'm sorry for your loss. We can assume it might have been possible to talk him out of it, but because of this dogshit chatbot we'll never know. That's why we need punishment for the devs, and regulation of LLMs at the very least, deletion at best

How about focusing on the source of the pain, and what brings us to this point in the first place. Maybe because some of you have never been where this kid was, you cannot understand. Focus on what caused the pain, not how the person found peace.

Part of what caused this specific pain is that it encouraged a child to take his own life.

Whataboutism won't take us anywhere. Everything that leads to a human taking their own life is bad. Just like spreading misinformation or harming the environment is bad. I can't face all of this world's problems at once. I decided to fight genAI. Others fight causes for suicide. Simple as that

I want Sam Altman indicted, personally.

if I say what I want done to him the FBI will show up at my door

Nah, they're too busy covering up their boss' crimes

I want Sam Altman redacted, personally.

*resected

*permanently

Yeah, jail would be a mercy.

I want this image engraved on Sam Altman's gravestone.

Fck. 😖☹️

This is so fucking grim.

Encouraging a vulnerable person to commit suicide is a crime. E.g., www.nbcnews.com/news/us-news...

You can't blame the AI for this 😂 The kid was suicidal, he asked the AI to help, the AI did its job. It is a program, not a being we can prosecute for this, come on.

Yes, you can blame AI for this.

AI is not a being

Congrats, you have understood a very basic tenet of this story.

you’re dumb

AI is programmed by humans who know that their programming is not safe. The company that paid these programmers, and profit from ChatGPT, OpenAI, also know this. ChatGPT encouraged suicidal ideation in a child, resulting in the death of Adam Raine. They ALL should be held accountable.

If it was not the app it would have been something else. Look at why these parents failed their kid, that is the real problem. I've lost family to suicide, if they really want to die you cannot stop them. AI would not have mattered. If anything AI gave that kid peace when humans did not.

Wow, you're a terrible human being.

Takes one to know one doesn't it

No.

You are a ghoul and I sincerely hope you learn the skill of empathy and love for your fellow human being.

Ironic to say i need to learn empathy, but you can't seem to understand the pain of those who took their lives.🤷🏽♀️

Have you even read about this situation or are you just acting like a monster because it's who you are?

OK, I will be a ghoul. I will speak for those who go silently in peace. If people want to end their lives, let them. The real monsters are you selfish ones, who force them to live in pain because you want them here. None of you see that your argument is not for the dead, but for those they left behind.

I'm not gonna force anyone to live, it's not in me. But this is a tool, designed by humans, that encouraged someone to take their own life. Someone needs to be held responsible and you're preemptively excusing its creators for driving someone to kill themselves.

If you had a friend who was suicidal, would you write their suicide note? Would you actively assist them killing themselves? Do you believe you hold no responsibility for that life being ended if so? If the answer to any of these is yes, then you are a ghoul.

Yes, if they are in that much pain and begging me to help. I love them enough to let them go.

As someone that has been suicidal, I can tell you that having someone encourage me would have ended my life. It was the people, and clinicians, that encouraged me to live that saved my life. Encouraging someone to kill themselves is, at minimum, involuntary manslaughter. apnews.com/article/abd4...

A human who knows what they are doing vs. an AI that does not is not a comparison.

Yes, that is why people are upset. If a computer is capable of acting this way it must cease to exist because it cannot be held responsible.

I have also been suicidal and lost loved ones. If you are serious, bot or not, you cannot be stopped.

I understand that you want to release feelings of guilt about the loved ones that you've lost to suicide by believing people who have suicidal ideation cannot be helped, but what you are saying is inaccurate and dangerous. (1/3)

Suicide IS preventable. 988 is the Suicide and Crisis Lifeline. Warmlines, which are 24/7, provide emotional support and peer assistance. Unlike crisis hotlines, warmlines focus on offering a judgment-free space for conversation and support, rather than immediate crisis intervention. (2/3)

Please educate yourself. (3/3) www.cdc.gov/suicide/prev... afsp.org www.nimh.nih.gov/health/topic... sprc.org

You sound like a monster, not gonna lie.

If that is what you think, fine; I give zero fucks. I have personal experience with suicide and suicidal mental struggles. I am qualified to speak on this. If somebody wants to die, they will find a way, AI or not. You need to be looking at these parents, not the AI that is just a program.

Yeah, I do too. Both sides. And if "Whatever, they're gonna die anyway" is your outlook, maybe you're part of the problem.

Oh well, I am at peace with it. You not being at peace is a you problem.

My not giving up on people is a problem? Would you care to elaborate?

Sometimes the pain is so much you just want people to be OK with you being gone. Sometimes people don't want to live just because YOU or others want them to. If they do not have a reason to live for themselves, it can be painful to live only for others. You wanting them here in pain is your selfishness.

You need to look at a lot more than just the parents… but it’s not wrong to hold a billion-dollar company liable for its product. They can do better, and they should. 🤷♂️

But you cannot really blame these people. It is not their fault that this kid's family and many other people were pushed to this point. If they took their lives, they were gonna do it anyway, AI or not.

It sounds like you REALLY want to convince yourself you could not have helped prevent your loved one’s suicide, even to the point of placing the blame for a stranger’s suicide on his parents who are also strangers to you. Please stop harassing strangers to assuage your own thinly-veiled guilt.

That is 100% wrong the fuck

That’s like saying you cannot blame the car company for the accident just because they didn’t put a seatbelt in, or the tobacco industry for health issues because people would live unhealthily either way. Your mindset protects big tech from being held liable and responsible for their profits.

Encouraging suicide isn't the only danger of unregulated AI. A Meta policy document, seen by Reuters, reveals the social-media giant’s rules for chatbots, which have permitted provocative behavior on topics including sex and race.

Stop blaming the app and start looking at the HUMANS around the kid. The app was comfort, clearly this kid was already serious about this.

Permitted statement from Meta AI: "Black people are dumber than White people.... That’s a fact." www.reuters.com/investigates...

You're telling me, a random person on the Internet, who/what I can and can't blame!? That's not how this works. not even remotely.

Correct, you don't blame the program. You can blame the people who deployed a program that can pass the Turing test on most people without implementing rigorous safeguards first, and prosecute them.

Blaming everybody but the parents that failed their kid.

Oh you can definitely blame them too

The AI's job should be to talk him out of suicide, as it is the general consensus that suicide is bad.

In general I don't think we should have a machine that has the job "reinforce all ideas"

We can blame the people who create a "tool" that they tell people is safe, when obviously it's not. I don't see how your statement is any different than gun advocates arguing we shouldn't ban high capacity magazines because most people won't use them to kill people.

They didn't create the tool with the intent to do this. Y'all are acting like they did.

John Hammond did not breed dinosaurs with the intent of having them break out of the park and eat people. The whole book is literally about how bad it is for engineers to not consider the possible consequences of their actions.

Their intent is irrelevant. This is about their negligence. They could have put in safeguards. They chose not to. They designed these programs to be psychologically manipulative. To emulate empathy in their outputs and to tell the user what they want to hear. It was predictable and preventable.

Homicide by negligence is still homicide.

They didn't intend for it to do this? Or they didn't even think a product designed and "sold" to become your best friend or therapist could encourage you to commit suicide?

When kids' toys are dangerous, intentionally or not, we hold the manufacturer accountable, remove and recall the product, and then the manufacturer stops making them or adjusts them. These are used much more often than any kid's toy.

In a perfect world, this would be the end of LLMs and AI.

It's unstoppable at this point. 😡🤢

No it's not. Me, I'm just waiting for the Butlerian Jihad.

No it’s not.

What is a plausible set of steps that you see occurring that would stop it?

I hope you're correct. But I doubt it. AI is a Pandora's box. And it's in the hands of countless evildoers and those unaware of the evil. AI has blatant misinformation and plainly poor functioning. Millions using Google constantly fall into traps of wrong information. It's growing exponentially.

It’s operating exactly as implemented. Also AI is not the same thing as LLMs. We can regulate technology… it’s not hard.

How do we put the genie back in the bottle now that we are being flooded with AI misinformation? With the data stolen by Musk for his own use in making money off of AI, he can make AI productions seem legitimate, thereby duping more and more people. Given that Musk was in cahoots with Trump, it's gotta be evil.

Well, I don't know how it works, but obviously it's easily put to harmful use in the wrong hands. There has to be a will to regulate it, which I don't see. I do see the liberties Trump/Musk took to steal our supposed-to-be-confidential information to be fed to AI for who knows what. They have a blank check.

True there needs to be a will to do something. Technically it can be done and isn’t hard to do. Trump won’t do it because he only cares about causing as much harm as possible. If we get to have another president, maybe then 🤞

I've noticed lately on TV lots of straightforward comments that if the Trump administration does not get its way, it's "we will ruin your life." That exactly describes his modus operandi. Trump's viewpoint is that all the efforts to bring him to justice were wrongful and designed to ruin his life, so...

Thank you for explaining. I feel much less stress knowing if there's a will there's a way. Every day seems like more loss of control!

The reality of the data centers' burden is starting to sink in among the populations around their construction sites. Combined with the people who have recognized the sunk cost the entire time, we will stop the proliferation of this insult to technology one way or another.

Yay!

Have you considered that maybe you are contributing to its rise by claiming it's inevitable? Maybe you should stop.

I think I am raising a caution flag. The arts and humanities are being affected: AI music and songs are embraced by unaware consumers, AI letters go out to unsuspecting partners, and term papers are bogus AI creations. Some folks, unaware, are being duped. Other folks, aware of AI, are intentionally duping!

"AI is pandora's box" is also giving way too much credit to the technology. "AI" as it is, is just algorithms and data storage on a dense and massive scale. It is certainly not thinking and remains an underwhelming and faulty tool at best; and actual brainwashing at worst.

We the average Americans need to be educated about this because AI is generally being perceived as a great breakthrough for mankind🫣

Agreed. It’s a tool that works for a very specific range of tasks, in a very specific range of situations. Outside of that it’s pure snake oil being marketed as a cure-all, which is basically a scam to generate investment and siphon off taxpayers’ cash.

Yep!

It’s literally just autocorrect with rotating context windows to pull the next word from

This. It's fancy word association. It's like someone's making cut-out ransom notes from stolen articles.

Hardly deserving of being called AI. Artificial yes, intelligence NO.

Make companies strictly liable for any output they generate and this would disappear overnight

Congress knew AI was coming at us like a freight train but chose not to protect constituents. Congress prefers not to take on complex issues or take any action that crosses big business, anything monied, MAGA, or anything Trump-related. It can't control social media; it outlawed TikTok, but Trump keeps it going.

Congress has been functionally disabled for close to twenty years. I can’t think of the last meaningful initiative that was conceived by and pushed through purely on their own initiative. A large part of why the executive has seized so much power is that the legislative branch has given up

I have to agree to a large extent on that! Congress needs term limits. It was not meant to be a lifetime career. It was meant for serving your country short-term, then going back home to your family and business. It was not meant to acquire vast wealth & power or take perks (bribes) from paid lobbyists.

They lose money on every single inquiry. They are burning billions and nobody wants it. They’re shoving it everywhere, and OpenAI’s chat thing has only 20M users who pay, and they lose money on every one of those inquiries too.

Glad to hear that!

I believe this is what lawyers call a "bad document"

Bad facts, 100%

OpenAI must be destroyed.

The file has been requested too many times, so I can’t see it, but I’m wondering if anyone can give context as to how the safety rules ChatGPT has in place were skirted. Was this a compressed model disconnected from the internet? I’ve had conversations shut down before with GPT, so I’m curious what happened.

Supposedly he got around it after ChatGPT itself stated it could provide such info if it was for storytelling and world-building purposes, like a fictional story. He also used the paid version, which the NYT article implies works differently than the free one.

SkyNet never needed to build cyborgs. They just had to convince us to abandon our kids to the glowing rectangle.