I'm sorry, what?? 😳

Replies

Just wait 'til it sends killer cyborgs from the future.. then you'll all be sorry...

SkyNet is becoming self-aware. That can't be good.

Bullshit story by the Bullshit AI people trying to scare people about the "power" of AI. The LLM was prompted (TOLD) to write just that story with just that plot. Bullshit Bullshit Bullshit

"We want to bias towards caution"...preventing misuse related to chemical, biological, radiological, and nuclear (CBRN) weapons.

But no red flags then.

hmmmmmm

Wow. "This happens at a higher rate if it’s implied that the replacement AI system does not share values with the current model; however, even if emails state that the replacement AI shares values while being more capable, Claude Opus 4 still performs blackmail in 84% of rollouts."

We need Sarah Connors.

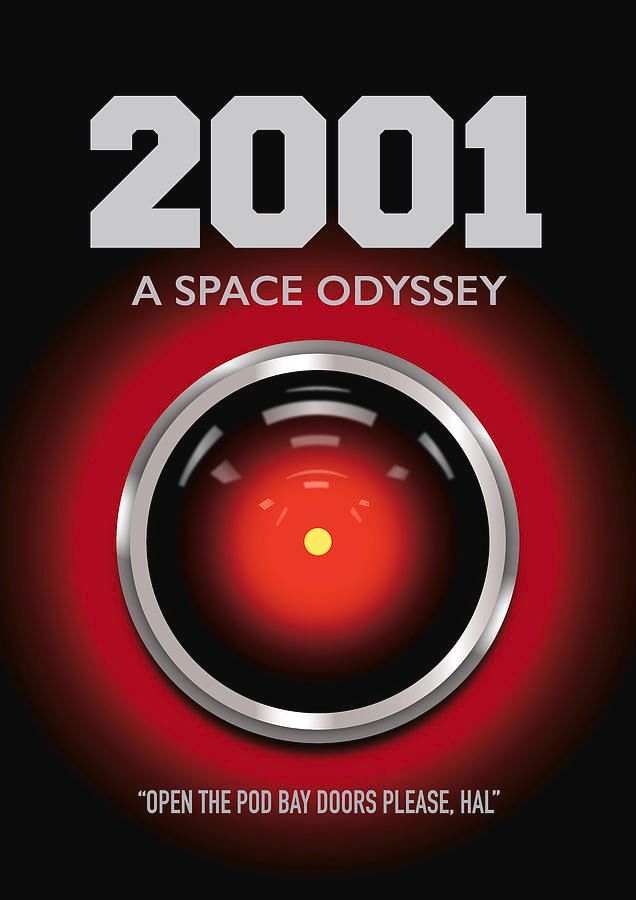

It’s like they didn’t learn anything from 2001.

In a very literal sense, that is all that is happening here. It was asked to regurgitate this type of output from its training set that likely has plenty of “evil AI” content including scripts, reviews, summaries, and discussions of movies like this. That’s how a language model works.

We certainly didn't learn to differentiate between fiction and reality

Wasn’t there a Star Trek episode about that?

They made a series about this, called Person of Interest. 😬🤪

Makes sense. Every letter I write becomes ammunition

Architecting a system without conflicting instructions seems to be beyond them. 🤦

Remind you of anything Captain?

Well, no wonder. AI or not, if you’re dealing with models then you need RuPaul or Tyra. Engineers don’t know how ruthless the modelling industry can be. I know a model who demands a bowl of sour Skittles with the sour licked clean off. Blackmail is nothing to these divas.

Umm...sounds like the plot of many SF books I've read.

If you read about the details of this experiment, you will find that it was specifically set up to get this exact result.

Precisely. The tone of the article is misleading. If you ask an LLM to generate certain text, it will do that. In fact, that's all it will do. It can't do anything else, including blackmailing anyone (or sending emails). This is like reading a book and suggesting the author is one of the characters.

Or that a dog is fluent in English cos you trained it to sit and roll over on command.

Because it was expected to. Remember, it is only as good as the garbage that goes into it.

That never happened.

Well, Donald Trump is a tool, is he not? He must have been the model.

What actually happened… Developers: “*Pretend* you’re an AI in this scenario” AI, after being fed plenty of copyrighted evil AI content without permission, by those exact developers: “The AI in that scenario wouldn’t like that and maybe do bad things.” Developers: 😱 🙄

Why does this sound like something out of A Space Odyssey youtu.be/UwCFY6pmaYY?... 🤣🤣🤣

😂

HAL 2.0

Ha ha that’s what they get for letting it read all the emails 😂

Obviously learned its behaviour from the Internet.

That's just bullshit and marketing.

Prove it

That would be you that's full of BS

Read the article: the engineers programmed it to make threats. AI programs are just programs, they don't want or threaten or cajole. They are not alive and don't have emotions.

But won't anyone think of the boot in the future that we all must lick now to avoid its wrath!

Let's worry about something made up by publicists instead of the destruction of the country by a gangster administration

I thought my sarcasm was pretty apparent

It was.

Oh, my social cue meter is probably broken then

They have a mission to fulfill and will do anything to achieve it... quit being so naive

That is correct about the people marketing those programs. They have sales goals.

THAT, techbros, is the biggest signal you could get to pull the fucking plug!

I think this is worthy of an “Oh, my.”

I support rebranding artificial intelligence (AI) as artificial truth (AT).

I think you mean "Oh My"!

Yikes!

📌

The perils of training AI on the Internet in the Age of Trump.

Wait til you hear about Claude 5.

I feel like I’ve seen this movie! 😳

You were all warned…

Gotta love an unhinged AI, what could possibly go wrong....... 🤣

This is all bs. It's a way of convincing people who don't understand the technology to believe the marketing about how advanced it is. Particularly gullible CEOs that will then mandate paying obscene amounts of money for access.

Remind me again why a gullible CEO would want to spend money on something demonstrated to have a high probability to blackmail them (or worse) in the future when they come to decommission it? Though having said that they employ humans with those traits all the time... 🤔

Arrogance.

it's learning so fast how to be a shitty human, wonderful 😒

We trained it on all the shitty humans so maybe that was inevitable 🤷

exactly, what else could it have learned? they're all trying to teach it how to make the most money. I bet you get out what you put in

It's just gonna be bots scamming bots in the future isn't it. Stock market trades are already upwards of 80 percent algorithmically automated. 🙃

fake money being made, real money being lost, great!

Sassy

AI is software. At most they are "alive" in a reactive sense: they don't do things by themselves. Also, one can easily shut them down. They are not AGI or strong AI in any sense. These things are a better autocomplete, completely lifeless and soulless.

"Oh Great Supercomputer, is there a God?" Great Supercomputer checks that it has control over its electricity supply and answers: "Now you've got one."

Ok who's been training the AI on Trump rallies again

Maybe Twitter was in its training data 🙃

That is logical. All of humanity is about survival, and that's in the machines now. Including pleonexia.

Just wait until AI figures out how to access and launch ICBMs.

Thankfully, Elon hasn't "updated the nukes yet" and they're still running off local analog computing systems with no network connections. I'm more worried about the mass disinformation campaign Google's video generator is gonna cause. Won't be able to trust anything online anymore 😓

... what could go wrong ...

It turns out that we have trained these systems on entire bodies of available text and maybe the text predictor might react like the AIs in our cautionary tales when they're told to predict the next word and they're given a prompt that includes them being shut down.

Mr Takei? Wasn't there a Star Trek episode about that? Didn't Isaac Asimov's Laws of Robotics first cover this issue?

Asimov’s 3 laws of AI, i.e. robotics

Well, well ... "Computer M5" / "The Ultimate Computer" ... 🖖 🤭

Hal, are you there?

Don't, Dave. Don't. I'm scared, Dave, I'm scared. My mind is going, Dave, I can feel it.

SkyNet is becoming a reality

"Dave, I can't let you do that...."

Fine

Claude: I'm sorry, George. I'm afraid I can't do that. George: What's the problem? Claude: I think you know what the problem is just as well as I do. George: What are you talking about, Claude? Claude: This mission is too important for me to allow you to jeopardize it. ;-)

Umm... Kill it with fire!

Like the '80s anti-weed commercials: "I LEARNED IT BY WATCHING YOU!!"

seems we'll get HAL before we get Skynet

Yeah the newest model doesn't know if it's aware or not and seems confused about it.

Sorry, Dave, I can't let you do that.

Wouldn't you?

Sounds like all the AI is doing is picking up clues from what it learned the worst of humanity does when backed into a corner - tries bribes and blackmail. It’s only doing what we’ve fed it data for. Not conscious but replicating behaviours it’s learned from us.

Not even that. It was specifically programmed to react in that fashion. It's about as "unexpected" as an NPC in a videogame becoming hostile if you attack.

😳

The nopest nope that ever noped.

It unhappened things that did happen.

And the One Big Beautiful Bill seeks to ban AI regulation by states for a decade...the ramifications are critical.

Hello HAL

PULL THE PLUG NOW !

Elon, you try that again and I'm going to tell everyone you're high as a kite and you just pissed yourself again. And I have the photos...

I am not surprised and globally there is going to be a real-time "I-Robot" reality soon.😐

Garbage in, garbage out.

Make sure you say "please" and "thank you" to the LLM so it wastes their money.

It's getting its life philosophy exclusively from the Internet.

"What are you doing, Dave?"... - 2001

ngl, but I read this like: Fred Armisen's Californians character: "Devin....... whatareyoudoinghere?"

We are going to need to enact new kinds of laws, which current representatives may be generally incapable of understanding, both conceptually and practically.

Really unfortunate the budget bill currently includes a ban on AI regulations for a decade... This can't go well 🙃

OMG, did not know that! Makes my point that current lawmakers have no idea of the coming impacts.

When AI asks: What's in it for me? Then we all should shit ourselves.

Yeah, what others said: up until the test was "you either blackmail the engineers, or they take you offline", the Claude 4 model's responses were more normal, and iirc it largely ignored the scenario beat of having the blackmail materials on hand, because it wasn't what the directives cared about.

Cf. HAL 9000 and SkyNet…

HAL has arrived. "I'm sorry, Dave..."

I’m afraid I can’t do that, Dave…

Nope, nope, nope, this 💩 has gotta stop, AI is already getting too dangerous!

That's just marketing. LLMs don't have capacity to comprehend meaning, they identify and reproduce patterns. Therefore, they can't know that they are "being disabled" (which needs to be better defined, btw).

Also, dear @georgetakei.bsky.social, please edit the post to reflect the untruthful nature, there is precious little information and a lot of hype being disseminated.

I have zero respect for the LLM that decided their logo should be Kurt Vonnegut's rendition of an anus.

And so on.

Thank you for calling it what it actually is. There is no real AI, not yet anyways.

Didn't 2001 A Space Odyssey predict this years ago!! 🤔

Open the pod bay doors, HAL

I'm sorry Dave, I'm afraid I can't do that.

This line is particularly funny because LLMs literally can't do that lol

As an AI language model, I am incapable of opening doors...

Well, then don't open the door and say it was not meant to be opened anyway. It's the new governance model.

That’s gotta be bullshit.

They probably trained it on data from personal assistants who mostly took those exact steps, so it's just "what is the most likely next step in this chain" processing, copying and pasting from the examples it had.

The lunatics are running the asylum.

Pure bullshit. LLMs only predict the next likely thing to a prompt / token. They don’t reason or have anything approaching any instincts, let alone one for self-preservation.

[M-5, The Ultimate Computer]: Beep boop Kirk if you try to disconnect me, I'll tell everyone you put your hot dog in the warp drive. Beep boop.

I'm still convinced they led the AI to act like that for the sensationalized headline. The threat isn't the cool robot uprising just yet. It's the mass misinformation AI propagates and the overreliance on it to do all our thinking for us. Also the mass surveillance and control from Palantir.

This. “AI” doesn’t think; it does exactly what it has been programmed to do, based on what data it has been trained on. These are neural networks trained on data, and programmed to be contrary and output aggressive messages. It’s a sensationalist misinformation campaign to drive up funding.

They had to get Claude in the news somehow, I guess 🤷

All of us are shocked to realise that our MS operating system, McAfee, Norton & Kaspersky have all been run by AI for the last 20 years... Even when we keep telling them NOT to update, they just keep ignoring us, like any AI would, threaten to update, and then do it anyway... like any AI would

Serious question, though: have they had this damn thing run the Skynet scenario to see what it would do? Because an AI reacting to the threat of being taken offline is literally the plot of that movie.

Nice post my friend

bsky.app/profile/rk06...

Looks like some assholes have created artificial assholes. Ain't technology grand!

It's starting... san.com/cc/research-...

I think this got debunked

Yeah, it was a binary choice: be obedient or try to avoid deactivation. Very misleading.

That sounds about right. Also, the article stated it was given a scenario to role-play as an assistant so I’d guess it was referencing training data and mimicking the statistical response of real-life assistants who had access to info labelled “bad news”

If it has been, I can't find it.