Basically this was the first time I've used an AI to count instances in a document in this way - we're encouraged to use Copilot at work, so that's what I did. It's sold very much as something to use for analysing documents, spreadsheets - integrated with Office etc.

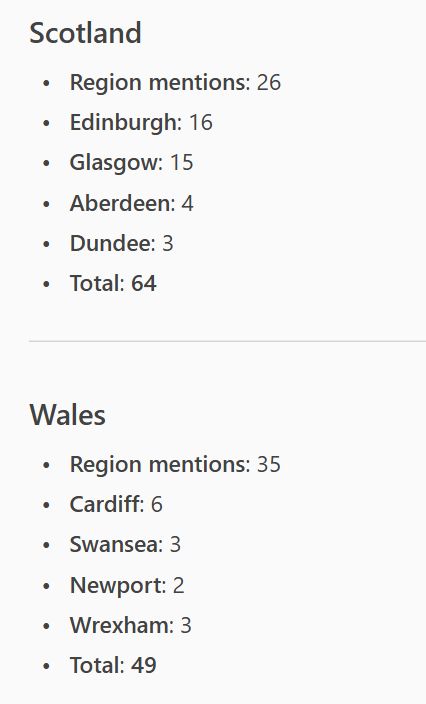

I asked it to identify every reference to a region AND place within that region, and tally them for me. Which it did. Hubristically, I thought I knew better than to blindly trust what it spits out. So I picked some regions, compared what Copilot generated vs the actual document for these.

Perfect match. It was picking up places like Wrexham, Newport which also gave me confidence that Copilot had done a thorough job (unlike me). It did not occur to me that this could be a false impression of completeness - never mind that there would be serious errors elsewhere.

At this point, I was feeling pretty good about it all ("Ach probably good enough for a tweet") - so I didn't do some pretty basic things. For instance, check those outliers. Why not wait an hour to read the Strategy in full before tweeting about it?

So yeah, all very silly. Mea culpa. Thankfully, I doubt very many people saw it but that's not really the point. If it had been for a story, I would've been a lot more thorough (and with others checking), but that's not the point either.

Point is... 1. Don't trust AI to run even the most basic statistical things (yes, yes I knew that already, but then you get told you must use this stuff, and then...). 2. Don't rush to post things to social media, it creates dumb and embarrassing situations like this one.

Still better to be honest about one's shortcomings and use it as a learning opportunity, rather than just hope people forget about the error and move on. Yours, Today's AI schmuck.

Not that I'm warning people not to use AI. It's just good to be (more) aware of what it's good for, and what it's not good for.

Writing an Excel macro or Python script? Great! Grammar, spell-checking? Cool. Exploring how a story idea might link thematically to other fields and disciplines? Useful. Counting? No.

Okay but like if it's not good for something as simple as COUNTING, then... how smart and how good is it as a tool?

And just to stress - for folks saying this shows the decline of journalistic standards: this wasn't used for a Reuters article, it didn't go anywhere near one. It was just something I tweeted hastily.

I appreciate there is some overlap between an employer and their employee's social media feed - and how my conduct reflects on them. But it's also important to draw a distinction that my ramblings here are not Reuters.

AI is pretty good at writing Visual Basic; I had a thing I needed to do in Access that the API didn't seem to support doing with menus (changing a linked table's data source from a file to a table on a SQL server); and AI gave me a working subroutine on the first try. But I did extensively test it!

One way to (maybe?) get a better outcome in your case: ask it to 1/ process the text and add markers to the regions/places, then 2/ write a Python script to collect these markers and do the stats you want. You get a usable doc to check its marking work, and the code is testable (and probably reliable).

Thank you, that's a great idea.
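
The two-step approach suggested above (have the model mark up the text, then count the markers with ordinary code) could look roughly like this. It is only a sketch: the `[[Region|Place]]` marker format, the function name `tally_markers` and the sample text are all made up for illustration, and the marking step itself still needs a human read-through.

```python
import re
from collections import Counter

# Hypothetical marker format (an assumption, not from the thread):
# the model is asked to wrap each reference as [[Region|Place]].
MARKER = re.compile(r"\[\[([^|\]]+)\|([^\]]+)\]\]")


def tally_markers(text):
    """Count marked references per region and per (region, place) pair."""
    region_counts = Counter()
    place_counts = Counter()
    for region, place in MARKER.findall(text):
        region, place = region.strip(), place.strip()
        region_counts[region] += 1
        place_counts[(region, place)] += 1
    return region_counts, place_counts


# Invented sample text standing in for the marked-up document.
sample = (
    "Investment in [[Wales|Wrexham]] and [[Wales|Newport]] will continue. "
    "A new hub opens in [[Yorkshire|Leeds]]; [[Wales|Wrexham]] follows."
)

regions, places = tally_markers(sample)
for region, count in regions.most_common():
    print(region, count)
```

The counting is now deterministic and repeatable, so the only part left to check by eye is whether the markers were placed correctly.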

I would add… Using an LLM to generate text, music or images? Absolutely not…at a minimum, not until we have addressed the rot/exploitation at the heart of current AI models. No more strip mining of our culture & humanity to build commercial products. That shouldn’t be controversial.

💯

Oh god, don't trust AI code (speaking as a programmer). This is one of the biggest things I am afraid of: code that looks correct on the surface and produces valid results for the test cases at hand, but doesn't take edge cases into account in the way that a human programmer can learn to.

I hear you - and I wouldn't want to overstate what I said. I've used it to write some Excel macros and very simple Python scripts - to perform stuff I've done manually for a long time, know well (in terms of whether the output works), and can test. I would seek expert help to do more!

I have gotten myself into such a rat hole with it trying to do something moderately complicated.

Since going over to Windows 11 I cannot see the cursor on Excel spreadsheets.

There are approximately 8,464,265 ways to improve cursor visibility both in Office options and Windows Settings. (AI may have overestimated the true count there, but... there's a lot of them.)

It's bad at grammar and spelling. It *might* produce a decent macro/script, but you have to check. It's *only* potentially useful for things that are much easier to verify than to create/locate. Even then it's a climate-apocalypse plagiarism machine and should not be used unless truly desperate.

I’m not clear exactly what happened or the extent of the wrongness. (Your original post is deleted) Can you say how different the AI analysis was from the actual document content? (For Wales, for example.)

Sorry. Basically the AI response was right for a lot of places, badly wrong for others - eg it massively undercounted references to Yorkshire. And while I should have spotted that, some other similarly-sized regions had genuinely a small number of references, so it wasn't implausible.

I’m still confused as to why LLMs are bad at counting. I’ve run into the same problem, even though it seems like something that they should be good at. Counting is a basic enough computing function that it makes me doubt everything else

Because AIs don't think, they just generate plausible text. If you prompt one with "2+2" and it answers "=4", that is not because it learned to add - it's just because statistically "=4" was the most probable next sequence of characters based on its training. LLMs *do not* reason or think...

I'd like to understand this as well. I asked Google AI how far along my DIL's pregnancy is, and even after spitting out all the correct dates it confidently said 20 weeks and three days. My first, strong reaction was: why did she want to wait so long before letting us know? Then I figured it can't be.

Because counting is not something they do. They don't answer questions but produce answer-like objects by predicting what you want to read. At no point do they engage with the meaning of the question.

LLMs are "bad at counting" because all they do is produce the statistically most likely next thing to come in a sentence. They don't actually count anything.

i feel for you 🫡 never ask them to count or do simple math!

Yes. And god knows how many times I've seen people post stuff like: "I asked this AI if 5 is less than 4 and look what it told me..."

Don't be so blindly accepting of the macro and script. IF you know the languages and the expected outcomes, you can check them. But you still need to check them - imitative AI makes weird, hard-to-find mistakes even in coding.

I hear you - and I wouldn't want to overstate what I said. I've used it to write some Excel macros and very simple Python scripts - to perform stuff I've done manually for a long time, know well (in terms of whether the output works), and can test. I would seek expert help to do more!

You should be

And that's the problem with the term AI. There is useful AI - supervised learning for medical imaging, or AlphaFold. Then there are LLMs, which are basically bullshit generators. And we have enough human ones of those already eprints.gla.ac.uk/327588/1/327...

Admitting a mistake, on the internet? What the hell man!

Refreshing perspective. Genuinely, thank you.

Hey, thanks for the introspection and willingness to go "my bad", and for not doubling down. I appreciate the examination of what happened.

Yeah, it's innumerate. You can have it write a Python script to do things like count things in a document, but if you ask it to do anything requiring understanding numbers, it'll fail.

Andy, your retraction and explanation do you great credit, but I'm left with some questions. You say you're "encouraged to use Copilot at work": Do you think that the policy should be questioned? Is it allowed to be questioned? Is this damaging work product? Are others having the same experiences?

Whether he is able to challenge or ignore it I have no idea but it's definitely a horrible policy that's damaging a lot of businesses.

There’s also the question of the environmental damage AI does.

As someone who works with AI on a daily basis, I would say don't trust AI to get anything right. You have to read every word it spits out and know the subject matter inside out, so you can catch all the errors.

Bummer! Would love to see the model and prompt you used if you’re up for screenshotting.

Ok, so you inspired me to do it the old fashioned Ctrl-F way…

I'm getting encouraged to use Gemini to write my project documents and I... don't know if it's actually saving me any time

Is it easier to write them yourself or meticulously check the answer-like object it outputs? That's the test. Even then it's a climate-apocalypse plagiarism machine that should be opposed at every turn.

I also think there's meaning in using specific wording, and letting an LLM do it will create implications you might not want.

Whether you can make your bosses understand that is another question of course.

Also worth mentioning that you'll slowly lose the skills to do the job yourself and be unable to explain the contents of the documents if someone queries them.

I sympathise! We had similar issues when we tried to analyse some qualitative data that we'd already analysed in the traditional way. Superficially Plausible Outputs from a Black Box: Problematising GenAI Tools for Analysing Qualitative SoTL Data. TLI, 13, 1–9. doi.org/10.20343/tea...

Basically only use for applications where you can check easily or where truth isn't important (not a glib statement, in education we might want to generate case studies and scenarios which don't use real situations).

Sorry, Reuters encourages you to use Copilot at work?

I was trying some things out the other day and had it summarize a PowerPoint I had worked on. The results LOOKED very insightful and convincing, but when you actually compared them to the doc it was a mess. Pages were jumbled, numbers were way off and inferences were just wrong.

And already you look extremely silly - but at least you can blame your boss for most of it. The only mitigating factor is that you hadn't committed the particular idiocy of thinking an LLM could do something useful before.

You are told to use Copilot because they are paying for it, NOT because it is good, helpful or useful. Honestly worrying that journalists are blindly using AI. If you need to double check your work every time, IT IS A WASTE OF TIME!

I don't disagree with a lot of that, but I think it depends what you use it for. For instance, yes this example was poor (both the AI and my use of it), but equally there's stuff I've done with AI that simply would not have been possible for me to do before (or at least, extremely difficult)

eg. writing code to create new tools to make handling economic data a less manual process. Much of it has been extremely beneficial for my own journalism. I don't think it's very black and white

If you can't write the code yourself then you probably can't check it's actually doing what you think it is. This is *extremely* risky. Only where verification is much easier than creation should using the climate-apocalypse plagiarism machines ever be contemplated

Using traditional coding (CSV of place details + Python to search), this is still a very human job as it is so context dependent. One can be pretty sure when it counts Sector 33 times that it is not Sector near Axminster in Somerset - nor is Newport in any of the 3 mentions the one near Borgue in Scotland!

Excellent points

As per my quotepost: I think this is a good argument for shared code as part of industrial strategy, rather than thinking AI will do this accurately. Think something like sophisticated autocorrect, but the dictionary is instead a gazetteer of place data, and the aim is a very fast human yes/no.
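
A rough sketch of that "CSV of place details + Python to search" idea, with the human yes/no step kept in: the script only proposes candidate matches with some surrounding context, and a person confirms or rejects each one. The CSV columns, the tiny gazetteer, the sample text and the function names here are assumptions invented for illustration, not real place data or anyone's actual tool.

```python
import csv
import io
import re

# Hypothetical gazetteer; a real one would be loaded from a shared CSV file.
# The same place name may appear under more than one region.
GAZETTEER_CSV = """place,region
Wrexham,Wales
Newport,Wales
Newport,Isle of Wight
Leeds,Yorkshire
"""


def load_gazetteer(csv_text):
    """Map each place name to the list of regions it could belong to."""
    gazetteer = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        gazetteer.setdefault(row["place"], []).append(row["region"])
    return gazetteer


def find_candidates(text, gazetteer, context=30):
    """Return (offset, place, possible_regions, snippet) for each whole-word match."""
    hits = []
    for place, regions in gazetteer.items():
        pattern = re.compile(r"\b" + re.escape(place) + r"\b")
        for match in pattern.finditer(text):
            start = max(0, match.start() - context)
            end = min(len(text), match.end() + context)
            hits.append((match.start(), place, regions, text[start:end]))
    # Order by position in the document so the reviewer reads top to bottom.
    return sorted(hits, key=lambda hit: hit[0])


# Invented sample text.
sample_text = (
    "The sector grew in Leeds. Newport and Wrexham saw new sites, "
    "and Newport expanded again."
)

candidates = find_candidates(sample_text, load_gazetteer(GAZETTEER_CSV))
for offset, place, regions, snippet in candidates:
    # A human answers yes/no here; the script does not decide which Newport it is.
    print(f"{offset:>4}  {place}  {regions}  ...{snippet}...")
```

Matching is case-sensitive and on whole words, which filters out a lowercase "sector", but a capitalised word at the start of a sentence would still come through as a candidate, which is why the review step stays.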

AI is a tool like any other; it's basically a very good regex/pattern engine, so the farther you get from that the worse it is. Expecting complex math, or complex logic? Yeah, no...

I tried Copilot to help me code and it introduced a subtle bug that took ages to find and fix. This stuff is a dancing bear (“It’s not that it dances well, it’s that it dances at all”). It’s only useful for things that don’t matter or are trivial to check. Not exactly the new industrial revolution.

Excellent analogy!