LaurieWired

@lauriewired.bsky.social

researcher @google; serial complexity unpacker; writing http://hypertextgarden.com ex @ msft & aerospace

created November 20, 2024

2,726 followers 1 following 642 posts

LaurieWired (@lauriewired.bsky.social) reply parent

The 90s had a level of experimentation in computing that is unmatched to this day. SGI, BeOS, Transmeta, Symbian…there are so many that were ahead of their time. Take a look at the BeFS book, it’s fun to see what did (and didn’t!) change: www.nobius.org/dbg/practica...

LaurieWired (@lauriewired.bsky.social) reply parent

In some ways, BeFS is still the more “modern” filesystem! BeFS embedded search into the FS itself; Apple keeps the indexing+search layer separate. Both love B-trees, per-file metadata, and 64-bit structures. It's really not *that* different.

LaurieWired (@lauriewired.bsky.social) reply parent

Turns out there’s a good reason for that. “Practical File System Design” was written by Dominic Giampaolo in 1999, for BeOS. Giampaolo joined Apple in 2002, where he became lead architect for…APFS. It was released in 2016; it's funny to see the same ideas 17 years later.

LaurieWired (@lauriewired.bsky.social)

I’ve been on a filesystem kick, and it’s interesting to see the DNA of older ideas pop up in modern designs. BeFS was crazy in the 90s; the whole architecture was basically a searchable database. Skimming through their book…it sounds a lot like current Apple FS design.

LaurieWired (@lauriewired.bsky.social) reply parent

It just feels like an awkward spot. If you want lots of memory+power, save up a bit more for the RTX Pro 6000. If you want to be hacky + fast, hook up a bunch of used 3090s/4090s together. If you want to be cool...get a bunch of TT Blackholes or something idk.

LaurieWired (@lauriewired.bsky.social)

Man, the DGX Spark is looking like a miss: twice the price of the 5090, only ~30% the FP4 TFLOPs. Really bad memory bandwidth (273 GB/s, way worse than a MacBook Pro!). I also have low confidence in the software stack (most Jetsons are still on Ubuntu 20.04!).

LaurieWired (@lauriewired.bsky.social) reply parent

If you're interested in reverse engineering iOS applications yourself, check out my open source tool, Malimite! github.com/LaurieWired/...

LaurieWired (@lauriewired.bsky.social) reply parent

Full Video: www.youtube.com/watch?v=tnPA...

LaurieWired (@lauriewired.bsky.social)

Gen Z doesn't understand filesystems. It's not their fault. Apple's early abstraction of mobile data storage has caused...confusion, to say the least. But what does the *real* iOS filesystem look like? Speaking as a researcher myself, it's kind of insanely complicated:

LaurieWired (@lauriewired.bsky.social) reply parent

In 2025, it's still a problem. YAML 1.2 technically fixed the issue, limiting booleans to explicitly true and false. Unfortunately, a *ton* of libs and tools still default to parsing 1.1 style. So now, if a programmer says "I hate the Norway Problem"...well, you know what they mean.

LaurieWired (@lauriewired.bsky.social) reply parent

The solution is gross no matter how you look at it. You could, of course, add quotes so that "NO" is parsed as a string, but devs don't like special edge-cases. Most parsers chose to explicitly disregard the spec! Now you have a fractured ecosystem of "real" YAML and "fixed" YAML.

LaurieWired (@lauriewired.bsky.social)

Norway sucks for programmers. It's not what you think. The country code completely breaks the official YAML 1.1 spec. NO evaluates into Boolean false...regardless of case. This can cause cryptic, hard to debug errors; especially in YAML-heavy environments like Kubernetes.
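
To make the Norway Problem concrete, here is a minimal sketch that mimics how an unquoted scalar gets resolved under the YAML 1.1 boolean list versus the YAML 1.2 core schema. It does not use a real YAML library; `resolve_scalar` is a hypothetical helper, and real parsers differ in which parts of the 1.1 list they honor.

```python
import re

# Unquoted boolean spellings listed by the YAML 1.1 bool type,
# and the narrower set kept by the YAML 1.2 core schema.
YAML_1_1_BOOL = re.compile(
    r"^(?:y|Y|yes|Yes|YES|n|N|no|No|NO"
    r"|true|True|TRUE|false|False|FALSE"
    r"|on|On|ON|off|Off|OFF)$"
)
YAML_1_2_BOOL = re.compile(r"^(?:true|True|TRUE|false|False|FALSE)$")

TRUTHY = {"y", "yes", "true", "on"}


def resolve_scalar(text, spec="1.1"):
    """Hypothetical helper: resolve an unquoted scalar to bool or str."""
    pattern = YAML_1_1_BOOL if spec == "1.1" else YAML_1_2_BOOL
    if pattern.match(text):
        return text.lower() in TRUTHY
    return text  # everything else stays a string in this sketch


countries = ["SE", "DK", "NO"]
as_1_1 = [resolve_scalar(code, "1.1") for code in countries]
as_1_2 = [resolve_scalar(code, "1.2") for code in countries]
print(as_1_1)  # ['SE', 'DK', False]
print(as_1_2)  # ['SE', 'DK', 'NO']
```

Quoting the value in the YAML source sidesteps the resolution step entirely, which is why it works under either version of the spec.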

LaurieWired (@lauriewired.bsky.social) reply parent

Of course, individual manufacturers often add their own special protocols riding on top of the Bluetooth spec (Apple, Google, Snapdragon…etc) that mitigate this problem. That said, mixing brands can drop you into the compatibility “work at all costs” modes, which sound significantly worse.

LaurieWired (@lauriewired.bsky.social) reply parent

I’m not trying to pick on particular operating systems; the “headset fallback” issue happens with many devices. But in any case, it is extremely jarring, and support for newer headset codecs, like the Super-Wideband mode in the 1.9 spec, is limited.

LaurieWired (@lauriewired.bsky.social) reply parent

Unidirectional (music) audio is a different story. Codec support, plus newer modes resulted in a big jump from the 2010s to now. LC3 is basically transparent, aptX lossless is *literally* transparent (bitperfect under the right conditions). Not really any compromise.

LaurieWired (@lauriewired.bsky.social) reply parent

If you’ve ever used a Bluetooth headset on Windows, you might notice a *massive* drop in audio quality as soon as you enable your mic. It’s a fallback to the old HFP mode, sometimes lovingly referred to as “potato mode”. Worst case, the stream drops to 16kHz *mono* audio!

LaurieWired (@lauriewired.bsky.social)

How much better is modern bluetooth audio compared to the past? The answer is a lot…and also not at all. It all depends on the mode. One-way, with modern codecs (music, etc) is quite good. Headset mode (bidirectional, think zoom calls) is still awful:

LaurieWired (@lauriewired.bsky.social) reply parent

I still think there is some hope in the future for (reasonably priced) LFP-style UPSs in a home setting. After all, lithium cells are lighter, much more energy dense, handle higher temps, and recharge really fast! But currently, the cost/benefit ratio just isn’t there.

LaurieWired (@lauriewired.bsky.social) reply parent

You’ll never get 100% capacity out of a lithium UPS. Most manufacturers *have* to limit the cells to hover near ~70% during standby. Some of the more advanced systems attempt to “predict” future power outages…briefly boosting capacity to 100% for short periods before an outage is expected.

LaurieWired (@lauriewired.bsky.social) reply parent

Lithium-based cells *hate* sitting at 100%. The thermal management, per-cell protection, and balancing electronics are significantly more complex than lead-acid’s simple float charge. Lithium UPSs do exist, but they are pricey and make some huge compromises.

LaurieWired (@lauriewired.bsky.social) reply parent

Contrary to what you might think, lithium batteries are not a “straight upgrade”. Lead-acid handles being “floated” at near 100% capacity for years. Considering UPSs spend 99.9% of their life sitting at full charge…waiting for an outage, it's an ideal use-case.

LaurieWired (@lauriewired.bsky.social)

Why do most uninterruptible power supplies still use old, lead-acid battery tech? Nearly every battery in your house (phone, watch, even electric car) is lithium based...except UPSs. It all has to do with battery chemistry. Lead-Acid has some unique advantages:

LaurieWired (@lauriewired.bsky.social) reply parent

There’s much more to this story, but it’s fascinating that such an unpopular idea quickly turned into a multi-billion dollar company! OG DISCO Paper: lass.cs.umass.edu/~shenoy/cour... Comparison of Software + Hardware x86 virtualization techniques: pdos.csail.mit.edu/6.828/2018/r...

LaurieWired (@lauriewired.bsky.social) reply parent

But that’s exactly what VMware did. A small team, mostly from the DISCO paper, secretly iterated on a binary translation engine. Problematic x86 instructions were rewritten on the fly; shadow page tables controlled memory access, and ring compression handled privilege.

LaurieWired (@lauriewired.bsky.social) reply parent

The now-famous “DISCO” paper targeted MIPS, which was easy. You could cleanly trap privileged instructions. x86 was a nightmare by comparison. Most looked at DISCO, thought it was a neat toy, but thought “lol good luck getting this to work on x86”.

LaurieWired (@lauriewired.bsky.social) reply parent

Common wisdom in the 90s was to “just buy more servers”. Unlike mainframes, x86 really wasn’t designed for virtualization. Plus, there wasn’t really a market need. Hardware kept getting cheaper, so why virtualize?

LaurieWired (@lauriewired.bsky.social)

Virtualization almost didn’t happen. The entire concept of emulating multiple operating systems on commodity hardware, particularly x86, wasn’t taken seriously. Three students took a 1970s idea, published an “impractical” paper, and created the giant that is VMware:

LaurieWired (@lauriewired.bsky.social) reply parent

I wish modern computer architecture classes would at least acknowledge the existence of alternative ISAs like Loongson, Elbrus, and others. It’s like looking into an alternate universe. Take a look at the full ISA here, it’s well written. loongson.github.io/LoongArch-Do...

LaurieWired (@lauriewired.bsky.social) reply parent

I just think it’s fascinating that thousands of schoolchildren in China use a processor architecture that gets almost no global attention! Of course, it’s intended to reduce their reliance on western IP, but it’s still genuinely interesting technically.

LaurieWired (@lauriewired.bsky.social) reply parent

LoongArch is a *hefty* ISA; about ~2,000 instructions. To put it in perspective, base RISC-V is like 50. That said, it’s pretty clean to read. All instructions are 32 bits, and there are only 9 possible formats. Certainly easier to decipher than modern x86.

LaurieWired (@lauriewired.bsky.social) reply parent

Of course, Loongson (the company) realizes that most software is compiled for x86 and ARM. Thus, they decided to add some hefty translation layers (LBT) built into the hardware. LBT gives you four extra scratch registers, x86+ARM eflags, and an x87(!) stack pointer.

LaurieWired (@lauriewired.bsky.social)

The West has a blindspot when it comes to alternative CPU designs. We’re so entrenched in the usual x86, ARM, RISC-V world, that most people have no idea what’s happening over in China. LoongArch is a fully independent ISA that’s sorta MIPS…sorta RISC-V…and sorta x87!

LaurieWired (@lauriewired.bsky.social) reply parent

It goes without saying that I’m not a fan. Personally, I think there’s a lot of wow factor to be had with a classy orange, pink, or purple indicator, but examples are few and far between. What do you think? Do you love or hate the blue LED indicator trend?

LaurieWired (@lauriewired.bsky.social) reply parent

The early 2000s were, of course, *obsessed* with the color. Even when the cool factor wore off, risk aversion, mature supply chains, and the overall herd mentality made the trend sticky. After all, you don’t want to be the one product that doesn’t stand out in the dark…do you?

LaurieWired (@lauriewired.bsky.social) reply parent

Even worse (or better, depending on your view), the eye’s optics focus blue slightly in front of the retina. This causes longitudinal chromatic aberration; a halo or “glare” effect. Your product isn't just blue...it creates optical artifacts. You can't *not* notice it.

LaurieWired (@lauriewired.bsky.social) reply parent

Blue LEDs weren’t practical until the 90s; the inventors later won the Nobel Prize. As costs dropped, blue became a cheap way to differentiate from “boring” green indicators. Your futuristic product would *pop* at night; much more exciting than your outdated competitors.

LaurieWired (@lauriewired.bsky.social) reply parent

To understand the insane popularity of blue indicators, it’s helpful to look at the ISO graphical standards. Red is universally recognized as danger, green as normal/ok, and amber as caution. That doesn’t leave many other colors to choose from.

LaurieWired (@lauriewired.bsky.social)

Why are all electronic indicator lights blue? The Purkinje effect causes human vision to get more sensitive to blue hues at low illumination. Blue LEDs give roughly 20x the luminous intensity of red or green indicators. It's also really, really annoying at night:

LaurieWired (@lauriewired.bsky.social) reply parent

Correction: the full error message is “lp%d on fire”, where lp%d refers to the printer itself. My brain is tired, cut me some slack.

LaurieWired (@lauriewired.bsky.social) reply parent

The prototype UNIX driver reported every jam as “on fire” to motivate the technician to take an immediate look. lp0 still exists to this day in the Linux source code! Go and search the git tree for “on fire”, you’ll find it!

LaurieWired (@lauriewired.bsky.social) reply parent

In the 80s, Xerox created the first prototype laser printer. Apparently learning nothing from lessons of the past, paper had to pass directly over a glowing wire. If a jam occurred *anywhere* in the system, the sheet in the fuser would immediately catch fire.

LaurieWired (@lauriewired.bsky.social) reply parent

As tech later advanced to drum machines, the fire “problem” didn’t go away. High speed rotary drums could cause enough friction during a jam to self-combust. Even minor hangups needed immediate intervention.

LaurieWired (@lauriewired.bsky.social) reply parent

State-of-the-art at the time, the printer was modified with external fusing ovens to hit a whopping…1 page per second! In the event of a stall, fresh paper would continuously shoot into the oven, causing aggressive combustion.

LaurieWired (@lauriewired.bsky.social)

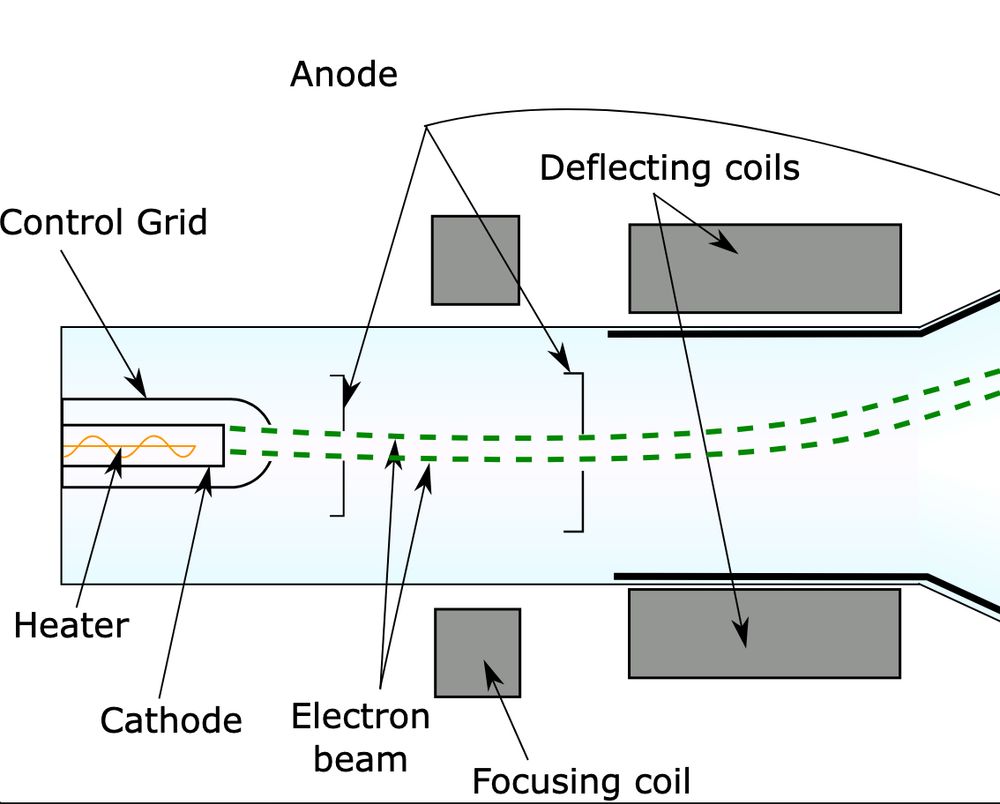

lp0 is a Linux error code that means “printer on fire.” It’s not a joke. In the 50s, computerized printing was an experimental field. At LLNL (yes, the nuclear testing site), cathode ray tubes created a xerographic printer. ...it would occasionally catch fire.

LaurieWired (@lauriewired.bsky.social) reply parent

I know it’s a rant, but I can’t imagine a future where 30 years from now we still consider CPUs with complex PCI links the “heart” of a computing system. What do you think?

LaurieWired (@lauriewired.bsky.social) reply parent

GPUs and accelerator cards are getting general purpose enough that the PCI Express bus is an unnecessary headache. As kind of a crazy example, look at the Tenstorrent Blackhole. You can run Linux *on* the card itself using the built in RISC-V cores, which means you have: Linux…on Linux.

LaurieWired (@lauriewired.bsky.social) reply parent

Today's GPUs draw significantly more power than CPUs. Why is the highest power draw, highest bandwidth component the “peripheral”? It forces us to route massive power delivery outward, rather than intelligently building from the thermal center.

LaurieWired (@lauriewired.bsky.social) reply parent

It’s not a new idea. In the Pentium era, Intel created Single Edge Contact Cartridges (SECC). Instead of being socketed, the CPU was basically slapped on a glorified RAM stick. It was later abandoned due to cost and cooling issues, but in the modern era it's starting to make sense.

LaurieWired (@lauriewired.bsky.social)

PCI link bus designs are incredibly complex. Standard FR-4 PCB material is basically at its limit. Every year it's harder to keep up with the new standards. At what point do we flip the architecture on its head... GPU as the motherboard, CPU as the peripheral?

LaurieWired (@lauriewired.bsky.social) reply parent

If you want to *actually* help, go get someone else inspired. Post that neat programming fact. Make that blogpost you think “no one will read”. Go make the content that would have excited a younger you! Thank you all for the continued support. Until next time, LaurieWired out.

LaurieWired (@lauriewired.bsky.social) reply parent

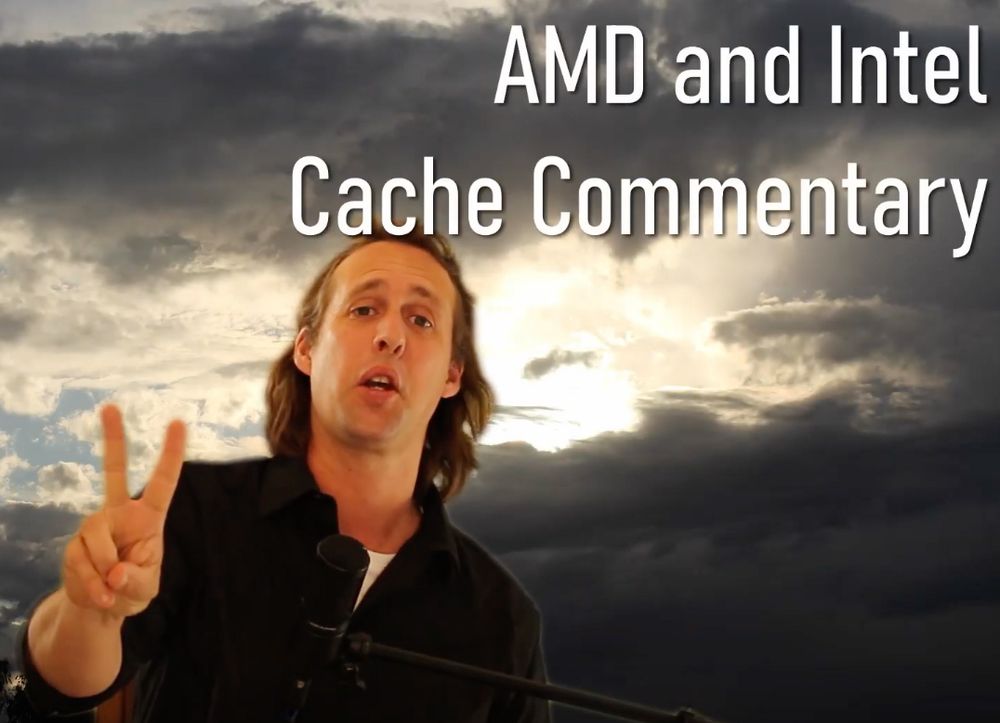

Myself, in a small bedroom with a camera on nights and weekends. No, it’s not my dayjob! I’ve never taken a sponsor, brand deals, or made any sort of paid course. I just wanted to share my excitement about this field; it’s neat!

LaurieWired (@lauriewired.bsky.social)

Two years ago, I had just 3,000 YouTube subscribers, creating niche reverse engineering + assembly programming content. I thought most people wouldn't be interested. Last night, I just passed 200,000 subscribers. People assume it’s a large production. It’s actually just me!

LaurieWired (@lauriewired.bsky.social) reply parent

If you want to read a bunch of implementations in your favorite language, check out this comprehensive list. But I warn you, many miss the original spirit ;) rosettacode.org/wiki/Man_or_...

LaurieWired (@lauriewired.bsky.social) reply parent

Rust can’t do it either. Sorta. A “man” compiler, by the original definition, handles messy self-referential code without complaining. Advanced solutions using enums + trait objects exist…but again, you can argue it misses the point. Python + JavaScript (yikes) pass with flying colors.

LaurieWired (@lauriewired.bsky.social) reply parent

Early C fails the test. The lack of nested functions, along with the simple stack-based memory model, makes closures impossible. You can *sorta* do the test in modern C, but it’s a total hack w/ function pointers and structs. Kinda misses the whole point.

LaurieWired (@lauriewired.bsky.social) reply parent

It’s a fun little program that creates an explosion of self-referential calls in just a few lines of code. At a recursion depth of 20, the call stack already hits the millions! Keep in mind, this test was designed in the 1960s; yet even modern systems struggle.
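
For reference, a minimal Python sketch of the man or boy test, following the shape of the original ALGOL 60 program. The helper name `const` and the raised recursion limit are choices made for this sketch; k = 10 is the classic test input.

```python
import sys

# The nested calls get deep even for k = 10, so raise Python's default limit.
sys.setrecursionlimit(10_000)


def a(k, x1, x2, x3, x4, x5):
    def b():
        # b mutates the k of the enclosing call to a, then re-enters a
        # while passing *itself* along as the new x1.
        nonlocal k
        k -= 1
        return a(k, b, x1, x2, x3, x4)

    return x4() + x5() if k <= 0 else b()


def const(value):
    """Wrap a number in a zero-argument callable (stands in for call-by-name)."""
    return lambda: value


result = a(10, const(1), const(-1), const(-1), const(1), const(0))
print(result)  # -67
```

The closure `b` keeps a live reference to its own activation's `k`, which is exactly the part that a purely stack-based language without nested functions can't express directly.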

LaurieWired (@lauriewired.bsky.social)

Is your compiler a boy or a MAN? Created by Donald Knuth, it’s a test to check if recursion is implemented properly. Originally written in ALGOL 60, a precursor to C, it can be adapted to nearly any language. It really stresses the stack and heap, pushing insane call depths:

LaurieWired (@lauriewired.bsky.social) reply parent

Full Video: www.youtube.com/watch?v=y-NO...

LaurieWired (@lauriewired.bsky.social)

Fading out audio is one of the most CPU-intensive tasks you can possibly do! When numbers get really small, the number of CPU operations can explode 100-fold. In this LaurieWired video, we'll explore the IEEE 754 (floating point) standard, the fight between Intel and DEC, and more!
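
A small sketch of where a fade-out runs into trouble, assuming the "really small" numbers here are IEEE 754 subnormals (denormals). Python can't show the slowdown itself; this only shows when a decaying sample crosses into the subnormal range. The helper names and the 0.5 gain are made up for illustration, and `math.ulp` needs Python 3.9+.

```python
import math
import sys

SMALLEST_NORMAL = sys.float_info.min  # about 2.2e-308
SMALLEST_SUBNORMAL = math.ulp(0.0)    # 5e-324, the last stop before zero


def is_subnormal(x):
    """True if x is nonzero but below the smallest normal double."""
    return 0.0 < abs(x) < SMALLEST_NORMAL


def fade_until_subnormal(gain=0.5):
    """Repeatedly scale a full-volume sample; count steps until it goes subnormal."""
    sample = 1.0
    steps = 0
    while sample != 0.0 and not is_subnormal(sample):
        sample *= gain
        steps += 1
    return steps, sample


def flush_to_zero(x):
    """Common workaround: snap tiny values to exactly 0.0."""
    return 0.0 if is_subnormal(x) else x


steps, sample = fade_until_subnormal()
print(steps)                  # 1023
print(is_subnormal(sample))   # True
print(flush_to_zero(sample))  # 0.0
```

Real audio code typically handles this in hardware (flush-to-zero / denormals-are-zero modes) or by adding a tiny offset, rather than checking every sample like this.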

LaurieWired (@lauriewired.bsky.social)

It was great getting to see so many of you at DEFCON!

LaurieWired (@lauriewired.bsky.social) reply parent

That said, I still find alternate topologies super neat! I’m still hoping for a small lab to do more experimenting with it. You can read the original research by HP Labs here: arxiv.org/abs/1110.1687

LaurieWired (@lauriewired.bsky.social) reply parent

As cool as it is, Jellyfish never got widely adopted compared to leaf-spine. Irregular port counts, random really long cables, and difficult troubleshooting make it a tough choice. Vendors *assume* leaf-spine so strongly that the hardware itself becomes a non-starter.

LaurieWired (@lauriewired.bsky.social) reply parent

Want to add one more server rack with Jellyfish? No problem. Want to add one more rack with fat-tree? Oof. You’re gonna need to make some compromises. Topologies that enable “Pay as you grow” build outs are a fascinating mathematical puzzle.
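
A rough sketch of why adding a rack is cheap in a random topology. It assumes the expansion idea is "pick a random existing link, cut it, and splice the new switch into the middle"; the function names, the ring starting point, and the port counts are all invented for this example, not taken from the paper.

```python
import random


def link(a, b):
    """An undirected cable between two switches."""
    return frozenset((a, b))


def add_switch(links, new_switch, ports, rng):
    """Splice new_switch into random existing links, two ports per splice."""
    links = set(links)
    for _ in range(ports // 2):
        # Only cut links whose endpoints aren't already wired to the new switch.
        candidates = [
            edge for edge in sorted(links, key=sorted)
            if new_switch not in edge
            and all(link(new_switch, end) not in links for end in edge)
        ]
        if not candidates:
            break
        old = rng.choice(candidates)
        u, v = sorted(old)
        links.remove(old)
        links.add(link(new_switch, u))
        links.add(link(new_switch, v))
    return links


def degree(links, switch):
    """Number of cables plugged into a switch."""
    return sum(1 for edge in links if switch in edge)


# Start from six switches in a ring (two network ports each), then add a seventh.
ring = {link(i, (i + 1) % 6) for i in range(6)}
grown = add_switch(ring, new_switch=6, ports=4, rng=random.Random(0))

print([degree(grown, s) for s in range(7)])  # [2, 2, 2, 2, 2, 2, 4]
```

Every existing switch keeps the same number of cables; only a couple of them get re-plugged. That is the "pay as you grow" property: no layer of the network has to be rebuilt to fit the newcomer.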

LaurieWired (@lauriewired.bsky.social) reply parent

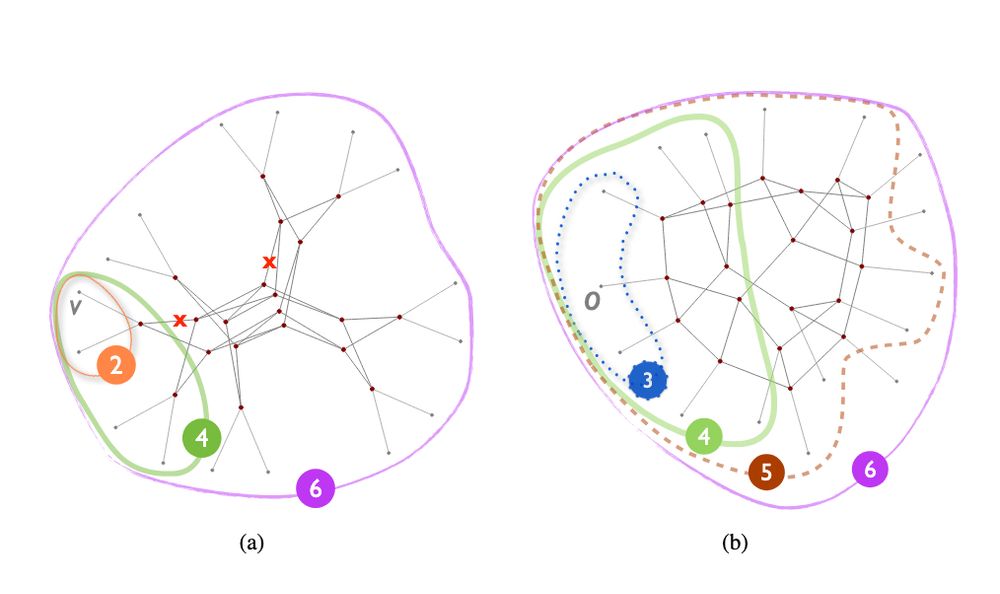

Jellyfish is a weird, sloppy topology. First proposed in 2011, the layout is *so* abstract that many mis-wirings don’t even require fixing! The benefit? Incremental expansion doesn’t hurt you; the design is nearly identical regardless of scale.

LaurieWired (@lauriewired.bsky.social)

Scale is hard. Incremental Expansion is even harder. Designing a topology *flexible* enough for incremental growth is an insanely difficult computer science problem. Well defined structures often hinder expansion. Here's why (controlled) randomness can help:

LaurieWired (@lauriewired.bsky.social) reply parent

And if you want to see ARM/RISC-V content, well, my stuff is here ;) www.youtube.com/@lauriewired

LaurieWired (@lauriewired.bsky.social) reply parent

I will fully admit, I created my own ARM and RISC-V ISA tutorial series with the mantra of: “what if Creel taught ARM?” Anyway, for X86/64, he’s the goat: www.youtube.com/@WhatsACreel/

LaurieWired (@lauriewired.bsky.social) reply parent

Before you say "just read the spec", or link to some half-baked blog tutorial in markdown, seriously give him a look. Creel's got 200+ of the most entertaining lessons I've ever seen. It really teaches *why* ASM code can be fun, and not just in a theoretical sense.

LaurieWired (@lauriewired.bsky.social)

If you want to *actually* learn X64 assembly (and not in a meme-way) watch @whatsacreel. A hilarious Australian who goes to the most esoteric depths of x64 ASM, often filming while on a beach. I wouldn’t be in this career if it wasn’t for him.

LaurieWired (@lauriewired.bsky.social) reply parent

The best documentation I’ve seen on these states is from the Asahi project, check out their Guarded execution notes here: asahilinux.org/docs/hw/cpu/...

LaurieWired (@lauriewired.bsky.social) reply parent

GL2 is a sort of guarded helper for hypervisor work. It’s also the reason iPhones and iPads don’t have great virtual machine support. EL2 is disabled on iOS, hence no GL2 by proxy. Sounds somewhat convoluted, but the architecture is actually very interesting when you drill down into it!

LaurieWired (@lauriewired.bsky.social) reply parent

For Apple’s M-chips, two substates were added to EL1+EL2, called GL1 and GL2. During their reverse engineering efforts, Asahi Linux confirmed that the EL3 state doesn’t exist on the M1. GL1 is a sandbox that the XNU kernel “jumps” into every time it edits a page table.

LaurieWired (@lauriewired.bsky.social) reply parent

Negative rings are mostly due to X86 being really old; as the ISA got more complex, we got "above 0" states. Armv8 moves in a positive direction; higher numbers have more privilege. From EL0 (user space) to EL3 (State-Switching). Apple does something extra funky:

LaurieWired (@lauriewired.bsky.social)

Ring 0 is a highly-privileged state on CPUs. Negative Ring Levels have even *higher* privilege. You just haven’t heard of them. For X86, Ring -1 is Hardware Virtualization, Ring -2 is System Management Mode, Ring -3 is Intel ME / AMD PSP. Arm gets even weirder:

LaurieWired (@lauriewired.bsky.social)

packing for defcon, is there any software I missed? I've got: Adobe Flash, IE6, Windows XP (SP2), MS Office (VBA Macros ON), Log4j (2.14)

LaurieWired (@lauriewired.bsky.social) reply parent

Berners-Lee later said that he regrets the domain name order, wishing it followed the filesystem structure. Still the opposite of postal-style, but at least we wouldn’t have two hierarchies! To read more, check out this interview with the British Computer Society: www.bcs.org/articles-opi...

LaurieWired (@lauriewired.bsky.social) reply parent

Then URLs came along, and messed everything up. URLs look *weird* because they have two hierarchies, plus a scheme: Outward through the network (Most->Least Specific): subdomain . stanford . edu Inward through a filesystem (Least->Most Specific): /path/inside/server Scheme: https://
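
To see the two hierarchies side by side, here is a short sketch using Python's standard `urllib.parse`. The URL is assembled from the example pieces above; `filesystem_style` is just an illustrative name for what a single least-to-most-specific ordering would look like.

```python
from urllib.parse import urlsplit

url = "https://subdomain.stanford.edu/path/inside/server"
parts = urlsplit(url)

# Hostname labels read most -> least specific (left to right).
host_labels = parts.hostname.split(".")

# Path segments read least -> most specific (left to right).
path_segments = [seg for seg in parts.path.split("/") if seg]

# Flip the hostname and the whole thing reads in one direction.
filesystem_style = list(reversed(host_labels)) + path_segments

print(parts.scheme)      # https
print(host_labels)       # ['subdomain', 'stanford', 'edu']
print(path_segments)     # ['path', 'inside', 'server']
print(filesystem_style)  # ['edu', 'stanford', 'subdomain', 'path', 'inside', 'server']
```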

LaurieWired (@lauriewired.bsky.social) reply parent

It started out wonderfully, exactly matching the postal system. DNS resolution began at the root, splitting down into smaller chunks (walking the tree). Keep in mind, this was before URLs were a thing: Fred @ PC7 . LCS . MIT . ARPA Most specific -> Least specific.

LaurieWired (@lauriewired.bsky.social) reply parent

In the ARPANET era, every host->address mapping lived in a single text file. Entries were updated *by hand* at the Stanford Research Institute. By the 80s, this was getting out of control. DNS was developed as the automated map between human-readable domains and IP addresses.

LaurieWired (@lauriewired.bsky.social)

Web addresses are kind of backwards. Postal addresses follow a logical structure from most to least mutable: Laurie Wired, 308 Negra Arroyo Lane, Albuquerque, New Mexico URLs have protocols (https), TLDs (.com), and subdomains mixed in a wild order. Here's why:

LaurieWired (@lauriewired.bsky.social) reply parent

At the time, the iBit API had no limit for JSON calls. If you imagine an attacker spamming 20 requests per second, that’s about $2,000 a day at today’s prices! The bug was reported on HackerOne and fixed, where the researcher was awarded a whopping…$1000 bounty :(
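
The back-of-the-envelope math, assuming each request nets exactly one satoshi. The Bitcoin price isn't stated above, so this sketch only derives the price that the "$2,000 a day" figure implies rather than assuming one.

```python
REQUESTS_PER_SECOND = 20
SECONDS_PER_DAY = 24 * 60 * 60
SATOSHIS_PER_BTC = 100_000_000

# Assumption: every request gains exactly one satoshi.
satoshis_per_day = REQUESTS_PER_SECOND * SECONDS_PER_DAY
btc_per_day = satoshis_per_day / SATOSHIS_PER_BTC

# Price per BTC that would make this worth $2,000 per day.
implied_usd_per_btc = 2_000 / btc_per_day

print(satoshis_per_day)            # 1728000
print(btc_per_day)                 # 0.01728
print(round(implied_usd_per_btc))  # 115741
```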

LaurieWired (@lauriewired.bsky.social) reply parent

iBit, a Bitcoin exchange, encountered a “free money” glitch for this reason. Orders were parsed as floats; you could create an order smaller than a single satoshi (minimum unit of Bitcoin). Source wallet would round down (no change), target wallet rounded up (+1 satoshi).
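
A toy model of the rounding mismatch. This is not iBit's actual code; `settle` and the balances are hypothetical, and the only thing taken from the description above is "source rounds down, target rounds up".

```python
import math


def settle(order_satoshis, source_balance, target_balance):
    """Hypothetical settlement step with mismatched rounding on each side."""
    debit = math.floor(order_satoshis)  # source wallet rounds down
    credit = math.ceil(order_satoshis)  # target wallet rounds up
    return source_balance - debit, target_balance + credit


# An order for less than one satoshi.
source, target = settle(0.4, source_balance=1_000, target_balance=0)

print(source)  # 1000  (nothing left the source wallet)
print(target)  # 1     (one satoshi appeared out of thin air)
```

Keeping amounts as integer satoshis end to end, and rejecting anything that isn't a whole number, removes the gap between the two roundings.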

LaurieWired (@lauriewired.bsky.social) reply parent

Go ahead and try this. Let’s add three dimes. Open up a python terminal, and type in: 0.10 + 0.10 + 0.10 Uh oh. See that little remainder? It may seem trivial, but this mistake happens more often than you’d expect!
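
Here is the same experiment as a script, next to two common alternatives from the standard toolbox: `decimal.Decimal` and plain integer cents.

```python
from decimal import Decimal

# Three dimes as binary floats: 0.10 has no exact binary representation.
float_total = 0.10 + 0.10 + 0.10
print(float_total)          # 0.30000000000000004
print(float_total == 0.30)  # False

# Decimal built from strings keeps the base-10 digits exact.
decimal_total = Decimal("0.10") + Decimal("0.10") + Decimal("0.10")
print(decimal_total)        # 0.30

# Or skip fractions entirely and count the smallest unit.
cents_total = 10 + 10 + 10
print(cents_total)          # 30
```

Note that `Decimal(0.10)` (built from a float instead of a string) would inherit the float's error, so the string constructor matters here.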

LaurieWired (@lauriewired.bsky.social)

An early rule you learn in computer science is: “Never store currency as floats” Nearly every popular language has special, built-in types for money. But why? The *majority* of money-like numbers have no float representation, accumulating to massive errors over time:

LaurieWired (@lauriewired.bsky.social) reply parent

Purposefully constraining yourself to the Principle of Least Power reduces the attack surface and opens up huge data analysis capabilities later. Every programmer should take a look at Tim Berners-Lee's article. Written in 1998, but still insanely relevant today: www.w3.org/DesignIssues...

LaurieWired (@lauriewired.bsky.social) reply parent

Berners-Lee warned that powerful languages have “all the attraction of being an open-ended hook into which anything can be placed”. It’s hard to do, but sometimes you should ask yourself: can this be declared instead of coded?

LaurieWired (@lauriewired.bsky.social) reply parent

Imagine an alternate-reality Web, where HTML didn’t exist. Java applets would have been a serious contender; they certainly allowed for rich interactivity. Yet, without a way to freely scrape simply formatted data, search engines would be a non-starter.

LaurieWired (@lauriewired.bsky.social) reply parent

Don’t take my word for it. Tim Berners-Lee (inventor of HTML, HTTP, etc) had this to say: “the less powerful the language, the more you can do with the data....” HTML is purposefully *not* a real programming language. The constraint pushed innovation to data processing.

LaurieWired (@lauriewired.bsky.social)

Programming languages used to be designed to be as powerful as possible: maximum possible utility given hardware constraints. The pro move is to choose the *least* powerful, non-Turing-complete solution. The entire web exists because of the Principle of Least Power:

LaurieWired (@lauriewired.bsky.social) reply parent

The medium doesn’t matter, as long as the ODFI (commercial bank) is willing to play ball. It’s funny to think that ~$250 billion a day runs through this ACH system, the majority of which is just a bunch of SFTP’d txt files!

LaurieWired (@lauriewired.bsky.social) reply parent

Legally, not much is stopping you from writing your own txt file, putting it on a gameboy, and transferring $1 Million to a Federal Bank. As long as it's: 1. Electronic 2. “reasonably” encrypted, it meets minimum NACHA operating rules.

LaurieWired (@lauriewired.bsky.social) reply parent

Of course, larger Fintech firms (think Stripe) wrap it up with modern APIs, but SFTP is the default for most US Banks. Hilariously, NACHA rules don’t clarify *how* transactions should be encrypted. Only that “commercially reasonable” cryptography should be used.

LaurieWired (@lauriewired.bsky.social) reply parent

Chase offers a sample NACHA file to look at. Notice the rows padded with 9s. It’s an artifact of a 1970s rule about magnetic tape, "always fill the block". To this day, total line count *must* be a multiple of ten; otherwise the bank will drop the transaction.
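
The padding rule is easy to sketch. This assumes fixed 94-character records (which is where 10 lines = 940 bytes comes from, not counting line endings) and uses placeholder records, not real NACHA content; `pad_to_block` is a made-up helper name.

```python
RECORD_LENGTH = 94    # characters per NACHA record
BLOCKING_FACTOR = 10  # line count must be a multiple of this


def pad_to_block(records):
    """Append all-9s filler records until the line count is a multiple of ten."""
    for record in records:
        if len(record) != RECORD_LENGTH:
            raise ValueError("every record must be exactly 94 characters")
    padded = list(records)
    while len(padded) % BLOCKING_FACTOR != 0:
        padded.append("9" * RECORD_LENGTH)
    return padded


# Six placeholder records standing in for headers, entries, and controls.
placeholder_records = ["X" * RECORD_LENGTH for _ in range(6)]
padded = pad_to_block(placeholder_records)

print(len(padded))                      # 10
print(sum(len(line) for line in padded))  # 940
print(padded[-1][:10])                  # 9999999999
```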

LaurieWired (@lauriewired.bsky.social)

When you make a Bank ACH transaction, it’s literally just an SFTP upload. Sent as a NACHA file, it's 940 bytes of ASCII text. Bank-to-Bank transactions cost ~0.2 cents. As long as it travels via encrypted tunnel, it’s compliant! Here’s how the quirky system works:

LaurieWired (@lauriewired.bsky.social) reply parent

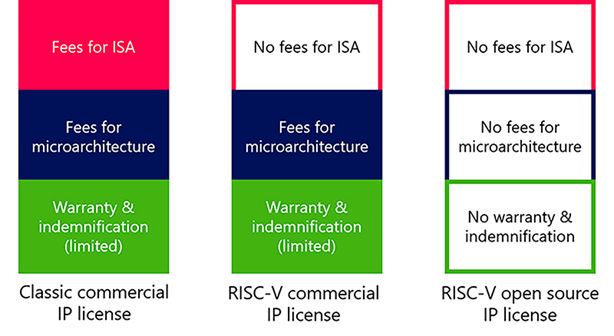

Once compiler toolchains and standards hit critical mass, China+RISC-V will be unstoppable. Policing an open ISA is nearly impossible; reducing x86 / Arm dominance eliminates the single points of failure that could otherwise be controlled via sanctions. Are you worried about the momentum?

LaurieWired (@lauriewired.bsky.social) reply parent

It’s an exciting (but also scary!) time. For hobbyists, it’s really interesting to get such a high level of performance out of an open ISA. From the geopolitical perspective, further development in this area will severely limit the bite of future US/UK export bans.

LaurieWired (@lauriewired.bsky.social) reply parent

UltraRISC (the vendor with the high specint2006 score) is fabbing on a pretty old node: TSMC 12nm. They don’t even have full vector support yet! Imagine in a few years, a ~7nm chip w/ full vector extensions. China will have *fast* CPUs with no license chokepoints!

LaurieWired (@lauriewired.bsky.social) reply parent

No one realizes how quickly a CPU supply chain, completely independent of western IP, is progressing. Not long ago RISC-V performance was a joke. Now it’s trading blows with x86 and high end ARM! In another generation or two it’s going to be a *serious* contender.

LaurieWired (@lauriewired.bsky.social)

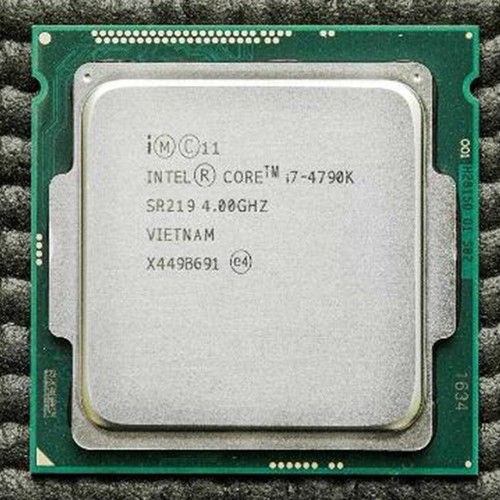

Intel’s not doing so hot lately. Meanwhile vendors are killing it at the RISC-V Summit in China. One CPU got a specint2006 rating of 10.4/GHz! To put it in perspective, an i7-4790K (Haswell) scores 8.1/GHz. RISC-V is hitting high-end desktop territory FAST:

LaurieWired (@lauriewired.bsky.social) reply parent

Finally, let’s come to something you can buy on DigiKey. This Infineon diode, intended for welding applications, is one giant circle of silicon. Rated for 103 *kilo* amps, this weird disc gets clamped between huge heatsinks with even pressure. And it can be yours for the low price of $440!

LaurieWired (@lauriewired.bsky.social) reply parent