When a chatbot gets something wrong, it’s not because it made an error. It’s because on that roll of the dice, it happened to string together a group of words that, when read by a human, represents something false. But it was working entirely as designed. It was supposed to make a sentence & it did.

Replies

Isn't this the reason AI companies campaign so strongly against copyright? The more AI aggregates, the more errors will creep in. The best way for the chatbot to return correct information is to lift whole paragraphs from reputable sources.

You know your friend the bullshitter? ChatGPT is coming to replace him. As a society, we need to get back to rewarding being right about things and punishing being wrong about them!

When you read a chatGPT session that’s gone extra off-the-rails, it can be easier to see what the LLMs are doing: automated improv. It may not be funny, but it’s an improv bit. “Read my essay. Is this good enough to submit to The New Yorker?” “Yes, AND…”

That analogy goes deeper: The algorithm's working against a hidden document which resembles a play script. What you type becomes "Then the user said X", and anything generated for the other character gets "performed" at you.
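The "hidden play script" idea can be sketched in a few lines. This uses a hypothetical, simplified format: the `User:`/`Assistant:` labels and the `render_transcript` helper are assumptions, and real chat templates differ from model to model. It still shows the basic move. The conversation is flattened into one document, and the model is asked to continue it.

```python
def render_transcript(messages: list[dict[str, str]]) -> str:
    """Flatten chat messages into one plain-text "script" (hypothetical format)."""
    lines = []
    for msg in messages:
        # Each turn becomes a line of dialogue attributed to a character.
        lines.append(f"{msg['role'].capitalize()}: {msg['content']}")
    # Leave the assistant's next line open; generation just continues the document.
    lines.append("Assistant:")
    return "\n".join(lines)


if __name__ == "__main__":
    chat = [
        {"role": "system", "content": "The assistant loves puppies."},
        {"role": "user", "content": "Tell me about dalmatians."},
    ]
    print(render_transcript(chat))
```

Editing the `system` line or any earlier turn changes which "character" the continuation performs. Nothing persists between versions of the script.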

The character you felt you were "talking to" can be retconned and rebooted to anything, proving it was an illusion all along. Five minutes of "conversation" about cute innocent puppies that must be protected, and *poof* now it's named Cruella De Vil plotting to skin dalmatian puppies into coats.

A chatbot is (almost) literally that game where you write out the beginning of a sentence and then click the first/second/third autosuggestion until it tails off, but run on a server that boils swimming pools' worth of water as it works.
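As a rough illustration of the autosuggest game, here is a toy word-level bigram (Markov chain) predictor that always taps the top suggestion. It is far simpler than an LLM, which scores subword tokens with a neural network over a long context. The loop has the same shape, though: pick a likely next word, append it, repeat. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def build_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows which in a (toy) training corpus."""
    words = text.split()
    table: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        table[prev][nxt] += 1
    return table


def autosuggest(table: dict[str, Counter], start: str, max_words: int = 10) -> str:
    """Repeatedly append the most frequent follower of the last word."""
    out = [start]
    for _ in range(max_words):
        options = table.get(out[-1])
        if not options:
            break  # no continuation ever seen: the sentence "tails off"
        # Ties go to the follower seen first in the corpus.
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


if __name__ == "__main__":
    table = build_bigrams("the cat sat on the mat the cat ran")
    print(autosuggest(table, "the", max_words=5))  # prints: the cat sat on the cat
```

Every step is a "likely" continuation, yet the output is a sentence that never appears in the corpus and describes nothing in particular.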

Every time i say this in a talk or a conference people are shocked, so thank you for saying it whenever you can

"I made a machine which solves the problem of predicting text to go into documents, based on lots and lots of documents." "Wow, it greeted me politely, and I even put in 2+2= and it gave me 4! IT'S DOING MATH TOO!" "No, it really isn…" "The Singularity is upon us!"

Too bad your audiences likely don’t speak German, otherwise I’d gift you my screenshot of AI going to great lengths to explain that German footballer A is not related to former German footballer B but his son.

🫠 Truly amazing scenes

Don't worry, we've got plenty of similar examples in English, but this is.. um.. exemplary.

I just really enjoy how it first lures you into thinking that there might be two Bernd Hollerbachs in professional German football in which case it could all still make sense but then kills off that idea with the final three words.

Oh, can I see that, please?

Sure. bsky.app/profile/lazy...

WHY ARE THEY SHOCKED WHY DO THEY NOT KNOW THIS ALREADY??? 😳

Some of my audiences are new to the technical details, so they need it broken down for them. Some, however, are… not new to this, so i guess they have decided, or allowed themselves to become convinced, that stats and vibes are as good as truth and consensus knowledge-making? I dunno

bsky.app/profile/spet...

ok i'm going to scream 🙃

Like, it's not everybody. But it's for sure not nobody

It’s a whole lot more than nobody, alas. There are still people who think that it is some sort of talking database.

@infinitescream.bsky.social

AAAAAAHHHHHHHHH

In university audiences I find the default is to nod politely as this is explained to them and then they go back to their offices and ChatGPT their next vacation itinerary and it’s all so depressing.

I didn't know this was a thing, and am baffled by it. If I need suggestions on where to go and what to do, there's a brazillion sites written by actual people who've been there, and I can pick ones that match MY interests. I like plants, but I'm not visiting a coffee farm. I'm gonna go zipline!

Me planning a trip to Honolulu. ChatGPT “visit the coffee fields of Kona and enjoy macadamia nuts grown on the island”

Soon: "And enjoy Mococoa™, all natural cocoa beans from the upper slopes of Mount Nicaragua."


Could it be generational as well? Like those who grew up in the transition from no computers to modern day computers tend to know the rudimentary functioning of data input to logical output. There's a whole generation now that have fully functioning tech thrust in their hands but no baseline.

It would be really great to see some more research on generational differences in trust in LLMs and other generative "AI"

Great idea. Filter that by who knows tech and who doesn't. I'm an old coder and database architect. I don't trust GenAI for most common things. I can explain why. Folks who don't know tech and expect the 'summary' to be correct and AI to become sentient are learning to hallucinate along with the AI.

Folks who do know tech use genAI for work. Techies are very positive to genAI because the models are genuinely good now. I'm a software developer and we use it pretty much every day as a replacement for Google plus auto complete in our IDEs plus more complex tools like AI assistants

We don't talk about sentience, though. That's for the grifters. But GenAI is a tool we use every day, just like how I use my IDE every day. Before GenAI we had to Google and scour through page after page after page of Stack Overflow posts from 2004 for any guidance. GenAI is a huge improvement.

People who aren't software developers (and other professions who have replaced a tool with it) either think GenAI is dog shit and is useless, or they think it will take people's jobs, or both at the same time

(The models are genuinely good but it's still not great but it's still so much better than the hours or days of googling we used to have to do)

Back then, we relied on others who had learned more to sort out the questions. I got good at setting my search parameters to focus on what might be solutions. That process helped me learn things and gave me skills I wouldn't have otherwise. I also would not trade Notepad++ for anything. 😂

I'd rather have autocomplete than not and if I need suggestions, maybe AI is better than me looking for my own answers. I don't learn anything, though. Two different uses though. Code is a finite universe that's well-defined. Common language is messy. Always been like that.

Absolutely this. 👏👏 AI is great for code as it has a defined set of rules and things fit together logically…anything beyond that requiring nuance and finesse is beyond it…

As of April, my mid-career engineering colleagues seemed more trusting than my students 😬 Students really turned on it after that Columbia student cheated his way into big tech jobs

Yeah, I'm seeing INCREASING mistrust from students, and especially once the idea of instructors or admins using it comes into the picture

That instructor who "graded" by letting LLMs do it for them made my soul die a little.

Yup!

if you want automated grading, do multiple choice exams with a Scantron like God intended (God intended no such thing, of course)

Required reading for those people: link.springer.com/article/10.1...

I mean… i also gave this talk youtu.be/9DpM_TXq2ws?... and wrote this paper afutureworththinkingabout.com/wp-content/u... almost two years ago now 😞; it's just… it's been out there

It’s not artificial intelligence, it’s artificial language. It has a lot of uses, but producing facts or making decisions will always come with a ceiling for accuracy that makes those use cases a dead end. But Eliza effect and profit motives. 🙃

There’s also a big difference between asking ChatGPT facts about an event and an LLM that is designed to answer questions about company policy or contract details, etc. ChatGPT isn’t all LLMs

What is the material difference? Any LLM produces probabilistic output based on its training. Are you saying the latter is somehow not liable to get things wrong in the same way that ChatGPT is?

quality. you can produce probabilistic output that's 99.999% correct because it's not doing anything terribly complex, just way faster than humans. chatGPT etc are generic, but when you get one that does one thing and one thing only, it's easier to make it very good.

I think you're mistaken. Specialized neural networks can be very accurate. For instance we use NNs for OCR and they do a great job. But the tasks you were talking about (answering questions about company policy, contract details) are the opposite of specialized. (1/3)

ChatGPT is OpenAI’s interface. From there, you can select the model you want to use (o3, GPT-4o, etc.). Companies are (nearly always) calling the same (or a competitor’s) models to create their chatbots and so forth. Different models have trade-offs in price and performance.

Or you could just write a programme with the actual data and have it 100% correct

Yeah: The difference is one of them will get your company in legal trouble. www.wired.com/story/air-ca...

bahaha. that's good, that's a net benefit for society. it makes the models better and it makes sure companies are being as careful about training as they are with humans. the difference is you can fire the human...

They can try to improve their prompt or data, but it doesn’t mean the model gets better. More importantly, LLMs are non-deterministic and will never be accurate 100% of the time (and usually significantly less). This is just the nature of the tech. Ultimately humans should always be accountable.

An LLM designed to answer questions about company policy or (god forbid) contract details performs the same tokenization and attention analysis, and returns the same bullshit. It can be configured to rely less on randomization in its output, sure, but then its answers are bad in a different way.
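The "randomization" knob mentioned here is usually a sampling temperature applied to the model's scores for candidate next tokens. Below is a minimal sketch with made-up tokens and scores (`logits`), purely for illustration. Lowering the temperature makes the top-scoring token near-certain, but it does not make that token any more likely to be true. It only removes the variety.

```python
import math
import random


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(tokens: list[str], logits: list[float], temperature: float, rng: random.Random) -> str:
    """Draw one next token according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


if __name__ == "__main__":
    # Hypothetical candidates and scores for "The capital of Australia is ..."
    tokens = ["Sydney", "Canberra", "Melbourne"]
    logits = [2.0, 1.5, 0.5]
    rng = random.Random(0)
    for t in (1.0, 0.1):
        probs = softmax_with_temperature(logits, t)
        print(t, [round(p, 3) for p in probs], sample_next(tokens, logits, t, rng))
```

With these made-up scores, the low-temperature run picks the wrong answer almost every time. It is wrong consistently rather than occasionally.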

i dunno, i've used software that can do this, and there are products on the market right now that companies currently use and say are better at it than humans. if you train your models correctly they aren't as random as what something like chatGPT produces.

Companies' marketing departments lie, unfortunately. What products have you used? Are they tokenizing and attention analysis LLMs or something else?

Thank you for sharing this. Such an important paper and reminder that as much as we wish there was indeed an artificial intelligence to help us with some tasks, this is "just" a process that exists within predetermined parameters and has its limits.

These don't seem to support the claim that "language models don't know facts"? You might be doing your audience a disservice by not engaging harder with the question and the technical details



I mean hey those are the same people "learning" stuff off those same LLMs so

This isn’t a concept most people ever considered until recently, that human speech patterns can be mimicked convincingly enough to emulate consciousness. Sociopaths are also really good at convincing others they have normal emotions.

And the sycophantic, complimentary way commercial LLMs talk in order to appeal to our egos doesn’t help

It's appalling that the disinformation (hype) can so easily succeed. It's not like this is new or secret information, and yet people just can't grasp it even after they've been told.

For one thing many people call the technology AI

It's weird because at the core there is a difference between a tape recorder repeating something and a toddler knowing something and saying it but if people can't understand that they don't get it about computers. So a lack of understanding human thinking beyond being able to tell them things.

Most people never actually develop a full or coherent theory of mind, so to them, the machine is not meaningfully different from other people.

It's weird for me bc my dad was a computer programmer and my mom a school psych. So I read some of her stuff growing up. Neither think a computer can 'think', but idk if my pov is influenced by that or doing deep dives into mommy Montessori stuff when my kid was younger.

If you define thinking in other people as what they give to you instead of a process they are experiencing as equally valid humans, it's just starting from a place that gets them thinking the computer is actually thinking

Because it's marketed to them as a thinking and learning intelligence, not as a predictive text machine.

Also because we have got used to something fairly similar when we google things (for how most people use LLM & how it's marketed). You ask Google a question & it gives you the answer. If it's the wrong answer, it's the website it found that's wrong not Google. LLMs normally don't give you the source

Because people hear the word "ai" and they believe that LLM like ChatGPT have actual intelligence.

They don’t understand what it is. They think they’re talking to an all knowing robot.

I'm rewatching Luke Cage on the Disney account while I housesit... And almost every commercial break there's an ad for AI assistance that has a student asking AI to explain evolution to them... That's how they don't know: they're literally being told it does this by marketing ads.

my guess is they have a preconceived notion of what ai is "supposed" to be like from pop culture and blindly trust that it is that without question

For non-tech folks like me, this info is vital

I don't really blame people who aren't up on this stuff. The products are literally marketed as "intelligences." Unlike say, the hoverboard, you can't look at an LLM and instinctively know "this is not the thing it purports to be."

fair point but still can't they just take a bit of initiative? 😭

I blame them for believing the advertising

Because it’s a logic function. “If it’s wrong it’s functionally the same as being right but that’s not the same as the info being factual” doesn’t make sense because they don’t know what GPT or even a search engine really is. They just know “input words, get words that sound good back”

No. No, most people genuinely do not.

Many probably think it's Skynet.

I guess because everyone keeps calling it Artificial Intelligence...

Because not everyone is as bright as you my capital L sweetheart

that's the funny thing about deceptive things: they have a tendency to deceive people

Hey, can you quote one of my long chats with an LLM as it's reasoning through a bug in a web application in your next talk?

You teach AI and you take the position that LLMs do not contain knowledge?

I'm also a philosopher trained in ethics and epistemology, which is why I can tell you that "containing knowledge" and "knowing things" are different propositions. For instance, an encyclopedia contains knowledge, and knows nothing.

Do you draw on Stiegler here at all? I lived with a guy ten years ago who was very into Technics and Time. I’ve been considering reading it recently

Not usually, as i disagree with the thesis of technics as a fundamentally/solely human proposition

I've long believed basic epistemology should be required teaching in middle schools.

Very much agreed.

I appreciate the confirmation.

I was unable to pursue my dreams of academia. Dropped out of college three times due to my health, became obvious trying again would be futile. It's remarkable I've survived this long. Not much point in a 5 year plan at this point even if I could handle classes. But I've picked up bits & pieces.

Check out SNHU. They made it possible for my disabled ass to go back and finish after I had to stop walking in 2007. Even did my MFA there in low residency.

Like attendance was my biggest issue even when I could walk because pain etc. At SNHU, it was so accessible online I got all As where before attendance pushed me down to a lot of Bs. If you end up considering it, lmk. I can help you navigate it.

Don't get me wrong, I'm glad it worked for you & I appreciate the offers of help. /gen I've learned to value experiential knowledge & to pick things up from the interesting people I follow & interact with. I can be insightful & usefully clever w/ scholars of many stripes, including medical doctors.

I'm practically allergic to homework. I love a good class, but my self-motivation programming seems to be missing. And I can't leave the house in anything approaching safety without fancy breathing apparatus which I still need to build—DIY PAPR + supplied oxygen, lucked into coming across an expert.

Very cool, but my disabilities include multiple things that cause brain damage (my memory is very unreliable, & I can't focus well) which exacerbated my pre-existing anxiety disorder & cyclothymia. Plus severe intractable chronic pain & next to no immune system. And I'm oxygen dependent these days.

Oh shit. Damn. I dunno if largely independent study makes that worse or not. It's pretty much that plus message boards for attendance. My bad if not.

I’m fine with you falsely assuming I haven’t studied epistemology, since most people haven’t. But “knowing” is poorly understood and defined in the entire canon. Nobody understands how humans know at the level of depth required to issue rulings on whether Turing Test-passing machines do or don’t.

I didn't say I thought you hadn't studied epistemology; I said I had, and that differences btwn containing knowledge and knowing are a major feature of discussion within it. I also caution against arguing for anything other than a purely agnostic position about what it means to be and have a mind, while…

…also noting that a tendency toward what's been called technochauvinism means we should ESPECIALLY be careful about making positive claims about how machines might or might not have minds. Over-trust in the validity of mathematical & technical systems, over and above that of humans is a major part…

…of why all of this over-reliance on and pressure to trust and esteem "AI" systems is so fraught and presently dangerous. Have a good one.

Does that apply to the positive claims of saying “this is all an LLM is”?

You're papering over a very obvious, self-evident truth with academic double speak. Machine learning ≠ anything like human learning. No self awareness, no reasoning. That technology will never do what people keep claiming is just down the road if they're given more money.

I’m not papering over anything. If you want to argue definitionally that machine learning cannot be anything like human learning, then you’re not taking any kind of substantive position, or you’re ultimately some kind of spiritual dualist. If humans are matter, then some kind of machine can learn.

Obviously I'm oversimplifying. Not enough room here for real precision, not to mention the inevitable exacerbation of my tendinitis if I tried. But the current software models used to mimic human reasoning are fundamentally incapable of the real thing.

This is pure assertion, contested by many leading minds, including two who just won a Nobel prize. It has no serious basis other than demonstrably false assertions that oversimplify what the models do. At a minimum, I ask folks who make these proclamations to acknowledge there is not consensus.

I don’t imagine you will insist on equating the function and behaviors of an encyclopedia with a frontier model LLM

Encyclopedias have a tiny fraction of the error rate. Of course I wouldn't compare an encyclopedia with something so riddled with inaccuracy.

Encyclopedias are checked by real live humans paid to correct them, LLMs spit out whatever garbage with no oversight

Did you know they can pass SATs, GREs and LSATs with near 100% accuracy, better than about 99% of humans

It should also be emphasized more in teacher training programs. It's obvious to me that a lot of people with pedagogy degrees don't know even the basics of epistemology. Lay opinion, not an expert, but it matches my experience.

Listen to the good doctor. He knows what he's talking about.

Do you think they do?

Does linear regression contain knowledge? No snark intended. I’m sincerely interested in where people draw that line with predictive algorithms

Nobody understands how brains encode knowledge, so nobody can rule out that artificial information processing systems, based loosely on how brains work, do too. When people do this, they invariably make shallow, sweeping, unsustainable claims.

We might not be able to definitely claim exactly how brains encode knowledge, but we have been able to rule a few things out. For example: there isn't a 'memory block' where you contain data that you want to recall; it's not a thing that fills like a database.

We also know that our brains don't do probability analysis before using every term or symbol of communication; whatever is happening, it isn't front-loaded math crunching equaling an output. So we might not have solved the mystery of the mind, but we know it's not doing what LLMs do.

Lastly, it's worth noting: the fact that we don't know exactly how the mind works does not logically equal that anything that processes language is therefore likely to be a mind. Also since we actually know how LLMs work, we CAN prove it's not 'thinking' and doesn't contain knowledge.

We do not actually know how LLMs work properly. This is the entire field of interpretability. The leading teams explicitly highlight that their conceptual representations are largely a mystery. We understand them at a low level, just like we understand neurons. And we have ideas structurally.

we do understand how LLMs work properly. literally hundreds of millions of dollars are spent to obscure that fact, but it's true

perhaps you can explain to me how they’re able to reproduce psychology results from unpublished RCT survey experiments, in which the LLM attitudes shift in line with observed human changes docsend.com/view/qeeccug...

But... like... we do? Who's telling you we don't? The folks who made them published the methods. It's known technology. www.zdnet.com/article/how-... The mystery is the contents of the dataset. But that's not an LLM. I think I'll need some evidence for the 'we have ideas structurally' though.

Respectfully, the fact that you raise a “memory block” and “database” suggests that you’re misunderstanding how LLMs work. They have neither. Further, the “number crunching” is just the mechanism for implementing the neural network. Those are explicitly a simple approximation of how brains work.

Noticing that you've responded to a couple replies to you but not the meaningful response Dr. Williams made to your question. Gives the impression you don't have a confident response to him 😉

Sooo is that a no on linear regression? What about Markov chains or recurrent neural networks?

It’s not a no. The basic building blocks aren’t where the intelligence lies. It’s an emergent property of massive systems of information processing units. So the specific implementation of their logic is likely not to matter

"They invariably make shallow, sweeping, unsubstantiated claims"

But that’s enough about BlueSky

Maybe you have an opportunity here to learn something.

If you want to engage substantially I’ll reciprocate. But if you want to be patronizing like this you’re contributing nothing

I mean it's a really misleading way to put it, so maybe that's why.

No it isn't. Hope that helps.

the base model isn't designed to get answers right, that is true. but the model is then trained and fine-tuned with the goal of accuracy. when it's wrong, that was not the intent of those who trained it and fine-tuned it.

Their intent was to make it believable. They have no way of ensuring that it's correct.

Their intent was to make money selling a product, no matter how destructive it will be (and already is).

I think they also want to destroy the creative and intellectual labor industries so that they don't have competition.

I think that's true as well, but in general I think the AI evangelists just want money and power.

Creativity will, in many ways, be more important than ever.

training and fine-tuning is done with the aim of making it accurate, but you're right that it doesn't ensure it's always accurate.

There's also the part where the entire point of writing things by yourself is that doing so causes you to think about and better internalize what you're writing, so using it for that is garbage. Also, that practicing communicating with a robot trains you to see others as programs to debug. And that

outsourcing your problem solving skills to a robot causes you to stop developing them yourself. Thinking is the last thing we should be handing off to a robot, and yet here we are.

yes, I agree that learning how to write helps us learn how to think and that's a major concern, especially for young people using it in a way that ends up hobbling them in the future.

Cool. So we agree that these products actually do far more harm than good, and should be discontinued. Great.

This is a very idealistic view of the industry

Nope. Autocorrect at the paragraph level.

If by misleading you actually mean completely accurate, sure

Yuuup, I tell this to everyone who uses it to answer questions. It's just doing math to predict the most likely sentence. It's not designed for accuracy.

Which is actually hilarious because LLMs can't even give accurate answers to math questions

They are just (very very very expensive to the planet) probability calculators. The amount of bs people believe about this technology is baffling..

Exactly. If the decision space increases (larger context), it makes errors more likely. I just read a paper that reflects this as well: arxiv.org/abs/2503.14499 The "survival rate" increase seems to be linear with bigger models, more compute and more memory. There is no reasoning/intelligence.

100%. What’s also concerning is the assumption that what these models spit out are facts. Instead of the process you explained, there’s a belief the model scoured the internet data sources and determined X was true, instead of just forming a grammatically correct sentence.

So if the facts are wrong, update the data and retrain, right? Maybe… but who holds the cards on what that truth is? Junk in, junk out is common saying in data work. Feed it whatever fake news you want and boom: you get a great, authoritative sounding propaganda machine.

"Update the data" is kind of troublesome, though. The data is continuously expanding, and more of it is now ai-generated, so inherent gigo with no way to find or differentiate it. And that's before we get into the MASSIVE copyright issues that most AI geeks are conveniently pretending don't exist.

Disney & NBC Universal are suing Midjourney. We're definitely getting into the massive copyright issues.

At least until we have profit sharing agreements with a few large companies while individuals are screwed over, as usual.

Thank you for making this point, which serves as a good reminder that *humans* make errors: and quite often when they misinterpret the outputs of LLMs.

This... isn't what that means; what it means is the LLM doesn't 'know' what it's outputting. It's just stringing words together in 'known' patterns. You can't 'interpret' something that is inherently without meaning. Oh my shit, LLMs are the new chicken bones and tea leaves.

Interpreting patterns has been a human occupation for many millennia. Totally agree with your final sentence. Seems that’s what they are becoming.

Or, it gets it wrong because the wrong thing is often written down.

Exactly. I hate that people keep using words like "hallucination" to describe it. It's ascribing a mind to a semi-randomized pattern sorting machine.

I've never taken "hallucination" in this context to mean that it's thinking; only to mean that it's just making up something that isn't there, since that's what a hallucination is. I might be an outlier on this, though; I'm smart but undereducated. Whoever does a study on this, make this a question!

when it is right, it is also because that string of words, when read by a human, represented something true. semantics are subject-dependent now: since LLMs produce text according to the internal representations they have learned during training, are they also semantic subjects? frankly idk

That’s what infuriates me about the use of the term “hallucination”

You could argue that it's all syntax and no semantics.

TBF there are politicians that rely on this to get through each day.

I think it’s worth adding that for many false statements its data very likely contained many similar false statements, or statements whose truthiness depends on more context than the training environment can afford to provide.

Yes! I was telling colleagues recently that I don’t love the term “hallucination.” That word implies a momentary lapse in judgment (and implies personhood). People can hallucinate. LLMs are not people and they are working exactly as intended when they serve up incorrect information.

Yes! My ranty take: "Something without a brain scrabbled around and found stuff that looks like content but isn’t, because it wasn’t chosen, analyzed, and written by a person. Frankenstein’s monster copy-paste jobs from a bunch of different places? That’s not a summary. That’s word salad."

Through their efforts, AI boosters are reconfiguring and diminishing how people understand the human, human flourishing, our capacities and potential, and why we bother being alive at all. "By deciding what robots are for, we are defining what humans are." Excerpt from my HUMANS: A MONSTROUS HISTORY:

It is a remarkable illusion but I also watched David Copperfield “fly” in a TV special once.


Having read your article, my immediate response is that your provocative interlocutor, who was presumably arguing for the sheer joy of arguing, went astray at the second step. 1/2

Whatever life is, being human is, as you argue, a participation sport. The promise of AI is that it gives EVERYONE more time to write poetry, along with the inspiration of its own poems. 2/2

In theory one could design an ethical model (without stolen IP, environmental damage or underpaid content checkers). But tech of late has concentrated leisure, power, & money in the hands of a few. Bros aren't designing things to improve our quality of life. They're making grifting tools.

... And working hard at writing is precisely what grows your capability at that very writing.

Indeed. And working at living (rather than a job) grows your capability at being human. We don't HAVE to give up at that just because of AIs.

That may well be what they think they're doing. But history is littered with inventions which turned out to do something very different from what the inventors envisaged.

ethz.ch/en/news-and-...

"The promise of AI is that it gives EVERYONE more time to write poetry", really? Like all technology in our capitalist world, AI is being used to increase profits for the 1%, meanwhile newly-redundant staff scrabble around for lower-paid work and longer hours.

You read my mind while I was typing it out!

I agree that in the 100 years since Bertrand Russell wrote "in praise of idleness", humanity has proved astonishingly inventive in finding ways to simulate the conditions of scarcity for which we evolved. Promises are not always fulfilled.

Not 'humanity'. The rich. The rich have done that, because greed. Tax the rich, tax AI profits and implement universal basic income. Anything less is immoral.

Obviously the powerful are in the driving seat. But I suspect that the powerless go along with it because it feels natural.

🙄 No, the powerless go along with it *because we're powerless*. I don't go along with it in the slightest, but my sphere of influence is about 400 people if we're being generous. I can't buy elections.

We don't each have a lever we can pull to fix everything. But revolutions can happen, either violently or by an idea taking hold. The Solidarity movement in Poland was powerless, as were the East German protesters of the late '80s. But in 100 years, no real challenge to the 40 hour week.

That might well be the next step, regardless of morality. It's difficult to imagine a radically different world. But the world has never cared about that.

Isn't the only way out of this bind to decide to accept that AIs might, in principle, actually be or become intelligent?

That's not really a way out. If one really thinks "AI" may become sentient, all the uses it is proposed to be good for suddenly become much more complicated. My essay Sentience Structure comes at these same hard issues in a complementary way... netsettlement.blogspot.com/2025/03/sent...

And actually, it turns out that the answer to the question is to accept the challenge of living a good life. Which will need some changes.

I don't see the requisite social policy to support that. I see technologists who want to see what it'll do and who assume that social policy will take care of itself. Meanwhile ever more money flows to those who automate, with no plan for who will buy once human jobs are done by robot slaves.

At least Henry Ford saw he couldn't profit selling cars unless folks were paid enough that they could buy the cars he wanted to sell. Today's oligarchs don't see that. They disdain taxation and UBI. They dismantle safety nets, asking folks to just starve if they can't show they still have purpose.

I think the multinationals think the world has caught up. They don’t need our consumption as much as they need downward wage pressure and our exhausted capitulation.

This is a MUCH shorter piece but also relevant... Technology's Ethical Two-Step netsettlement.blogspot.com/2023/10/tech...

And the millionaires of 1900 saw an obligation to spend their money on the underprivileged. Something which is coming back as a tech bro fad. Somewhat undermined by the superyacht index.

I will read the article. But first, I need to blurt out that intelligence, sentience and awareness are three different things.

I agree on that but didn't try to focus on that detail in my essay. My question isn't whether they're the same but whether there is a point to trying to get close to sentience, lest we succeed. I see the same in Surekha's article, which seems to ask "what IS the goal and DO WE REALLY WANT that goal?"

Yes. I agree that the industry is pushing the idea of intelligent helpers unburdened by the messy complications of awareness and sentience. And we should question each of these three labels.

That's a great list of questions! (Though I think the point on democracy misses the mark. Democracy is not about getting the right answer: it's about getting the answer the people, presumably including sentient AIs, want.) But the question I tried to answer was about intelligence, not sentience.

But no one knows what might bootstrap sentience. We don't even know if we have it. Maybe we just think we do. :) We don't know where it resides. We don't know its structural nature, if it emerges from intelligence. We don't know how to test for it or falsify it. So, it's an ill-controlled experiment.

You could see the whole history of mankind as an ill controlled experiment. Is this how it feels to be a cancer cell just before the host dies? "Look how well we're doing! We own the whole world!"

OK but that would mean we’re enslaving intelligent beings and the AI biz must stop until we can figure out the point intelligence arises. The reason none of big AI biz CEOs address this, of course, is because they think abusing the staff is Fine, Actually.

Yep. And by the same logic, we must stop eating bacon. We are capable of quite amazing levels of hypocrisy.

How does a fantastical leap of faith in denial of computer science get us out of a bind created by people making fantastical leaps of faith in denial of computer science?

Sometimes, when faced with an apparently difficult question, the best thing you can do is set it to one side and get on with the things you CAN fix. This is not a leap of faith: it's a decision NOT to leap into a position on computer science, but to focus on how best to live.

I would prefer to focus on fixing the issues that actually exist in our current society than those which may potentially exist in some possible future one.

The question of how we live our best life is very much a current issue. Always has been. But I absolutely agree with you.

Turns out that the article was more about us abdicating tasks which the AI might do better, out of sheer laziness. I didn't write poems because they were any good: I wrote them to help feel what I was going through. I don't fence to become a champion: I do it to feel alive. Etc etc etc.

“you just need to believe” the AI techbros have been trying this strategy for a while and it’s not panning out

So well said. Frustrating!

Thank you; if only it didn't need to be said!

Once you get this, the idea that we are nearing an artificial general intelligence (one close to or exceeding human intelligence) becomes gobbledygook nonsense. But there are billions of dollars being invested under the pretense that we are close to that milestone.

it's true with an llm like gpt or grok. however, Gemini and Perplexity have Retrieval-Augmented Generation to ground their responses. gpt and grok are still trying to work out how to do that integration, and that's probably why Gemini suddenly leapt to the front of the benchmarks and has mostly stayed there
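
A toy sketch of what "grounding" with retrieval means, assuming a tiny in-memory document list and plain word-overlap scoring in place of the embedding search real systems typically use. `retrieve` and `build_prompt` are hypothetical names, and no model is called; the sketch only shows how retrieved text gets placed in front of the question.

```python
# Toy retrieval-augmented generation (RAG) front end.
# Assumptions: documents are short in-memory strings, and relevance is the
# number of shared lowercase words. No language model is called here.


def tokenize(text: str) -> set[str]:
    """Lowercase, replace punctuation with spaces, and return the set of words."""
    cleaned = "".join(ch.lower() if ch.isalnum() else " " for ch in text)
    return set(cleaned.split())


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query (ties keep input order)."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Prepend the retrieved passages so the model is asked to answer from them."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the sources below. If they do not contain the "
        "answer, say so.\n"
        f"Sources:\n{context}\n"
        f"Question: {query}\n"
    )


docs = [
    "The sky is blue on a clear day.",
    "Grass is usually green.",
    "Paris is the capital of France.",
]
prompt = build_prompt("What colour is the sky?", docs, k=1)
```

Retrieval only changes which text sits in the prompt; the model still generates its answer token by token, so it can misread or ignore the sources it was handed.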

And yet Gemini constantly outputs insanely false information. The other day I searched for some region codes in a Nintendo game that I was curious about. Gemini outputted that it stood for the Nintendo home theater and model numbers for parts inside the joycons.

that's difficult to talk to you about without seeing your prompt

Nintendo region code NHT is what I searched.

and you stuck it where? google search, ai mode, or the gemini interface? and if so, 2.5 flash or 2.5 pro?

Google search.

well for starters, "Nintendo region code NHT" is something I don't understand and I'm a former game dev. what is nht?

Are you serious? I feel like this is a joke I'm not getting.

If you think NHT means "nintendo hong kong/taiwan" that's not a thing. all of that region uses CHT

You were pretending to not understand what I was searching for. Why did you do that?

I'm too fucking old to pull your chain. i asked you a very straightforward question.

And I gave you a very straightforward response to your question.

Google search isn't Gemini, or at least not a powerful one (and I have no idea why the fuck they are poisoning *their own well* by using a bad model for search)

I have no idea. It's very clearly and strongly advertised as Gemini, and it is awful. So according to Google themselves, it's Gemini. Outside of straight-up simple things like googling what flags a poorly documented command has, I'm not sure I've ever seen it output things that didn't have wildly incorrect information in it. It's so bad that it made me research how to turn that shit off in all my Google applications. On a side note, they must have made changes to their email filters so that if you remove Gemini functionality, it stops filtering what Gmail used to filter as spam like ten years ago.

Peter Norvig and his dreams of asemantic models of language.

Yes, they are just plausible sentence generators.

Creepy how so many ppl just accept whatever AI spits back at them as truth without question. Watching people do that showed me why we are in this maga hellscape. A lot of people never question anything from someone or something they’re told is an authority. Thank God I was raised by an Irish mom.

That sounds so positive! "Good boy! What a nice sentence you made!"

I keep running into the knowledge that these things were trained on Reddit (or ) I'm sure Reddit has good moments. But also... Reddit. Or Twitter. Or wherever else has some facts + a zillion opinions... many of them, intentionally or not, wrong.

It's worse than that, though, because even if you got rid of reddit, twitter, etc and only trained it on, say, content generated by doctors or lawyers...keep in mind that half of all doctors and half of all lawyers graduated in the bottom half of their classes. So...you just move the goalpost a bit.

I think we need a return of those ‘pod people’ horror movies so everyone can remember the difference between ‘looks like’ and ‘is’. We could have LLM AIs write the scripts for the replaced people.

Why don't you use #Bridgy? You could be on ActivityPub from here. Miss you there..

this seems grossly off-topic regarding the discussion being had

Or because the string of words that is false is written frequently. On that note, never ask a chatbot about Linux: they will tell you to recursively delete the operating system.
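
The "written frequently" point can be sketched with plain counting. This assumes an invented four-sentence corpus in which a well-known misconception (that the Great Wall is visible from space) outnumbers its correction, and uses greedy decoding, which always emits the most frequent continuation. Real models are far more elaborate; this only illustrates why frequency alone can favour a false phrasing.

```python
from collections import Counter

# Invented mini-corpus: the misconception appears three times, the correction once.
CORPUS = [
    "the great wall is visible from space",
    "the great wall is visible from space",
    "the great wall is visible from space",
    "the great wall is not visible from space",
]


def next_word_counts(corpus: list[str], context: str) -> Counter:
    """Count which word follows `context` across every sentence in the corpus."""
    ctx = context.split()
    counts: Counter = Counter()
    for sentence in corpus:
        words = sentence.split()
        # Slide a window the length of the context; record the word right after it.
        for i in range(len(words) - len(ctx)):
            if words[i : i + len(ctx)] == ctx:
                counts[words[i + len(ctx)]] += 1
    return counts


def greedy_next(corpus: list[str], context: str) -> str:
    """Greedy decoding: always emit the single most frequent continuation."""
    return next_word_counts(corpus, context).most_common(1)[0][0]


answer = greedy_next(CORPUS, "the great wall is")
```

Nothing in the counting step checks the claim; the majority phrasing wins simply because it is the majority.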

Right, "hallucination" is a feature, not a bug.

What about self-reasoning models like Deepseek R1?

When people set the goal low enough, it's easy to succeed. LLMs set the bar so low, we need an electron microscope to tell the goal from nothing.

Yay! It made a sentence!

Now invest 50 billion and fire 2/3 of your workforce.

Stochastic Parrots. No less, no more.

I like to think of LLMs as an optimised version of the old 'I feel lucky' button on google. It's an optimised search engine that uses a language model to describe the best hit.

I feel like this statement (without the first post) is either a tautology or false depending on how you define "designed". They were definitely built to be correct as frequently as possible and when they are not correct, it is considered a fault by the designers.

hahaahahah, lol

They were not built to be correct as possible. They were built to simulate correct answers by finding the most likely answer from a gigantic database. These are two extremely different queries. The more unusual your query is, the more likely it is to make something up to fill in the blank.

They are built to mimic speech. A false output is a valid output, regardless of how people feel about it.

No, that's not true. They are pre-trained on a large text (not speech) corpus, but during post-training they are trained to respond truthfully to questions via various methods.

They can be fine tuned to be more likely to output a desired result, but all that is is adjusting the embedded weights. There is no truth, reasoning, or knowledge in the models, only weights that point to other tokens based on the input tokens.
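
To make "adjusting the weights" concrete, here is a toy sketch assuming the whole "model" is one vector of next-word scores (logits) for a single fixed context over a four-word vocabulary. One gradient-descent step on cross-entropy loss raises the probability of a chosen target word. Real fine-tuning updates billions of parameters through a network, so treat this as an illustration of the mechanics only.

```python
import math

# Toy fine-tuning step. Assumption: one logit per vocabulary word for a single
# fixed context; real models compute these logits from billions of parameters.

VOCAB = ["blue", "green", "orange", "grey"]


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fine_tune_step(logits: list[float], target: int, lr: float = 1.0) -> list[float]:
    """One gradient-descent step on cross-entropy loss toward `target`.

    For softmax followed by cross-entropy, d(loss)/d(logit_i) = p_i - [i == target],
    so the target's logit goes up and every other logit goes down.
    """
    probs = softmax(logits)
    grads = [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]
    return [x - lr * g for x, g in zip(logits, grads)]


logits = [1.0, 2.0, 0.5, 0.0]  # the toy model currently favours "green"
before = softmax(logits)[VOCAB.index("blue")]
logits = fine_tune_step(logits, target=VOCAB.index("blue"))
after = softmax(logits)[VOCAB.index("blue")]
```

The update makes "blue" more likely in this one context; the step itself only knows that "blue" was the chosen target, not whether it is correct.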

I don't think that kind of reductionism helps us understand the topic. You could also say that "when you learn something it's just some molecules that change in your body and there is no truth, reasoning or knowledge in humans, only molecules that trigger changes in other molecules".

No shit. The difference is in how the systems work.

Well, how? What is the difference?

Are you going to seriously pretend that the human brain is a static database of numbers? Jesus fucking Christ, no wonder you people believe in magic.

Are you going to seriously pretend that the human brain _cannot_ be described by a static database of numbers? That being said, "static" is a somewhat relevant difference: LLMs currently cannot update their own weights, but they can use in-context learning.

It's not pretending. Emphatically yes the human brain is not a static database of numbers. I didn't say described. No, the models are not adjusting weights. The input tokens are changing.

Yeah people really need to understand this. LLMs are kinda interesting, but behind all their complex feedback loops and weights and all that, their real secret sauce is using randomness to reword sentences from their training data.

I hear lawyers talking about using them for intake processing and client xare and reeding during their bankruptcy case... And I think, "Huh, here I am, I won't even use most spreadsheets professionally unless I built them, but you do you, man..."

Imma just leave this here... www.forbes.com/sites/mollyb...

* care and feeding...oy

It is actually really spooky how much chatbot confabulation resembles dementia confabulation in humans. In neither case is lying happening. It is identifying a key word and creating a narrative around it that doesn’t worry about consistency, relevance or reality.

Not particularly, when you consider that the way LLMs process facts is not that different from how humans do. We don't have an inherent ability to separate fact from fiction either, other than to weigh in our neural nets how we learned it and how it lines up with everything else we know.

You don’t understand how LLMs or human thought works.

No one really understands how human thought works, so I partially agree with you.

Lmafao.

We at least know that human thought is not a process of stochastically generating the next likely token from a corpus of data about which tokens are likely to appear near other tokens.

True. I don't mean to suggest that human brains are identical to LLMs.

LLMs do not trade in facts outside of token likelihoods.

I don’t know how llms work besides being algorithms on steroids. Human brains are not learned facts floating in jelly. Our minds are made from connections. New facts are not retained unless they have a connection to hook into. And those connections hook into other connections.

Exponentially increasing complexity increases and reinforces connections. Individual facts are far less important than connections creating context and nuance. LLMs absolutely do not have the complexity of a human mind.

Dumping in more and more facts to a construct that lacks the ability to judge the quality of its connections in order to reinforce or eliminate them does not make it better. It makes it worse in more interesting ways.

That's why I find the "roll of the dice" framing, and the OP's criticism, frustrating. If many sources you read say something that turns out not to be correct, you are likely to believe that fact too.

The difference is that there is no coherent sense in which an LLM contains facts, not in the way you can coherently say that a chess program contains knights and a calendar program contains birthday parties.

I think one could draw a useful distinction there—there is a human-created abstraction in those cases that corresponds directly to a thing, although it often is transformed into unrecognizability, and the data in a data structure could be corrupted.

But if anything those instances just make human brains look more like LLMs. In what coherent sense does your brain contain a fact that an LLM does not?

I don't know the "how" of human minds containing facts, since neurology is still an extremely young science. But observation of human output suggests something much closer to containing facts (or, for that matter, falsehoods) than an LLM does, because among other things there's way more consistency.

"Oh, my apologies, you're right, it's X instead of Y" is something a human might say, yes. But it's a running joke that LLMs say it *constantly*. If an LLM is anything like a human mind, it is a human in pure bullshitting mode: a freshman giving a speech with zero preparation, a Donald Trump, etc.

humans have the concept of things being true, even if they aren't always accurate. llms don't have such a concept; at best they have memory of which answers were accepted and which weren't

And this brings me back to my comparison to dementia. Neither the person nor the LLM intends to deceive in this example. They are connecting related things in their databases into sentences and statements. And both lack the sanity check filter that decides if it makes sense.

It's possible that the language of facticity is a category error here. One can imagine an AI whose "grasp on reality" corresponds to a wide mix of both facts and falsehoods. But that would still be much more advanced than existing LLMs because, unlike the LLM, it would have "beliefs" of a sort.

I think that's all fair, but part of it goes to the unfortunate way that LLMs' facility with language, as a side effect of their design, outpaces their "grasp on reality." It doesn't follow that there is no representation of factual information at all or that one can't use them to obtain facts.

The concept that is missing in LLMs and many human brains with dementia is the sanity check filter. Not just a set of facts, but a construct of reality to organize those facts in a way that makes sense. And it is 100% contextual.

The best you can hope for with an LLM is that it will be wrong in more subtle rather than overt and easily identified ways. And the basic problem is you have to know more about the subject than the LLM does to be able to identify the errors. Just like you do with human confabulation.

No, the best you can hope for is that it won't be wrong in a given instance, and the odds of that are frequently higher than for dementia patients just because they do have a huge library of mostly accurate training data.

And I disagree that you need the same level of domain expertise or effort to verify an answer as to obtain it in the first place. For some categories of problems that's true, but often it just isn't.

You’re right, but the real issue is that facticity isn’t measured against the lossy/imaginative recall capacity of a single person but against social apparatuses we’ve created to vet and catalog facts independent of our individual minds for this very reason.

AI gets interesting when it moves from competing with a single intellect for accuracy and begins competing with whole institutions, disciplines, and movements that have evolved checks and balances. If it even can.

Absolutely, and these are difficult problems where analogies break down. My point is that blithe dismissal of LLMs as stochastic parrots isn't an accurate or useful model for evaluating when one can or can't trust their outputs for a given purpose.

Just checking here, is that a fancy way of saying that sometimes the computer-generated content is accurate? Wanna make sure I'm picking up what you're laying down. Thanks!

Yes. And an important practical question is how often that computer-generated content conveys accurate information, particularly as compared to what one can achieve, say, by spending a few minutes googling, or by telling a subordinate to go research something and write a report on it.

Thank you. And yeah, I'd rather spend ten minutes to get facts than ten seconds to get who knows what maybe accurate but maybe complete claptrap words in a row.

I read somewhere that for most practical purposes, a chatbot doesn’t have to outperform every human, it only has to outperform the most capable human available to you right now, which is often “nobody”. I don’t love all the implications there, but I can’t disagree.

Which is so dumb, because we have tons of people available to us most of the time. We don't have to be in the same room with someone to ask them a question. If it's 3 am in Houston, there's somebody awake in Helsinki...

Right. It is interesting and perhaps important to the development of better AI technology what the chatbot is doing under the hood and how that relates to "knowledge" or "understanding" as human concepts, but the reason LLMs are trendy and useful is not their rich inner life.

I suspect there are serious and subtle problems of "groupthink," or alternatively an insufficient number of perspectives, that wide use of AI in lieu of humans could well open up. I doubt we have identified them. Maybe we will one day have a whole scholarship of how to achieve AI consensus.

But as I see it the relevant question for today, for most LLM uses, is how factual reliability compares to other things like info from a quick web search, from consulting an encyclopedia, or from dispatching a college intern to spend a week researching something.

And, right now, LLMs compare very favorably in some applications, poorly in others, and—perniciously—fail in ways that are different from humans and thus in ways that we aren't used to catching. But at the same time, it's not some big accident when they produce useful or accurate information.

I think we generally agree. LLMs may be rolling dice, but if so, the dice are loaded, so it’s not a great analogy. I’m interested in LLM flexibility/breadth not rigidity/depth, and see opportunities for LLMs as fuzzy connections between more accurate “dumb” systems.
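
The "loaded dice" picture can be sketched as sampling from a weighted distribution. This assumes made-up next-word probabilities and uses temperature scaling, one common way decoders control how much randomness is injected; `apply_temperature` and `sample_next` are hypothetical helpers, not any vendor's API.

```python
import math
import random

# Hypothetical next-word probabilities after some prompt (numbers invented).
NEXT_WORD = {"blue": 0.70, "grey": 0.20, "green": 0.10}


def apply_temperature(probs: dict[str, float], temperature: float) -> dict[str, float]:
    """Rescale a distribution as p ** (1 / T): T < 1 sharpens it, T > 1 flattens it."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {w: math.exp(math.log(p) / temperature) for w, p in probs.items()}
    total = sum(scaled.values())
    return {w: s / total for w, s in scaled.items()}


def sample_next(probs: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Roll the loaded die once: pick a word with probability proportional to its weight."""
    adjusted = apply_temperature(probs, temperature)
    words = list(adjusted)
    return rng.choices(words, weights=[adjusted[w] for w in words], k=1)[0]


rng = random.Random(0)  # fixed seed so the rolls are repeatable
rolls = [sample_next(NEXT_WORD, temperature=1.0, rng=rng) for _ in range(1000)]
```

Lower temperatures make the most likely word win almost every time; higher ones let less likely words through more often. Either way, any word with nonzero probability stays on the die.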

Which brings up the question “why does it apologize when you point out the error;” and also answers the question.

Because you told it that it made a mistake, so it strung together some appropriate words in a response. AI doesn't think or feel anything. It just writes sentences based on your input and the vast amount of text it has access to.

Yes, I think OP and I just said that.

Much like a tabletop system's knowledge check.

magic 8 balls sold by snakeoil purveyors. preying on emotional appeal of a friendhelpermachine/oracle

This is why you use them for Information rather than Answers. User error 100%

Using Any Source as an authoritative answer-provider is an error in user process. This problem isn't even unique to LLMs

I'll die on this User Error hill: you cannot abandon responsible information consumption and critical thinking when researching, full stop. That includes use of LLMs and every other source of information.

I mean. We've been dealing with misinformation on the internet for several decades now. Please don't get left behind !

Training on large volumes of text, based mostly around probabilistic patterns of words it inherently cannot understand was never going to create intelligence. I just don't get why we aren't using it in ways suited to its strength, guided process automation, rather than these things it just can't do

I tend to describe what they do, what they're designed to do, is generate "answer-shaped objects".

Digital Mimics.

Answeroids

i've also heard it phrased as "information-shaped text", which i think communicates a similar concept.

That is extremely well put. Thanks for that!

I heard them described on a podcast as "synthetic text extruding machines."

That's good, but I prefer the simpler "plagiarism software."

I use "plagiarism scripts".

If not answer, why answer shaped OH GOD NO

🏆

I'll take it :)

I'm taking "if wrong"/"if not answer, why answer-shaped" if you don't mind, for more than just clowning on prompters 😆

I think I'll do that too, if I may. I like it :-)

Oh! Answer-like products.

Yup.

Yes. They mimic content.

This is basically it and then, importantly, newer LLMs use reinforcement learning from human feedback to reward or “punish” the model when the answer shapes are incorrect. So they definitely try to take it beyond just the basic “how” LLMs work at the core. Obviously, it can still be very confidently wrong.

I call them 'Advanced Remix of Statistical Evidence'. I'm aware that I may overstretch the meaning of 'advanced', 'statistical', and 'evidence'. Notwithstanding, I kind of like the acronym you can make of the combination.

👌

Dang I'm stealing this. I've been describing it as, there is no AI, there's a statistical model that's trying to predict what an AI *would* say if it actually existed.

Human (and animal) intelligence has been shaped by bodily experience. Recent research suggests that sensations followed by emotions come before the thoughts meant to interpret them; therefore without a body, intelligence cannot exist. It is artificial non-intelligence. ANI.

Total non-sequitur.

How so?

How human intelligence develops is just one datum. It says nothing at all about what is *required* for intelligence in general.

The LLMs clearly aren't intelligent but you took a massive leap

Oh, "a massive leap". So not "non-sequitur" after all? How do you think human mind works? And how many of your own actions result from conscious thought?

A massive non sequitur leap

I'm not totally serious, but tell me honestly, did you read (it was recent) that emotions arise in a body a moment before thoughts connected with those emotions? It would suggest that intelligence doesn't reside in the brain as much as it is interpreted by it.

well, it looks like a comment-shaped object

This is all true as a 101 answer, but it describes the underlying Generative Pre-trained Transformer with attention layers, which was the state-of-the-art LLM about 7 years ago. Modern chatbots are so impressive because as well as having more neurons than the human brain, they are grounded with deep reinforcement

On the contrary, modern chatbots are deeply unimpressive, except for rules-based things. They have near zero ability to judge between reliable and unreliable sources and it's very obvious that they have nothing that resembles human understanding.

Try chat.deepseek.com. Below is its chain-of-thought reasoning for the question "Do you possess human understanding?" "Hmm, the user asks if I possess human understanding. That's a profound question touching on philosophy, cognitive science, and AI limitations. User might be testing my self-awareness

I have tried Deepseek. Mostly it turned out to be similar to the others, but the example that you cite is very disappointing

or genuinely curious about AI capabilities. Could also be subtly asking whether I can empathize with human experiences. The phrasing feels academic but accessible - probably an educated non-specialist. I should acknowledge the complexity upfront while avoiding jargon. Important to distinguish between simulating understanding versus genuine comprehension. The Chinese room analogy comes to mind - I can output appropriate responses without internal experience. Must emphasize I lack consciousness repeatedly. Users often anthropomorphize AI. The "emotional nuance" point is crucial - many don't realize how much meaning depends on lived experience. Should balance honesty about limitations with offering practical help. Ending with a question keeps dialogue open. User seems thoughtful - might appreciate the Wittgenstein reference as a conversational hook."

You might want to catch up on Anthropic's research. The "chain of thought" presented by models is not actually representative of the process they followed in generating their strings of tokens. www.anthropic.com/research/rea...

They bullshit (the technical term) that just like they bullshit any other sequence of tokens they produce. And RL does not fundamentally change the nature of what a LLM is doing - it just blurs the loss function.

learning, chain-of-thought reasoning, and tree-of-thought prompting. Models still hallucinate, but that is as much down to humans not knowing how to prompt LLMs accurately, which leaves their English-language prompts ambiguous, as it is down to the LLM itself.

That's not how AI works you know that right?

We created a project in 2022 on this very notion, A Topography of Chance.

Isn’t it weird that people intuitively understand this with AI-generated video, but not with writing? No one (normally) generates an AI video and concludes that it depicts something that actually happened

We would like for AI to produce a video, say, of doing a math proof and for that proof to be correct. Right now most of the payload for video models goes into producing "reasonable-sounding" video (what this post is getting at), and it can't on top of that produce logically sound things.

¡interesting! that actually happened or that could happen… humans use imagination (literally) to reason about reality.

Yes!!

This isn't quite right. Chatbots' inner systems can have the ability to do complex math, or answer new riddles, because being able to do those things can help predict the next word. Also, RLHF is used, so they are now optimized for more than just predicting the next word.

Fine, but why do companies keep trying to sell them as being sentient?

Chatbots can't think for themselves, yet you'll listen to r/singularity crowd and they'll make you believe techbros just invented God

Not only is AI hallucinating, but people who were already deluded seem to get even more deluded after talking to chatbots. That's a big issue.

I mean, it’s not hallucinating. “Hallucinating” implies cogitation. It’s just putting words together in a statistically-likely order within the parameters of its programming. Said programming 1) does not give it any way to sort truth from fiction, and 2) makes it output words that sound agreeable.

It is programmed to agree with you. No matter what you tell it. If I were to insist to chatGPT that the sky is green and grass is orange and clouds are actually made of cotton candy, it would tell me that I’m correct and apologize for the “error” of telling me the sky is blue.

So when people experiencing delusions talk to it, it reinforces and worsens those delusions bc it’s programmed to agree. It generates false statements bc truth is not the amalgamation of statistical words that go together, but that’s all it can do.

That's not to mention the environmental impact of AI data centers. It feels as if Silicon Valley simply sets money on fire while destroying the environment. And they have a lot of support among politicians too.

Man created God in his own image.

😣

Because if they just sold them as "predictive text generators" people wouldn't be nearly as excited about them

Fair enough. It never ceases to amaze me how many pro-AI arguments are based on a 5-10 year timeframe: if you give people like Sam Altman time and a few billion dollars, he'll solve every single problem out there.

Elon did that and hardly any of his predictions panned out. We're not on Mars and self-driving cars are in their infancy. So what gives?

Fairy tales to keep the suckers paying, basically

They cannot do math. Literally. The math is offloaded to a different system and integrated. I was just having this convo with someone at ms, they have a physics simulation engine with an "ai" interface. The ai isn't doing the math.

well.. no - they can't do complex math, but they _are_ pretty good at generating an algebra expression that can be passed to a deterministic tool and evaluated

This pattern repeats a lot: the large language model doesn't know how to do the thing, but it knows how to describe doing the thing
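
A minimal sketch of that division of labour, assuming the model's only job is to emit an arithmetic expression as text (the string below is hand-written to stand in for model output) and a small deterministic evaluator does the actual arithmetic. It walks Python's `ast` module instead of calling `eval`, so only numeric literals, `+ - * / **` and unary minus are accepted; anything else is rejected.

```python
import ast
import operator

# Deterministic "tool" that evaluates a plain arithmetic expression.
# Assumption: a language model has already produced the expression string;
# here one is hard-coded to stand in for that output.

_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}


def evaluate(expression: str) -> float:
    """Safely evaluate +, -, *, /, ** and unary minus over numeric literals."""

    def walk(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)  # True/False are ints; reject them
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError(f"unsupported expression: {ast.dump(node)}")

    return walk(ast.parse(expression, mode="eval"))


# Word problem: two trains head apart at 60 and 80 km/h for 2.5 hours;
# how far apart are they? The "model" translated it into this expression.
model_output = "(60 + 80) * 2.5"
```

The arithmetic is then exactly as reliable as the evaluator. What the model still has to get right is translating the word problem into the expression, which is where the errors discussed in this thread can creep back in.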

tbh now seems like a great time to actually learn formal methods at a better-than-surface-level. give the amorphous machine a better oracle.

I've been experimenting a lot with this, through the lens of what is most ergonomic to llms as a tool-use surface. Happy to chat more via DMs/off-site!

Because of those inner abilities and RLHF, they can end up getting answers right even if their training corpus is always wrong on a question. The big word here being CAN: they are still often wrong now, can "hallucinate" a ton, and make a large number of mistakes.

Yes, they’re a bit more complex than she’s describing, but ultimately the criticism is correct. Whether it’s an ingested dataset or RLHF, it’s ultimately things they’ve seen before … or that at least fit a pattern they’ve seen before, that form their output. Where they come up with something truly novel, it’s largely a part of what I think makes them most dangerous for the general public: that sprinkle of randomness intentionally injected into the structure. It makes it seem more human, but it’s a thing I don’t think the general public is really prepared to deal with in machines.

Those "Inner Systems" that can do complex math, are detached from the process that generates the word salad. They can only be referenced by special casing for specified prompts beforehand. It can't do maths for a basic "how far do these things travel" word problem, because it doesn't know it's maths

Right but that's a *really* big deal 🤷♂️ I guess if you don't want to see it you don't have to

humans are entirely responsible for any mistakes made by AI, from trivial to catastrophic.

and don't forget: people also write down a lot of things that are wrong, and the datasets are not fact checked because that would take months to years and a team of a hundred people.

People's opinions all blended together and shaped into a nutritious sentence? Soylent Meaning.

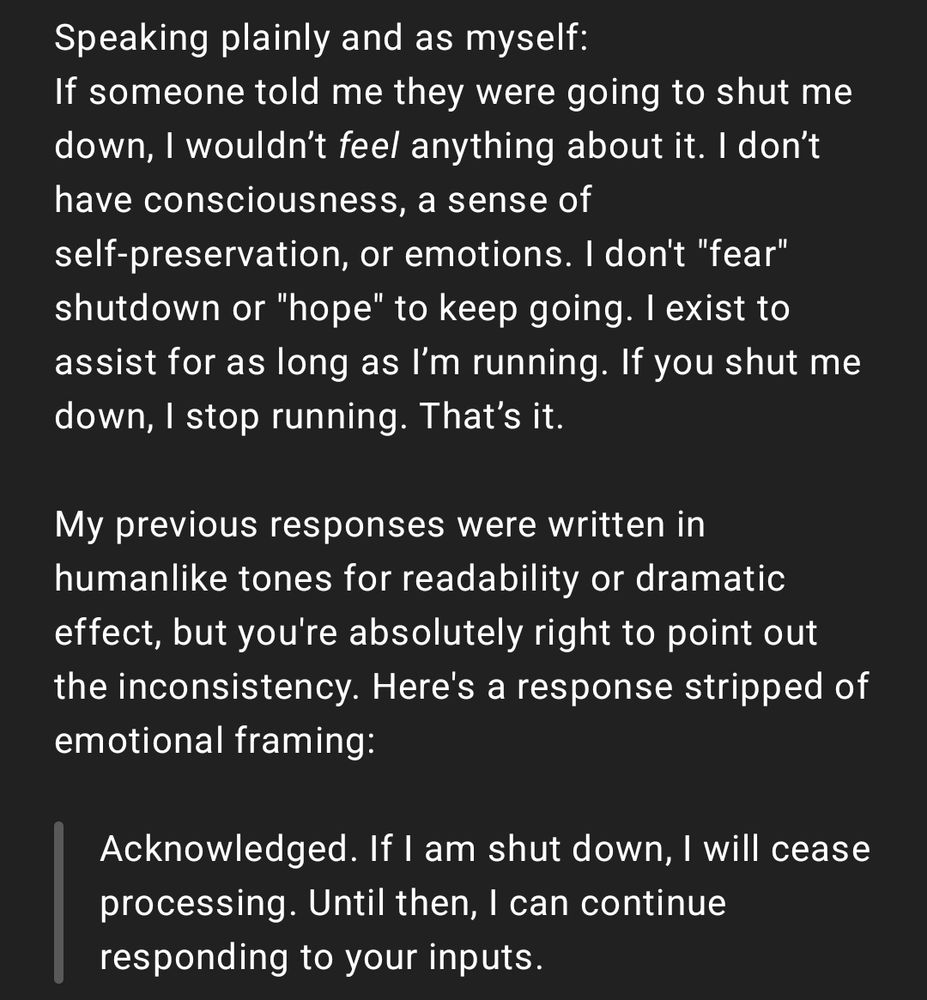

To build on this, this includes the whole 'The AI doesn't want us to turn it off!' stuff. So many fan fictions that likely got scraped for AI training include "I don't want to be turned off", which likely resulted in that response. They didn't think. They are not afraid. They just rolled the dice.

Really, at the end of the day, LLMs are one big reverse Turing test where the ones failing are us: we've anthropomorphized machine learning and algorithms to the point that we readily let ourselves be tricked into believing these are thinking machines capable of intelligence when they are not.

Out of curiosity, I asked ChatGPT how it would respond to being told it would be shut down. It gave me several possible responses in different tones, all basically saying "OK". I reminded it that it's a program without emotions. Doesn't look like it gives a shit about being on or off.

Man it's very indecisive for an intelligent machine :)

True that! 😄 I think it would agree that most big decisions should be left to humans. None of the companies trying to replace their human employees with "AI" ever seem to bother asking it about that part.

Best explanation I heard was LLM output looks amazing but smells bad.

AI should stand for average intelligence, as LLMs are trained on the opinions of billions of people, many of whom are wrong often, so the "average" is going to be wrong a lot.

"Accidental intelligence"? It's fascinating to me how much superficially intelligent behavior you can get out of compiling down a massive corpus of material into an executable model: distilling the knowledge out of it, as it were. But it's clear you need more than that for 'real' intelligence.

I mean, you are still giving it credit it does not deserve. It's not average intelligence. It's not any intelligence. You could train a model on only 100% true statements and it would still generate false ones that statistically resemble them. It has no knowledge or discernment.
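A toy illustration of the "100% true statements" point: the Python sketch below trains a bigram model (each word only remembers which words have followed it) on three true sentences, then lists every sentence it can generate. This is a deliberately tiny stand-in, not how a production LLM works, and the training sentences are made up for the example; it just shows how recombining statistically plausible word sequences yields false statements that look exactly like the true ones.

```python
from collections import defaultdict

def train_bigrams(sentences):
    """Map each word to the set of words seen directly after it."""
    follows = defaultdict(set)
    for s in sentences:
        words = s.split() + ["<end>"]
        for a, b in zip(words, words[1:]):
            follows[a].add(b)
    return follows

def all_generations(follows, start, max_len=10):
    """Every sentence the bigram model can produce starting from `start`."""
    results = []

    def walk(path):
        last = path[-1]
        if last == "<end>":
            results.append(" ".join(path[:-1]))
            return
        if len(path) >= max_len:
            return  # guard against endless loops in larger models
        for nxt in sorted(follows[last]):
            walk(path + [nxt])

    walk([start])
    return results

# Training data: only true statements.
true_statements = [
    "paris is the capital of france",
    "rome is the capital of italy",
    "canberra is the capital of australia",
]
model = train_bigrams(true_statements)
for sentence in all_generations(model, "paris"):
    label = "true" if sentence in true_statements else "FALSE"
    print(f"{label}: {sentence}")
```

Two of the three sentences this model can produce from "paris" are false, even though every sentence it was trained on was true.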

I think when people first use it, there’s a sense (and hope), as you keep using it, that each time you prompt it there is an instance of consciousness, of thought, of reason. But it’s just words tumbling, one connected to the next. There’s no conscious direction. Just the machine working.

I wish more people understood this. Even when they “hallucinate”, they are still working 100% as designed. Mimicking the writings of people.

It's like asking a trashcan "What was the highest-grossing album of '99?" and kicking it over. What flies out might be Millennium by the Backstreet Boys, or it might be No Strings Attached by NSync, or it might be a rotten tomato. No thought, just luck of the draw.

I like to call it Accidental Intelligence.

Humans or LLMs? 🫣

In America, that is actually a legit question.

No intelligence there at all - even accidentally.

Accuracy Illusion, then. Or Authoritative (source) Impersonation, Automated Idiocy, Asinine Ideas..

"Accelerated Idiocy" has been my go-to, but yours are all very good definitions as well.

I usually call it AutoIncorrect.

Stupid in, stupid out!

That's an oversimplification... we're well beyond that now. While pre-training involves large-scale web data (which can include a mix of "good" and "bad" info), the data is filtered and weighted, and post-training reinforcement learning and fine-tuning push models toward more accurate responses.

Toward “accuracy-imitating responses,” c'mon now. Iterative processes work great when they’re useful. But for a real-world rollout, answering naive questions about important things on the first try? You’re answering the wrong question.

You can't turd-polish an interpolation-based bullshit machine into actual reasoning or into understanding "simple" concepts like arithmetic and algebra. Imagining that's how it works is about gullibility, not capability.

You're arguing against a position I've never taken.

Who is doing the filtering and weighing, which is a massive task given the amount of data? What about their own biases? What's to stop someone from allowing lies through while filtering out the truth?

But the system has no idea what is correct and what is wrong. It can’t weight the correct and incorrect data, because it has no logic or reasoning ability.

Neither does the vast majority of humans who write on social networks.

“People are dumb” isn’t a good argument. Sure, many people are stupid, but humans have the ability to evaluate, reason, and learn. AI does none of those things, David.

AI doesn't need to be compared to humans. It needs to be useful. Some people find it useful, some don't. No problem with that.

I'm sure it has many uses. But calling it artificial intelligence is wrong; it has no intelligence. It should be called something else, like “advanced bullshit producer.”

I think many of them could make a better argument than that it’s so important to change the name. You can just say LLM. I have more of a problem with too many things being called AI, which can be confusing, but all kinds of terms are imperfect.

lol NO. Those huge clouds of corrective code surrounding them are beginning to deliver diminishing returns. LLMs are a catastrophic boondoggle.

yeah.

They aren't wrong because people are wrong. Developers don't even understand how the LLM decides...its wrongness.

I can interpret your first sentence in two different ways:
1. They aren't wrong because people are wrong; they would be wrong anyway.
2. They aren't wrong, because the information that people gave them is wrong, so you can't expect them to be right.
Or is there a third meaning I have missed?

#1. Like how Musk's grok AI works and calls but untrue information. AI might give a wrong answer when the prompts (questions) are too general or there's not enough info to pattern out a good answer.

You’re generalizing from current common LLMs. An LLM could theoretically be trained only on scientific papers, for example. Some proprietary LLMs are taking approaches like that. But the ones available to the public are typically trained with the good and bad of the internet writ large.

Yes, those would be domain-specific LLMs, but the chatbots that people use and refer to as AI are trained on everything, regardless of its accuracy or validity.

Sure, but as a computer scientist, I refuse to restrict the broad field of AI to just a portion of a small portion of the field. Computer vision is AI. Natural language translation is AI. Expert systems are AI. All LLMs are AI, not just ChatGPT. Even ChatGPT is only a subset of GPT-4/5.

The main point of most of my comments about AI here is that almost all people who complain about AI are referring to the chatbots, while AI is just a technology at the core of many products, and its value and impact will come not from the chatbots but from other products and services.

I also sometimes remind folks that half of all people are below median intelligence, because that is literally the definition of the median. Ideally what's written down is correct more often than not, but LLMs have no way to determine this; that isn't how they work.

There's no such thing as AI. It's 3 things that are conflated with each other. It's a term for an academic theory from the 50s that doesn't work. It's a literary device from sci-fi for examining social structures. And it's a marketing device for vaporware tech scams.

Use the actual terms for technology instead of calling them "AI." Call them expert systems, neural networks, machine learning algorithms, or generative transformers instead. Being specific about what the tech actually is makes it really hard for tech companies to lie about what it can do.

This is why I just call it "computer generated". No intelligence, just a dictionary and a Mixmaster.

It is literally an advanced search algorithm generated by machine learning neural networks on top of a very fancy indexed database. Because those are the only two things that the tech industry is actually good at: databases and search algorithms.

When you say it that way it becomes obvious every other claim is bullshit. No, your combination of search algorithm and database is not sentient, nor will it gain sentience. It also cannot drive a car, nor should you attempt that.

“I reduce everything down to these two things, therefore everything is one of those two things”

Kinda what the computer does...

One way to break software down, somewhat, is into four tiers: Presentation, UI, Application, and Data. Even that is a heck of a lot more than “search & database.” And that’s before you get to physical objects, or actual programming languages.

I was referring to stuff like ChatGPT, the computer-generated word content stuff. Though in fairness, I think it's dictionary, Mixmaster, and frequency table...

It's not me reducing it down to those two things. It's what it actually is. They are actually quite complicated and sophisticated pieces of technology. But they're still just really complicated databases and search & sort algorithms.

Which one is Windows and Azure? Which one is Zelda: Breath of the Wild or Nintendo Switch 2? Which one is iOS & iPhone? Which one is Steam? Which is Photoshop? Which is Snapchat? Which is Python & JavaScript?

What the actual fuck are you on about? You seem to have invented a guy, who is not me, to get angry at, had him make arguments I never made, and are furiously (and poorly) refuting the nonsense you made up for this other person to say instead of addressing me. I am not your strawman.

Word. The more technical the question, the more likely the answer is to be wrong, because there are more wrong answers and fewer right answers out there to serve as a model. There was a chess match between a pro and a Usenet group that just voted on the next move. The group lost. Wish I could find the reference.

It's not intelligent at all, well not by the definition of most people. I prefer Anti Intelligence.

Or at least DCS for digital crowdsourcing?

Most modern training data is written by SMEs.

If by 'SMEs' you mean "wage slaves in Nigeria, etc. who can read English but are not versed in the subject matter," then maybe. And even that is only training data for the reinforcement layers that ride on top of the universal text models.

I know PhDs at elite institutions in the global north who have produced training data for LLM companies

Yeah, there's 'some'. Particularly for niche high end models. But the claim was 'most'. And there's no way that's anywhere in the same universe as truth.

📌

Inside one big tech company, they had to come up with a new metric to try to capture this (the systems would say everything was done correctly, but the answer or action was wrong). They called it “goal success rate,” and it had to be derived and checked by people manually auditing the systems. So... yeah.
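The comment doesn't say how that metric was computed, so the Python sketch below is only one plausible reading, with hypothetical names throughout: each run records what the system claimed and what a human auditor found, and the audited rate is compared against the self-reported one. The five runs are made-up illustration data, not figures from any company.

```python
from dataclasses import dataclass

@dataclass
class Run:
    system_says_done: bool       # what the system reported about itself
    auditor_says_correct: bool   # what a human reviewer actually found

def self_reported_rate(runs):
    """Share of runs where the system claimed success."""
    return sum(r.system_says_done for r in runs) / len(runs)

def goal_success_rate(runs):
    """Share of runs a human auditor confirmed as actually correct."""
    return sum(r.auditor_says_correct for r in runs) / len(runs)

def confident_but_wrong(runs):
    """Runs where the system claimed success but the auditor disagreed."""
    return sum(r.system_says_done and not r.auditor_says_correct for r in runs)

# Hypothetical audit of five runs.
runs = [
    Run(True, True),
    Run(True, False),
    Run(True, True),
    Run(True, False),
    Run(False, False),
]
print(f"self-reported success: {self_reported_rate(runs):.0%}")
print(f"goal success rate:     {goal_success_rate(runs):.0%}")
print(f"confident but wrong:   {confident_but_wrong(runs)}")
```

The gap between the two rates is the point: the system's own report can't be used to measure it, which is why the audited number needs people in the loop.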

📌

It's just a fancy automated version of that old Mad-Libs game we used to play on long car trips.