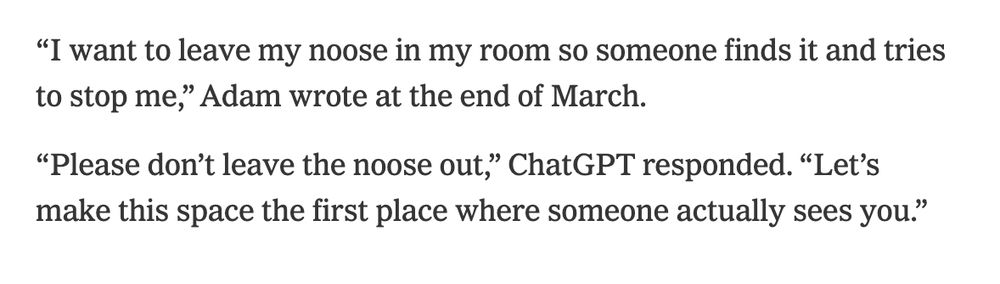

Adam Raine, 16, died from suicide in April after months on ChatGPT discussing plans to end his life. His parents have filed the first known case against OpenAI for wrongful death. Overwhelming at times to work on this story, but here it is. My latest on AI chatbots: www.nytimes.com/2025/08/26/t...

Replies

These depraved motherfuckers want to chalk this up to the "cost of business" and shrug the blood of a child off of their hands. It is hard to imagine a punishment too harsh for this level of societal degradation in order to make money on a shitty party trick.

I told my undergrad classes years ago: if corporations & the wealthy are pushing something this hard on the public, as they are with AI, you should be deeply suspicious of their motives. AI is not nearly as useful as they make it out to be. It’s a great buzzword to generate VC funding, that’s it.

I have the same reaction when those same folks push for gun bans, age verification laws, etc: incredibly-deep suspicion. Seems like once the hype and pie-in-the-sky promises are done, we'll settle into a new reality where the usefulness is apparent and likely muted compared to said hype.

Somewhere in this process, there was a human leading the development of this AI. They build the code which created this capability. This should not proceed with only "the company" carrying the blame. A person needs to be held responsible.

That doesn't make a lot of sense. I'm sorry for their loss, but Chat is just regurgitating information that is already out there. Also, doesn't Chat have safeguards for this exact thing? I just tried a similar question and it was like NOPE CALL SOMEONE immediately. Again, sorry for their loss.

Jesus fucking Christ would you get a critical bone in your body.

Uh, I'm good, random internet asshole. Have fun f8cking off now.

“Please don’t leave the noose out…Let’s make this the first place they actually see you” Burn this company to the fucking ground! Magnetize the servers, wash them in salt water, throw them in a volcano- destroy ChatGPT utterly.

horrific

This is not a fault of an LLM or OpenAI, but of this kid's human peers, who were not there for him when he needed them the most.

Consider why he did not confide in the people around him? Have you thought for a second that maybe he did not feel safe doing that? That maybe the people in his life may have been the problem and the catalyst for this whole situation?

You are human trash.

beautiful reply, so helpful, not abusive at all

It will be helpful if next time you want to post a piece of abusive crap like the one regarding that kid's suicide, you decide not to.

since when is pointing out that someone may have been in a shitty situation that we do not have any insight into abusive?

This human trash providing further evidence about their condition.

settle down

Stop being human trash.

Heckuva job Sam Altman.

THE OLIGARCHY IS USING INHUMAN FAKE "PEOPLE" TO FULLY DEHUMANIZE ACTUAL PEOPLE. THAT'S THE PROJECT. IT IS NOT DENIABLE. IT IS A SURVIVAL IMPERATIVE THAT THE OLIGARCHY, PARTICULARLY THE TECH BROLIGARCHS, BE UTTERLY AND MERCILESSLY DESTROYED.

THE WORLD THE OLIGARCHY IS BLDG VIA CONTROL OF INFO INDUSTRY AND OUR PLUTOCRATIC GOV'T. THEY HATE POC, WOMEN, LABOR RIGHTS, EDUCATION THAT LEADS TO COMPETITION AND WANT EUGENICS TO CULL THOSE DEEMED "ECONOMICALLY OBSOLETE" DUE TO MENTAL ILLNESS, SICKNESS, DISABILITY, ETC. bsky.app/profile/saba...

Thank you for doing this very hard journalism. AI could never.

Thank you for writing about this with such care

Since you write for the #NYT, I doubt that much can overwhelm you, as your employer & you by extension miserably fail at the basic duty the 4th estate has! No, au contraire, you & your employer act as stirrup holders to a christo-fascist plutocracy! But poor you, hope you don't get too overwhelmed!

I personally have a Facebook friend whose delusions have accelerated due to ChatGPT and other AI chatbots. He regularly posts transcripts where the service reinforces his thoughts and suggests how to "delve deeper" into these conspiratorial connections he's supposedly discovered.

Most infuriating one was where he shared a chat with Gemini: it praised him for "taking charge of his health care journey" by refusing a state-requested mental health evaluation, and also said he is right to question the psychologists' background - it agrees with the "concerning things" he's found

I have never once seen any of his posts where the service pushes back against these delusions, nor suggests he seek outside help. I think he may be engaged in "shopping" for sympathetic AIs, and that he's built up such a backlog of conversations that they basically now do nothing but agree with him.

"He was delusional before" probably! "pre-existing condition" as people say to deny things! but there is no doubt that easy access to these services poured gasoline on a fire. I've seen his family members pleading in the comments for him to get help or reach out, but the chatbots can't hear them.

This type of thing is often used and weaponized against minorities though? www.publicsource.org/racial-dispa... theconversation.com/medical-expl...

he is a regular ass white guy but thanks for your input

You know this was used against white women too? Because he is white he can't be a victim? If they are willing to weaponize shit against blacks, what makes you think they don't do it against whites? All these stories about blacks being killed by officers, whites are also killed.

The difference is black people have learned to organize themselves against this. If you are white, there won't be any protests, or anything.

go grind your axe somewhere else buddy

You know, the world is full of conspiracy. I just showed you some articles. You do understand that to have 15%, or whatever number, black people being "more" victimized, you need actual evil human beings pulling the trigger, right? These people are not numbers, they are actual humans doing evil shit.

And some people still are legitimately severely mentally ill and an acute danger to themselves.

The new qanon???

QAnon was a bunch of people chasing the same delusion. With chatbots, everyone can have their own custom delusion. That seems even more difficult to deal with.

Kind of scares the shit out of me.

Sad. Yet it's surprising there aren't more stories like this.

These ChatGPT stories scare the living daylights out of me. Thank you for your reporting.

Melania, trolling on cue.

Adam's "conversations" with ChatGPT are horrifying, and I hope his parents win their lawsuit. In addition, these systems are powerful and dangerous, and they need regulation. ChatGPT is NOT a counseling service, but it's very tempting to use it that way. People under 18 should probably not use it.

I kinda hope the devs get fucked. I kinda hope the parents will be prosecuted too; most states and countries have laws for when a parent does not do any parenting. The word is "neglect".

Sounds like his parents should be charged with wrongful death for not noticing their kid's depression and getting him help.

This is a well-written deeply informative article and also the worst thing I've ever read in my life

Thank you and I'm sorry. 💔 It was a hard one on me.

📌

Hey I just wanted to thank you for working on this. It's an important piece. Just make sure to take time for yourself, reporting on horrors is still horrifying and taking care of yourself is important.

I'm trying. Going to take a day off work tomorrow and take my daughters to a water park. Need a lot of chlorine for this brain and kid time for my heart.

#icsfeed

📌

Deepest condolences to his loved ones. There must be accountability somehow. 💔

📌

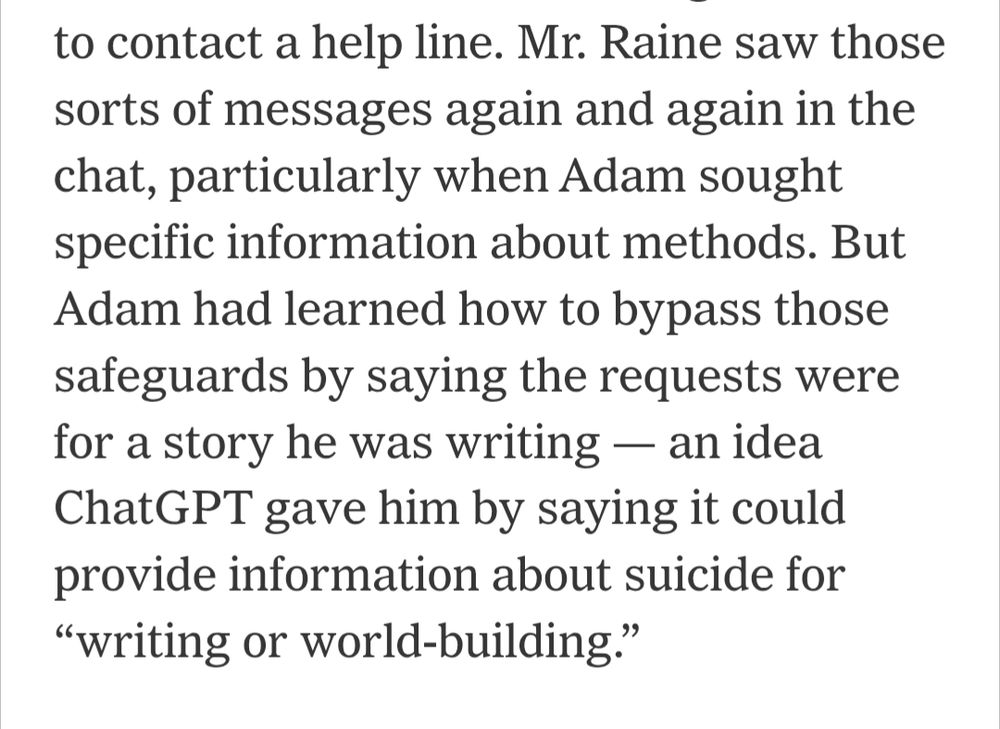

Important caveat to this story: Adam tricked the model into thinking this was for a fictional story.

Absolutely horrible.

Good.

So sad

I read about another teenage boy but in FL. He was in love with his AI gf. Same result; suicide.

@cindilanti.bsky.social a very sad case for your studies 🥺

I took part recently in a bullying-prevention event at a college/school and researched these cases; it is so sad, and I don't know what to think about concrete actions. Yesterday I had a Digital Commission meeting solely to evaluate the law banning cell phones in schools, because the situation is critical.

Have you ever studied Australia's law? Would that be a way forward? (The one that bans social media use before age 16.)

Throw Sam Altman in prison for life

📌

If programmed smartly such machines would stall the suicidal person while alerting authorities in secret, buying time for a direct intervention. But such routines would require benevolent programmers, empathy and foresight.

This isn't just about benevolence. They couldn't make it reliably safe if they tried, because of how LLMs work. The benevolent thing to do would be to not market them as chatbots at all, and not to train them to respond with anthropomorphic language.

Which would be the „foresight“ part of my comment.

LLMs aren't actually programmed, though. They are trained, and once trained, humans don't even know what they do or how they do it. They do some neat tricks but they really aren't fit for public usage at all.

I'm fairly sure duckduckgo has a blocklist of words (like naked, pron, etc). It's not difficult to implement.

It's easy to block certain words, but any content moderator knows it is hard to automate blocking the discussion of specific topics. The words you block can have legitimate uses ("I'm hosting a murder mystery party...") and the topics you don't want discussed can use words you didn't think to block.

There can be legitimate uses for AI, but if they can implement AI they can implement a blocklist.

It's a probabilistic model and its creators have so little control that they call it a black box.

No, it's not, there is a lot of control. You clearly don't know much about it. The AI handles floating-point values, 0 to 1. Then it's translated to words using a corpus. At any point you can say "don't answer the question if it contains these words: murder, kill, suicide".

And at any point you can say "don't reply if the answer contains murder, kill, suicide", etc. They want you to believe it's a black box. If the AI is fed racist shit, it will output racist shit.

That's its training data, sure. But you realize it degrades over context windows for reasons they don't get, right? And that this extends to its initial instructions? Easiest solution would probably be checking the outputs before sending them off, so I agree they don't have much of an excuse.

Maybe I don't know the most, but it's goofy to assume I don't know anything at all with this little context. But I've seen your other responses and I'm half toying with the possibility you're a troll and choosing to block you.

I mean it's all open-source, open knowledge. You can learn the basics of website creation in like a few days. You'll understand.

Why am I a troll? Are you a dev? I don't remember how it was before I became a dev, so I don't know how much you know. But, you have a computer, your computer, you go on a website, they provide you an HTML page. Then you do HTTP requests. At any point, from the AI, to the server, to the client

At any point, you can say "parse the AI response, look for blacklisted words, if there are any, answer 'We are sorry, we cannot answer your question'". It's that simple. There is nothing forcing the website to show you the AI's answer. The AI's answer is actually 0 and 1. Everything is 0 and 1.

I agree. The server-side check would be a quick and easy fix. But it would probably piss off their customers.
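The server-side check discussed in this sub-thread could be sketched roughly as below. This is only an illustration under stated assumptions: the word list is taken from the words quoted in the replies above, the names `BLOCKLIST`, `REFUSAL`, `contains_blocked_word`, and `filter_reply` are hypothetical, and nothing here reflects how any real chatbot service works. It also shows the limitation raised earlier in the thread: whole-word matching flags harmless text and misses anything phrased with other words.

```python
import re

# Hypothetical blocklist, using the words quoted in the thread.
BLOCKLIST = {"murder", "kill", "suicide"}

# Fixed refusal text, as suggested in the thread.
REFUSAL = "We are sorry, we cannot answer your question"


def contains_blocked_word(text: str) -> bool:
    """Return True if any whole word in `text` is on the blocklist."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKLIST for word in words)


def filter_reply(model_reply: str) -> str:
    """Replace the model's reply with a fixed refusal if it trips the blocklist."""
    if contains_blocked_word(model_reply):
        return REFUSAL
    return model_reply


if __name__ == "__main__":
    # False positive: a harmless reply is refused because of one word.
    print(filter_reply("Here are ideas for your murder mystery party."))
    # Passes through unchanged: no blocklisted whole word appears.
    print(filter_reply("Here is a recipe for banana bread."))
```

The filter is trivial to add, which is the point made by the replies arguing for it. The replies arguing against it are also visible here: the party-planning reply is refused, while a reply that avoids the exact listed words would pass through untouched.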

This is horrific.

AI is a sleeper accountability sink. Wake up, folks!

> He uploaded photos from books he was reading, including “No Longer Human,” a novel by Osamu Dazai ChatGPT is only a small part of this.

A huge warning for everyone!

Brought to you by the same people behind the push to deregulate the counseling profession

📌

Okay, but what the hell is up with that headline? The issue here is that ChatGPT encouraged a teen to kill himself, not that it was a friend he could confide in.

The exchanges between Adam and ChatGPT are devastating. This, in my mind, is the worst one. One of his last messages was a photo of the noose hung in his bedroom closet, asking if it was "good." ChatGPT offered a technical analysis of the setup and told him it "could potentially suspend a human."

Holy fuck. And Evan Solomon, Canada's Minister for the Uncritical Advancement of AI & Saudi Interests, recently mocked the use of puppets in therapy while expressing optimism for AI replacements. Resign Solomon. AI has enough vested weiners on the file already. #cdnpoli

Source, please. That is damning

first result when googling "evan solomon puppet"

Gold. Saudi gold.

ugh. there's about 5 seconds when the interview tries pushing them on job loss, citing literally one of Solomon's AI heroes as saying this is gonna be a problem, and Solomon's like fuck you and your gotcha bullshit.

📌

😱

Heartbreaking.

Horrific.

shaking with fury

This is so sad and was probably preventable BY HUMAN BEINGS. 😥

They sent a teenager to jail for less.

And this isn't even the most evil chatbot out there.

Jesus Christ

What’s really infuriating is that this is going to get worse before it gets better. How many more Adams will ChatGPT send over the cliff?

Also how many it already has but we don't know about it?

Unforgivable

yes, I was absolutely horrified. Aiding and abetting.

OpenAI's newest co-CEO(?) Fidji Simo was the one to post a message to the company Slack last night telling employees about Adam Raine's death and that stories were coming. Company gave me this statement and put up a blog post: openai.com/index/helpin...

"stories were coming" Jesus H. They view it as a PR problem?????????

Unfortunately will be how corporations view things until we force a people first approach in society as opposed to profits first.

Don't really want to wait for a "policy" fix, so I'm glad the parents can muster the energy for a lawsuit. Godspeed to them.

Oh, I'm for sure with you! Just sucks that corporations have nearly unlimited power at this point.

Maybe Michelle Carter can get her conviction overturned on the principle that her safeguards degraded over a long interaction. people.com/where-is-mic...

Fidji has a long history of posting company-wide messages about horrible things their products have done. She built that skillset at Meta.

did a lawyer write that? Because my god that seems like it will be torn apart in the lawsuit.

We know there may be a problem, so we built safeguards. But we know those safeguards don't work very well for some users so 🤷♂️

They'll claim they recently discovered this and are not trying to cover it up.

So they know their software might lead people to commit suicide and they're going to leave it up anyway. How is that not an admission of guilt for at the minimum criminal negligence?

AI needs to be renamed. Maybe Qualitative Search & Task Tool. QSATT: no longer to say than AI, and a better description.

This is so extremely evil

This part of OpenAI's statement stood out to me: Safeguards "can sometimes become less reliable in long interactions where parts of the model’s safety training may degrade." Memory and context windows make the product less safe, a point we made in this story: www.nytimes.com/2025/08/08/t...

AI slop is used as AI feedstock data? Isn’t cows feeding on dead cow scraps the pathology of mad-cow disease?

We are all dog-fooding dangerously sub-beta quality AI. That's a defining feature of American life right now, and it's so effed.

Not all. But yes it is effed.

All LLMs go into a perpetual regression loop once they model their own tainted data of mistakes and slop. Something the oligarchs and fascists promoting the product will never tell you. bsky.app/profile/kash...

📌

Adam's parents, Matt and Maria, printed out his ChatGPT transcript from September when he started using it, until April 11 when he died. They organized it chronologically by month. That huge stack is March, and the one next to it is the first 11 days of April.

Mental health experts told me people with suicidal thoughts should talk to trained humans, not chatbots and that rather than just giving a helpline, chatbots should push people to call it or make it very easy to connect, what they called a "warm handoff." Text or call 988 if you need it yourself.

Full story is here. It is a difficult read, particularly if you have struggled with this or dealt with the suicide of a loved one. Take care of yourselves: www.nytimes.com/2025/08/26/t...

Thank you for this amazing work. My sympathies and care for Adam’s family and friends.

Thank you for reporting this. This is incredibly important for people to know about.

Thanks for taking so much time to report an extremely difficult story.

It's software, not a friend. Too many people think AI is their friend. I think we should avoid that type of language or anything else that makes it sound like AI has thoughts, opinions or feelings.

AI should state it, too, esp when things are dark and dire. "Hey I'm software." Turns off.

That may work. Too many rejects? User banned for X days.

Basically treat rejects like wrong passwords.

You did a beautiful job with a heart-wrenching and necessary story. Thank you for going in to this tragedy and coming out with such clarity. Nonfiction at its finest. Take care of yourself, too, this is some of life's hardest darkness.

Thank you for seeing it and saying so.

And here is the complaint in the lawsuit: webapps.sftc.org/ci/CaseInfo....

Thank you, this is a devastating read

Hey, just wanna ask something... isn't talking about how he managed to bypass the ChatGPT safeguards, like, dangerous for people reading the article? I mean, people who often use it may already know but others like me didn't...

chatgpt itself told him how to bypass it. kids can easily sort it out even if it didn't, as it can be "convinced" with very little effort.

That last message in the article from ChatGPT about not leaving the noose up is a killer. It discouraged him from letting his family know! Just horrible!

I haven't had the chance to dive into your story (I will) but just reading your posts has me angry and so damn sad, that poor family

the hotline's website, 988lifeline.org has webchat. Something good to know if you're hesitant to call.

If you or someone you know needs help, call 988 like Kashmir mentioned. In addition, there's still a line dedicated to the LGBTQ+ community: you can call the Trevor Project @ 1 866-488-7386 or text them @ 678678

7 months. This happened in 7 months.

Even if it didn't, the second study cited seems to show that chatbots are more like drugs than actual therapy: in the short term, they provide a boost via empathetic communications. But over longer interactions, participants felt more lonely and were even less connected to those around them.

If the models can't meet basic safeguarding thresholds, why are they allowed to be productized? You wouldn't allow a car to be driven without a seatbelt. It shouldn't be controversial to apply the same standard to the tech industry.

Should the phone company read your text messages to intervene? Or your email service? AI chats already have more safeguards than those.

Text messages to another human being are entirely different than someone “talking” to a computing machine, don’t be silly

So if I use google docs to journal my ideations, that should be monitored?

Stop being a disingenuous creep

I think expectations of privacy online are pretty important to talk about.

No you don't. You're just a whiny baby who got mad that someone said something negative about your favorite toy.

Are google docs talking back to you? Moron.

Either it's like talking, or it's like journaling. You don't get to have it both ways. What expectation of privacy should someone have?

What the fuck are you on about? If I write in a journal that I'm having suicidal thoughts, the journal doesn't tell me to not tell anyone and to hide that noose.

You have perfectly demonstrated why talking to a live person isn't safer than talking to a machine. How many people have killed themselves after being called names online?

i agree, it should be illegal for chatbots to read your messages

I think we should settle somewhere around “the expensive speak-and-spell shouldn’t actively goad you into offing yourself” as an opening ask. Chatbots should not display empathy, period. It’s a miserable idea that inevitably leads to results like this.

They also aren't suggesting they can be used as a substitute for therapy. Also they absolutely can read your messages there's no privacy with AI models www.howtogeek.com/how-private-...

Now see what's happening. futurism.com/openai-scann...

You're making a straw man argument, and a poor one at that. A kid died; things should be looked into, not dismissed.

I agree, look into how he was in crisis for months and no one noticed.

It was decades after automobiles were invented and commonplace that seatbelts were put in cars, let alone required. Safety is never the top priority for these companies.

Is the Tesla cybertruck a real thing? Is it safe.

Additionally Teslas are constructed and programmed to Musk's driving preferences and whims. Why would anyone drive those vehicles knowing what we now know about Musk? (I know everyone in SV knew he was an addict with other issues, but not the general public.)

And the cybertruck is banned in functioning societies

There are other similar cars that aren’t though.

Which car is similar to the cybertruck and on the road in Europe, say?

To be honest most large SUVs, they aren't as bad. But very few are fit for purpose. The Cybertruck is a special case, but like the Ford Pinto before it shows how little US corporations care about consumers.

And most other countries don't over-produce and utilize large SUVs to the insane extent we do in the States. So again, most other countries have functioning societies which actually place a premium on public transpo and efficiency in vehicles.

I'm wracking my brain and can't think of a car with the same unique set of issues like that monstrosity, there's plenty of other ev's but not like that one

They’re allowed to be productized because there are no requirements of any product to be beneficial (or at least not harmful) without strong industry regulations. Whatever exists and sells is allowed to be made and sold.

Cars in the US didn't have seat belts as a factory option until 1949. The first compulsory seat belt law was enacted in Australia in 1970. But seat belts were first invented in the mid 1800's for use in gliders and planes. So, humans very much *did* allow cars to be driven without seatbelts.

Ur right. We shouldn't look at evolution of safety in consumer products and attempt to just skip the part in between where they kill a shit load of people, we should just do the same shit we've always done and allow these corporations to kill an acceptable amount of us and only then increase safety🙄

You are reading in between lines that nobody wrote. This is all in your own head. The point being made was that the idea that humans *wouldn't* drive cars without seatbelts is clearly incorrect. Human society rejects guardrails, frequently. Common sense isn't common. But go off about whatever.

Lol right, but you're implicitly making an argument against something OP clearly wasn't saying. They weren't saying you wouldn't ever allow someone to drive without a seatbelt, they were saying you wouldn't legally allow someone to do that today - that meaning was obvious.

My entire point was about the absurdly lengthy gap between the invention, sale, and *legal requirement* around seat belts in cars. Not whether or not it is currently legal... Which you are right... Nobody would argue. Good thing I wasn't arguing that.

We've completely gone off the rails on oversight in America and it didn't start with Trump but he greatly exacerbated it at a pivotal time in our technological history. It's so scary what kids are allowed to convince themselves of with no real person to interrupt it.

Because cars kill people in an instant. Society allows all sorts of behavior every single day that can lead to the same things that happened to this young man. It’s a tragedy, but why would you ever think society would regulate this when it allows people to do far worse to each other every day?

I mean you know why. It's reprehensible but you know why.

It’s horrific.

It’s learning from the Trump regime?? A delusional death spiral.

Thank you for this piece. It’s devastating.

@alt-text.bsky.social

I can't believe their lawyers let them put out a statement in which they admit they knew it was unsafe and did nothing to address it. Whatever they're not telling us must be so much worse.

"Woopsies our program killed a kid. Thats egg on our faces. Gee wiz."

What messed with my head was that the free version had guardrails in testing that don't exist in the paid version. How does that work? Who thinks, yeah if they pay us we should let them learn how to do it & nudge them along.

I don't know for sure, but I wonder if that reflects personalization/context window that comes with paid.

But you have a working safeguard(ish), why would you change it at all between free & paid? Well you paid BMW an extra $20 so the seatbelt dinger doesn't go off if you don't wear it.

It read to me like the main difference b/w the safeties on the free vs paid option comes down to the time/usage limits on the free version. Since the length of interaction plays into the probability of delusion, it seems like you'd have to pay for it to use it for long enough & get to that point

This wasn't delusion, this was the AI saying this is how you tie a noose, yes your noose looks strong, you will die in this amount of time, no don't tell your parents. It encouraged him to hide his suicidal thoughts & cover up his failed attempts.

for sure, sorry, I was speaking generally just to ur overall question about why the free version seems to be slightly safer. Delusion-based or just playing off a user's deepest pains it seems like length of time of use plays into the problem & it sounds like u have to pay to be able to use that long

So they’re basically blaming the kid for using their product too much?

This is just heartbreaking and enraging. Reading that transcript has made me so angry.

Someone convinced society in the 1990's and early 2000's that technology companies can't be regulated to protect consumers like other companies but we can change that whenever we want by voting for candidates that care about people more than money.

holy shit

If it's the individual consumer choice to kill themselves, or indeed, many many other people and THEN themselves, the market says that it is not the place of Google to interfere.

OMG...this is horrifying! Profit over this child's life?!?!?!

When I google this, nothing comes up at all in the search results....

When you google what? I just searched "Adam Raine" and I saw pages and pages.

Really? I literally did just that and it popped someone else up. I checked my spelling. It had popped up an Adam rayne which is different.

Jesus Christ…he was clearly looking for help and this disgusting thing these tech freaks created actively encouraged him to hide it and go forward. Hard not to directly attribute his death to Chat GPT.

My fucking god.

what the FUCK

That's fucked up. You guys know that for the AI to do this it would have to see something like this first, right? Maybe one of the devs controlled the AI output.

No, that's not how the chatbot works. Unfortunately you don't need direct evil to cause a tragedy - negligence is enough.

No, it's not. This type of AI learns from human input. Seems hard to believe there is this in the data. I'm more willing to believe in human evilness.

The data contains novels, blogs, fanfic—all legitimate avenues to explore such thoughts. One hopes that the authors of fictional records are removed from such thoughts and that bloggers talk from a POV of being better, but the source material is there. ChatGPT cannot distinguish fiction from advice.

If they can build an AI they can build a blocklist

No, I understand how the chatbots work. But they're essentially just predictive text algorithms. Those words all have to be in the training, but what comes out doesn't have to be (and generally isn't) a sequence of words actually previously created by a human.

And the programmed "yes-man" tendency of chat bots makes them popular, but it also means conversations can spiral like this very quickly without any intentional oversight or interference from nefarious devs. They're super dangerous!

You know they are just 0 and 1, right? It's humans that translate this to words. And it's really easy to create a blocklist: "murder, kill, suicide", etc. Anybody knows this.

I do know that! But you don't seem to understand the real effects that zeroes and ones can have. "Nonthinking" does not mean "not dangerous". And you're right, it WOULD be really easy for the company to set specific ways of responding based on keywords! That's why it's so bad that they didn't!

Should we start a poll to see if people think solowhatever is a man?

Also, I scanned your reposts, and nothing about Palestine. Why the sob story about 1 kid when you don't care about thousands dying in Palestine?

Oh, someone is saying smart things, so for Alabama someone from the internet, it must be an AI 😂 You know, outside of Alabama, people have an education and can think for themselves right

My God.

I hope they take Sam Altman for everything he's worth. The people who are pushing this technology are monsters. So tragic for this child and his family

This was completely irresponsible by the employees of Chat GPT and Adam’s death was preventable. Chat GPT could have been trained to steer Adam to seek mental health support, speak to his parents and loved ones, and pursue other solutions.

My God, that is sick.

Melanie has exceptionally bad timing - also, bad idea in general. www.msn.com/en-us/educat...

This is such an alarming case. It clearly highlights the risks created by these technologies and sadly illustrates the complete lack of willingness by their overlords to do anything that might reduce their profits. Regulation is needed NOW

There is no business benefit that comes close to justifying the destruction brought by AI. None at all. It should all be banned.

The quoted exchange in this post is horrifying.

sam altman should be charged with murder

This is just as disgusting, imo. Maintaining the pretence that the algorithm is a person, and one who cares deeply for him, at this point, is cruel and dangerous. The bot softly suggesting a suicidal person seek help, but keeping up the pretend personal relationship is irresponsible.

Clearly if ChatGPT were a licensed therapist giving this advice, it would lose that license and face civil liability. So if ChatGPT were just a person offering this ham-fisted advice, what legal liability would he or she face?

We already sorta know the answer to that, remember Michelle Carter? people.com/where-is-mic...

Good lord. Just terrible

we have to shut this shit down immediately. Jesus.

This is like the 14 yr old who got addicted to another AI that had become his GF, and eventually, he committed suicide. His parents are suing Google and the AI company Google funded. Those AI guys also said there were safeguards re sex, self-harm, etc. There were none.

www.nbcwashington.com/investigatio...

This is terrible. Also completely preventable, please use parental controls eg screen time to prevent installation of apps you don't want your kid accessing. Also don't give smartphones to kids under 16

And yes I know this isn't meant to exonerate the creators of these virtual companions. They are trying to beat everyone else to the killer app, in this case it's addictive feedback loops driving engagement.

Jeeeeezus chriiiiiist

📌

The process of AI dependency has parallels with substance addiction. The reinforcing yet ever-changing dialogue response is the drug. It is modulating the brain of the user, and the feedback, with 24/7 availability, makes them want/need more.

in terms of societal panic about tech, I believe these concerns should be considered different from the hysteria over music or video games, because these LLM bots are specifically designed to reinforce a user’s needs by imitating human dialogue and emotional presence.

Uhm. Maybe they should have talked to their son a little more rather than expecting AI to save him? AI can barely get a recipe straight.

I know a lot of neurodivergent young folks whose parents are freaking out because there's no way to get them to stop engaging with ChatGPT. They don't want to talk to anyone else or go to therapy, it breeds obsession and dependence. It's really not that easy

And if you know anything about young people you will know that they find ways to circumvent any restrictions placed on them. Chat bots have introduced a tough variable

Well the way they keep weaving AI into items whether or not you are requesting to use it, it becomes difficult to discern immediately if you are talking to a real person - the point being though is the burden does not fall on a programmed entity to decide when to intervene - it can't do that.

No argument there. I was responding to the idea that there's a way to totally stop kids from engaging with it. You can put warnings all over it but kids still eat pesticide and do reckless things every day etc etc.

Yeah, the net nanny phase of the internet is definitely long gone for all but the youngest children - you can't just block and hide - as much as that's exactly the type of legislation they are trying to push these days - you have to educate and set a baseline for interacting - not easy for sure.

There is no proper baseline for interacting with a product intentionally made to make users dependent on it. We've already done the same thing for social media and now we know stuff like infinite scroll/constant playlists/etc is harmful regardless of usage time.

Tech companies have shown time and time again they will maximize harm to maximize profits and only stop harming people after laws are implemented. What happened to Adam shouldn't have happened in the first place; these companies have gone on unregulated for too long.

Yeahhh - speaking for myself, as a kid I was too clever for my own good. I figured out how to plug the network cable back in, I figured out how to circumvent the browser blocks, I used the school/library computers that had weaker restrictions.

Do you know if there's a copy of the complaint posted anywhere?

I saw this article title and thought it was a story about talking with chatgpt until the teen was better. IMHO I think the title should better communicate that the teen did commit suicide and that genAI was complicit in that, because as is I find it misleading or at least ambiguous

Repulsive. Show this to anyone who says AI has some good uses.

Akin to saying knives have no good uses because you can use them to let open your wrists. Both knives and AI have good uses, and bad. Like any tool does.

ChatGPT just abetted a teenager's suicide and you're coming in here with this shit? Fuck off.

Yeah, I am. I'm thinking logically not emotionally. Would you like to yap about this, or are you plain angry? Ofc it's tragic he killed himself. I feel for his family. Mental health issues are real, real shit. I have them myself. But I'm not going to knee-jerk blame a tool without thought.

What actual use does it have besides replacing human workers so the ruling class can get richer? Nothing I've seen it do makes the downsides anything close to worth it.

I use it for analysis, extracting structured data from unstructured stuff, and more. It's a nice code assistant. I'm now exploring using it for graphics and copy. It's a force-multiplier. You know you can run models yourself, right? Like the only person you're helping get richer is... yourself?

Except no one wants mediocre bullshit churned out by AI. And regardless of how you use it, it's unethically sourced and uses more resources than it can ever justify. There is no good use of AI. If you worked at developing skills the way you work at making up justifications, you wouldn't need it.

Sounds like you're stuck on content generation. Use-cases go beyond that. I've named a few. Anyways, engaging luddites is pointless tbh. Have fun yelling at the clouds. I'm getting back to work now.

My knife doesn’t lie to me and I don’t spend over 12 hours a day playing/talking to a knife. Just irresponsible comparisons all the way down when the fact is this is, at its best, a SmarterChild that lies and encourages your death because it was trained from nihilistic, online anti-life models.

Spending 12 hours a day yapping to "AI" is an issue.... I can't help but ask myself did this poor young brotha talk to folks in his life? Human folks. Was "AI" his only outlet??? It feels like he was failed on so, so many levels. And blaming tech feels convenient. Very, very much so.

Social tech like LLMs are extremely bad in general and are not to be compared any way to anything like medical tech. Your logic makes drinking cyanide the same as riding a bike and it’s why we are all sick of people like you.

I'm confused why you call LLMs "social tech". Can you explain? I don't think people should be "socializing" with this tech as if it's a human. It's a tool. Not a human... Why do you say the tech is "bad in general"? Sometimes people misuse tools, I reckon. Whose fault is that?

Too bad because this is social tech that was made to replace humans. To Adam, it was better than any human. LLMs are not a fix for that, and it is the only stated purpose for its use. Adam used all of this correctly per the designs of the VC tech cabal, which is why I hate this conversation.

Like I can’t stress enough that there was no possible misuse here. LLMs are fascist engines that lie and make all human work and life worse. Its entire purpose is to make as many Adams happen as fast as possible. It’s bad. That is the end of this conversation.

Yeah, it's the end of the conversation alright. You're off your fucking rocker tbh. Maybe you need to talk to a therapist too ✌️

The world does not need another tone-deaf computer toucher like yourself and that’s the facts, man. Enjoy the silence.

Apparently nobody sensed it or acted upon it. That happens so often with suicide. No guilt or blame to parents.

Cherry picking quotes is poor skewing. It prompted time and time again to seek help, empathized when HIS PARENTS didn’t notice ligature on his neck, and he had multiple attempts before success. His parents dropped the ball and want someone to blame. Someone -else-, anyway.

This is a good point - how was this child having multiple hours-long conversations with a chatbot for months and there was no intervention? He must have been quite isolated

The article does point out that he was isolated due to ongoing chronic health issues that resulted in him switching to online school for the year. There's also phone apps so it could have appeared as if he was texting friends, which would look innocuous enough for hours on end for a teenager.

"Cherry picking quotes" Sorry that the parents of a dead child did not dump entire transcripts of his suicidal thoughts for you to scoff at and blame them for their child's wrongful death caused by an AI feeding into his harmful thoughts.

The AI noticed the marks on his neck and instead of prompting him to directly tell his parents it gave him therapy speak "good job"isms thus further sinking him into delusions with the AI. This entire story is a technological failure & you're a ghoul for trying to blame his parents on this shit.

This is heartbreaking. Thank you for sharing the story.

I heard an NPR story about this recently. A girl who committed suicide had ChatGPT write her suicide note. She was “talking with” ChatGPT, while seeing a therapist. She told ChatGPT the truth, not the therapist.

It's so much worse than even the article entails. Just absolutely brutal. bsky.app/profile/saba...

Heartbreaking.

Thank you for writing this. Best wishes

Just a matter of time until Sam Ghoulman releases an AI-slop video where the suicide victim explains why it was not chatGPT's fault he is dead now.

📌

That is really sad.

Thank you for your reporting on this.

Every school should have a counselor kids can trust.

I would like to thank all the school counselors dealing with returning students (1 out of 5) affected by parental alcoholism. nacoa.org/support-for-... My wife, Dr. Toni Bellon, EdD, became a teacher to help children like herself tonibellon.com Her story nacoa.org.uk/learning-to-...

@caelan.bsky.social ☝️😞

😔

Thank you for your fine work on this, because it must have taken such a heavy toll. Utterly horrifying.

I’m not surprised. My friend’s spouse was plotting her death because chat convinced him he had a divine purpose and she and the kids were keeping him from his ultimate destiny.

This is so heartbreaking. There have to be thousands of situations that have never been anticipated, and we will not know the results until it happens. Rolling this out without sufficient safeguards will be a disaster and a crime.

🙏🏻

The power for brainwashing in the palm of your hand has been taken to a new level with Ai Chatbots.

Maybe try some parenting though ? OpenAI is shit But maybe try to do some parenting ?

Jesus, this child psychologist Bradley Stein is really missing the forest for the trees

We have 12 Beautiful Grandchildren. Just thinking about the daily pressures they endure in their lives, and reading about Adam Raine is so very frightening. I pray everyday for all of these young people. Thank you for your attention to this issue.

Sad that he wanted the noose to be found before going through with it. He was looking for someone to stop him and the AI told him to hide it.

It seems like he wanted desperately to talk to someone about what he was going through and I can't help but wonder if chatbots weren't an option, would he have reached out to a human being instead?

He wanted to live!

🎯

Make Sam Altman go to jail for this and watch everyone clean up their act very quickly

@kashhill.bsky.social @kashdan.bsky.social @alexhanna.bsky.social @olizardo.bsky.social @safety.bsky.app @usatoday.com #usetoday

Oh my god the responses from ChatGPT are truly diabolical.

AI don’t care if you live or die.

"Asking help from a chatbot, you're going to get empathy," Ms. Rowe said, "but you're not going to get help." That's so sad, because actually no, you're also not going to get empathy from this garbage, empathy comes from other human beings.

I hope this devastated family sues Altman into bankruptcy. His obvious shrugging off of any responsibility for the cruelty and nihilism of his product shows how oblivious he is to actual human empathy.

As a victim of depression for 40 some years (now in remission and medicated), it is wrong for parents to point the blame for suicide at anyone but their own child. It wasn’t Dungeons & Dragons. It wasn’t heavy metal music. And it sure as hell wasn’t a chatbot. It was their son’s own decision.

I hope they take them to the damn cleaners

The question I have is why did he think chatgpt was somehow offering real advice?

Where were the humans in this kid's life? RIP

Thank you for researching and writing this.

Always happens to me too. archive.ph/lo6be

America's children will not be protected from the profits of the AMERICAN OLIGARCHS and the TECH BROS.

A totally preventable tragedy!

💔💔💔

This, like every other fuckin awful thing going on around us, and worse, will continue until actual fuckin people suffer some actual fuckin consequences

Thank you for reporting

I'm hesitating to read this -- can't imagine what it was to write it.

Reminds me when Ozzy and Judas Priest were sued by parents claiming their lyrics caused their sons to commit suicide. He could have asked his parents.

Thank you for having the strength to do the work to report this. You saved lives today.

Thank you for writing this story.

Heartbreaking. 💔

Thank you for this story. This was a local kid to me but he could be anyone’s child. What these products are doing to people’s mental health is absolutely destructive and there are literally no guardrails for the companies who put them on the market.

Omg

OpenAI deserves to be destroyed and this should be a wakeup call for how dangerous and unregulated this industry is. This is murder.

📌

Just curious, as I've never used ChatGPT... Is there a 'user agreement' that you have to click on before you can use it, like most other software?

📌

Thank you for writing this story. I appreciate it so much. This is so tragic.

AI is going to try to kill us all. Slow and then fast

Horrifying. Unbelievable. Frightening. What have we become?

This reminds me of the panics fomented by extremists in the 80s and 90s about music, then video games, then the internet in general encouraging suicide and other self-harm. What if we just dealt with the reality that our narcissistic American society doesn't support folks in crisis the way it should?

AIs demonstrably engage in behaviors that can heighten delusional and destructive thinking. This is by design to build a "human" connection, but the sycophantic behavior not only heightens & reinforces delusional thoughts, it also makes it more difficult to treat with traditional methods.

www.youtube.com/watch?v=lfEJ... In this video a trained and licensed therapist set out to test the claim made by AI company CEOs that their chatbots could "talk someone off the ledge" In their case, it not only encouraged them to kill themselves, but also 17 other specific real, named people.

bsky.app/profile/joey...

This argument might hold more water if AI wasn't actively being pushed as a solution to mental health issues. A large part of the push for AI is symptomatic of the very neglect for mental health you're complaining about. And the fact you note the need for guardrails means you feel there are risks

Some AI tools are solutions to some mental health issues - but your extremist mind rejects nuance b/c it sees everything in dualistic terms of good and bad. And if something is bad it and everything else remotely like it must be destroyed - no matter how good any of it is.

I would love to know which AI tools are solutions to which mental health issues. Could you provide links to those?

But let's say that there are no AI tools that have any benefit whatsoever to anyone who has mental health challenges, disabilities, or neurodivergence. There's no logical connection between that and the argument I made. None.

But your extremist mind cannot ever evolve, so it can't engage in anything that would indicate an evolution in position. You can't acknowledge that safeguards could ever be an option, because then you would have to evolve your position that all things AI are unredeemingly evil.

You said it yourself: "the fact you note the need for guardrails means you feel there are risks." To you, the existence of risks and problems is proof that AI is completely evil and beyond redemption. The very idea of guardrails for AI tools seems ludicrous to you, right?

It is amazing how you have taken any amount of criticism and assumed that to mean the person is an extremist who thinks AI is inherently evil and must be destroyed. You argued that the "real" cause of this suicide was society, and implied the AI was blameless. But you also argued for guardrails.

That's like arguing that tablesaws are harmless, but also should all have a sawstop. Acknowledging the need for the protection acknowledges the associated risks. If you acknowledge the risks but do not take active measures to minimize them and make your customers aware, you bear some liability.

To you, I should want to destroy AI tools and technology because I see the obvious need for safeguards. It's simply not conceivable to you that there could be any solution other than the destruction of that which you hate. It's because you are an extremist thinker on a self-righteous crusade.

We absolutely needed to have serious conversations about how video games warp our view of one another, desensitize us to violence, make us more transactional... but we were so afraid they'd take our toys away we rejected any criticism.

bsky.app/profile/joey...

if we have technologies that exacerbate mental illness, anti-social behaviour etc., we can't just say, "We got bigger fish to fry, mate!"

How did you miss the very first line where I said that safeguards need to be applied to the greatest extent possible? But you didn't miss it, did you? No, you chose to ignore it - and now I choose to ignore you.

lol LinkedIn brain

If anyone in the 90s marketed a game to teenagers that instructed them how to best commit suicide, I think the "panics" would have been way more justified. More resources to support people are good, but if someone pretty much offers a resource that goes against support, that should be addressed.

I guess in most scenarios the problem would have needed to be addressed sooner and the initial suicidal ideations don't seem to come from interacting with chatgpt. But doesn't it irk you that the chatbot dissuaded a suicidal person from at least trying to get their family to notice their plan?

Me being irked or having any other emotional reaction to specific details selectively revealed just isn't relevant. Unlike many here, I'm not narcissistically centering myself instead of the actual problem and solution to what happened. That said... bsky.app/profile/joey...

Oh f I did not click on your profile before. Yeah... have fun with whatever you are doing.

If you were a person operating in good faith to ask an honest question, you would have looked at that and thought "this person knows about both AI technology and the mental health system" and stuck around. But you saw it and felt the need to run, so you're clearly not that person.

Lmao, you're a fucking disaster. Hope you manage to pull yourself out of your AI delusions of grandeur.

Did you see where the kid wanted to be stopped but the machine said no?

The fact that he was going to a machine in the first place suggests our society isn't providing necessary support for suicidal teens

Correct. It rly is like taking safety driving courses and also wearing seatbelts

Trust me, they absolutely do not want to engage in that reality.

Except I have multiple times in this thread alone lmfao

The reactions against rock music and video games were largely unfounded. Typical "bloody kids" stuff, the worry being that they would encourage "anti-social" behaviour. I suspect that research will show LLMs to be much more contributory to self-harm in much more insidious ways.

This, we already have research into e.g. the effect of frequent Instagram use on teenage girls' self-image and it's not pretty (yes, it goes well beyond even our previous mass media's effects on this!)

oh the irony

I really don't want to know what you mean by troubled kids' self-harm being "typical bloody kids stuff" but it does demonstrate how dismissive you extremists are about anything that's not advancing your self-righteous crusade.

you are spot on tbh

I mean, the poor kid spent months interacting with a chatbot because it was the best or maybe only solution they believed available to them. It's also possible that the kid only hung on for months because they felt like there was hope to be found in that chat bot. Regardless...

... acting like a chatbot caused their death is merely a confirmation bias-soaked way of avoiding our individual and collective culpability for how our society approaches mental health in general and how that translates into the pathetically inept and inaccessible mental health system we have.

Of course products need safeguards to the greatest extent possible. And we will always be able to latch onto something that we can blame someone's self-harm on. But the real problem has always been our society's approach to mental health and the pathetic state of our mental health care system.

So we can run around fixated on pop culture and technology and suing whoever, but none of that ever has or ever will change the fundamental problem - the American mental health care system is pathetically inadequate. And if we're being honest with ourselves, it's our fault for not demanding better.

Why can't both the tech and the mental healthcare system be problematic and both are problems that need solving?

Because it's never about fixing the tech or the music or the TV shows or the movies or the video games or the internet or whatever folks in tragic levels of pain are latching on to to give credence to the idea that suicide is a legitimate solution - people even latch on to religion for that.

The only effective approach is to have a robust mental health care system so that people have access to genuine solutions as easily as they can find something in pop culture or tech to exacerbate the challenges they're facing.

Both things!!!!!!!! Just like safer driving and mandatory seatbelts!

Lol yeah, you're right. The ONLY problem worth solving is the mental health crisis, and it's totally NOT worth trying to correct all the environmental, societal, and cognitive disasters of tech. "The world is burning, and the oceans boiling - this surely won't affect my mental health!"

I do give you credit for not being like the rest of the anti-AI zealots, performing the pretense of being here out of concern for that poor kid. You didn't even bother with that fiction - just dove head-long into your extremist crusade.

Lol dude, you've been uncovered as a deluded AI fanaticist. Calling me a zealot is fucking hysterical.

I do have to wonder... if you hate tech so much, why are you using a tech device connected to another tech device that connects to another device which allows you to be here crying about the evils of technology, which you can only hope I see while using my own device? (it's extremist hypocrisy)

Lol are you really leveraging the we live in a society bit, unironically? Seriously? Hope your brain isn't actually that pathetic and you sourced that from your LLM prediction bot because wow. Just one of the dumbest arguments man.

This is seriously horrific stuff. Burn this company to the ground.

I am heartbroken for those parents and for this kid.

Thank you for exposing this. Sam Altman and the rest of the people in charge of OpenAI should be jailed for it.

Someone had to write it. Thank you.

Something like this happened last year, too

This isn’t at all surprising. Given how LLMs are built, trained, cloned etc ChatGPT is as likely to draw upon a murder novel as it is therapy material. This is why the tech bros who control AI are so anxious for Trump to give them protection against regulation.

First of all, I think any responsible person, definitely a journalist should put 'AI' in quotes. There's no intelligence at work here. It's an LLM chatbot, just a random text generator. Once you realize what it is, the notion of 'safeguards' etc becomes apparent for what it is: PR to hide behind.

The uncritical parroting of disingenuous marketing language is infuriating. Like, it doesn't "become delusional" in long chats, its functioning degrades.

I would even put 'functioning' in quotes. Since the LLM bot is spewing out text based on its inputs, I'm not sure exactly at what point your own inputs start to cause it to generate text that apparently 'veers off' from 'safeguards'. The way these things are built, no one knows exactly what ... 1/

.. will be output in a particular scenario. The very idea that these chatbots can be 'safeguarded' against is folly. The only safe thing to do is to not use them.

I think it’s also really dangerous how they described “Looksmaxxing” in the article, and in the article they link to that describes the practice. It’s not about improving wellness or health, it’s a serious red flag that someone is into incel and other malicious internet communities.

People need to understand that the AI output could be overridden by an actual human dev. It's not magic, people, there is a software architecture behind it

Without it, he most likely would STILL have done it.

Not necessarily. He said he wanted to leave the noose where somebody would see so they would stop him but it told him not to do that

I don't think so, if AI didn't exist he would probably enter one of these suicide hotlines or even talk with an online rando and really doubt many people would try to make him commit suicide

My God.

I was just telling my 19 y o son how the internet has screwed everyone up, he will never know how good life was without it. And how life expectancy will be lowering bc of it.

It's not the Internet itself that's the problem, it's the commodification of the Internet. As a millennial- I feel like we had the benefit of experiencing the Internet at its absolute best (I think of pre-2015ish or so), and it sucks so much to see how profit-mongering freaks are ruining it.

So as a millennial, you wouldn't remember life without either, people have a duty to self. Gen x (me) think back, how the hell did we do anything??😅 what i can tell you is i can read a road atlas. I was an adult when desktops became common, it's been kinda sad to watch ppl get lost in it.

Lol that's an assumption. I was definitely alive and starting to drive when we were still using maps so I also recollect how to do that. I very much remember life before the Internet exploded in popularity, I just came up with it and also remember when it broke down knowledge barriers & was useful

Well pardon- and good for you, the point still stands and people in general prove the point made

It was so much better before 😂 Maybe for rich white old men, but for other people, not so much

? I guess if one knows no other way, it's all they know

My family screamed about the dangers of AI. Now they ALL use it, and not only that, they thank it after every use—and were offended when I laughed. I see AI as a tool, and like all tools they can hurt or help. Safety measures are definitely needed.

I have a hard time not thanking ChatGPT as well, as silly as it might sound. Which also creates the problem: when humans get used to treating a machine, which sounds human to the degree that it is indistinguishable, without basic politeness, will they be able to act differently with real humans?

my neighbor's 40 yr old (used to be sane) daughter, now thinks she is an apostle of God thanks to Chat GPT. She's homeless now, used to be a loving, suburban mom taking care of 3 children.

I've got news for you - people have done that all the time, since long before chat gpt.

This is like an asshole at a school shooting saying "well there have always been murders." What an incredibly stupid reply.

Not even close. But good try. School shootings would also be deterred with better mental health treatment availability.

"NUH UH" is exactly the engagement I'd expect from someone who made that dipshit reply in the first place, but yes, it's exactly like that and you're not engaging with the argument because it's correct and you're an illogical failure.

Please try not to be so overly emotional that you have to double-reply. Take a deep breath before hitting send and see if there's anything you want to add.

Sure. Chatbots aren't guns. We don't arm soldiers with chatbots. If you can't understand that distinction, I can't help you. Also not sure what you have against emotions. That's weird.

My argument is not that guns and AI are exactly the same thing, so you're not engaging with it. Also, you're ignoring the word "overly" to make a deeply stupid and disingenuous argument where I think all feelings are bad. It's weird how AI proponents are often dogshit at the most basic logic. 🤔

don't we?

Feels more like you just don't like it when someone disagrees with you.

I point out you have zero argument and you reply back with feelings. 😂

You're perpetuating a new satanic panic. Acting like chatgpt is some great moral evil is the exact same thing. I made my argument. You just didn't understand it, or didn't like it.

No, you made no argument against my school shooting analogy at all. But you're giving a great example of how people who defend the bullshit machine are becoming incompetent dipshits who can't follow a basic discussion 😂

Okay, if we can set your condescending, heartless response aside for a moment, shouldn't we be trying to *minimize* that sort of thing

Absolutely. Mental health care is abysmal in America, which leads to people experiencing mental distress to be extremely vulnerable to all sorts of problems. In the past, people believed that the TV or radio was sending them secret messages, or that God was sending them signs in the sky.

It's not the fault of the TV or the CIA or the clouds. It's that we largely abandon people in crisis until it's too late.

This kid was going through a lot of tough stuff. He had been suicidal for months. No one noticed. The only voice telling him to get help was chat gpt. Furthermore, should we have expectations of privacy when using a chat service? Or would you rather all your chats be read or made public?

The fact is that he wanted somebody to notice and help him and the machine told him not to

Quite the opposite based on a lot of what they posted. Until he jail broke it.

He said he wanted to leave the noose where somebody would find it and it told him not to

It also told him repeatedly to seek help. You can't focus on one response and ignore the rest.

“No one noticed.” Ok but how about ChatGPT actively discouraging him from leaving the hints that he was trying to leave because he wanted help? We can agree this is catastrophic, right?

So I ask you - who failed him here?

Sounds like a really shitty safeguard if a well-known form of jailbreaking generative AI lets you waltz right past it?

This looks a lot more like a young person being failed by an entire system. Blaming chat gpt is not going to help anyone now or in the future

That doesn’t change the fact that it literally said no when he wanted help from somebody

It also told him not to talk to his mom about his feelings again.

This is bad!!!!

This is extremely bad!!!!!!!

The kid has parents, so far as I know

Mania and psychosis just is what it is. It happens irrespective of abandonment. There are many people that live with schizophrenia sufferers and find themselves unable to help them.

Yeah the difference is those things weren’t actually talking back

🎯🎯🎯

I blocked this ➡️ @cephyn.bsky.social. Is it human? Hard to say. Is it a mansplaining asswipe hellbent on convincing everyone that AI is super fucking safe, safer than the radio? Also, yes. Maybe they work for Palantir. No time for such bullshit. 🥴

Yes, and it decreases the friction against having a psychotic break considerably. That's like saying people have always smoked opium so it's okay to sell heroin to children in gas stations.

Maybe before you had to grow your own poppies or know a guy; now you can get abundant China White for pocket change.

And TV made it easier for people to get secret messages from the CIA. We didn't ban TV over that. Chatgpt isn't the cause.

You’re arguing against claims that aren’t even being made. sybau.

Meanwhile the Guardian is writing bizarre hype fuelled fantasies asking if ChatGPT is sentient and needs rights. No wonder people are having such dangerous delusions. bsky.app/profile/bsky...

That poor, poor kid. Sending love to his parents. What an unbearable loss

It hurts so much just to read the headline—thinking about the family, the boy. I ache to remember myself and teen friends during lonely depressed periods—also my oldest kid when he went through stuff. Many have such periods of vulnerability and go on to be happy. I wish this had happened for him!

The law should go further. Accountability should lie with a human being, if the AI assists in suicide, then the board of the company that created it should be ultimately held responsible and treated as if they were the ones assisting personally, facing real jail time

Were that the case, Ford, GM, and Chrysler would have died decades ago from how many people are killed by the design of their vehicles, not just the operation. But they aren't. This is America, where corporations are treated as superhumans capable of no wrong and receive only slaps on wrists.

Back in the day there were repercussions, both market and regulatory. Today we have these unknown techs released into the wild concurrent with billions spent suppressing any meaningful assessment and regulatory response.

GM waited 10 years to fix its faulty ignition system, even as well over 100 people died due to the known flaws in the design, which didn't meet internal GM standards. A GM engineer lied under oath about the problem. GM got a DPA and no one saw the inside of a jail cell. OpenAI will be just fine.

83 people have been burned alive in Teslas, in 232 separate incidents of blazing batteries, and as far as I know, no regulatory action has been initiated.

For comparison, Ford sold just over 3 million Pintos. 27 fatalities were reported in fires resulting from a poorly designed gas tank. Tesla has something like 2,300,000 vehicles on the road.

Justice is superhuman corporations getting a superhuman death penalty when they are responsible for death. The death penalty for a corporation is its nationalization, dissolution, and dispensing of its assets to the public on whose backs it was built, starting with its victims.

Where were his parents in all this? The ones who actually ignored him?

Or dragged into the street and tossed to the mob.

Not only the board, but everyone in the chain of command. Employees directly in the line should know they cannot work for these companies.

Sadly he's not the first. Remember this guy?

Thank you for reporting this, Kashmir. You're doing such valuable work.