I did not make those stats up (unlike genAI). Source 1: research.aimultiple.com/ai-hallucina... Source 2: ( a nyt article but the link is to a reddit copy of it bc we don't link to the times here) www.reddit.com/r/technology...

But even if something lies to you "only" 15% of the time, it's not helpful, it's not saving you time, it's not making you smarter, it's just lying to you. That's more work. If your research assistant lied to you 15% of the time you would no longer work with them.

To everyone trying to argue with me in this thread, you're not making any sense. allow me to refer you to this crucial point

"it lies only about twice that of humans." Nothing in this argument makes sense. It's AI slop defending AI slop. Incoherent.

This one came out today www.theguardian.com/world/2025/a... And there are numerous examples of AI companies actively collaborating with the Israeli government in the genocide and ICE in domestic repression. Just about every head of every major AI company has bent the knee to trump.

See, all of this. Racism is embedded in the DNA of it because racists are programming it.

Finally genAI is right about something

💀💀💀💀

doc of resources on genAI's destructiveness and uselessness for all those naysayers in your life and in these comments

Source: open.substack.com/pub/xriskolo...

🎶 I found my thrill On Bluebberry Hill🎶 — Fats GPTomino

Beer almost came outta my nose, wtactualfuck 😂😂😂

This is fine. The fact that the US stock market is propped up on the backs of 6 companies 1 of whom, NVIDIA, is propped up by the other 5 burning billions on AI is fine. No bubble. No climate crisis. No problem. (No product. no profit. no nothing except bad bad bad shit)

Aw come on, the way Dafoe whipped his light saber against Harvey Keitel was wicked pissa

😂😂😂

ty for the clarification! will read that article in a little bit

Thanks for the question!

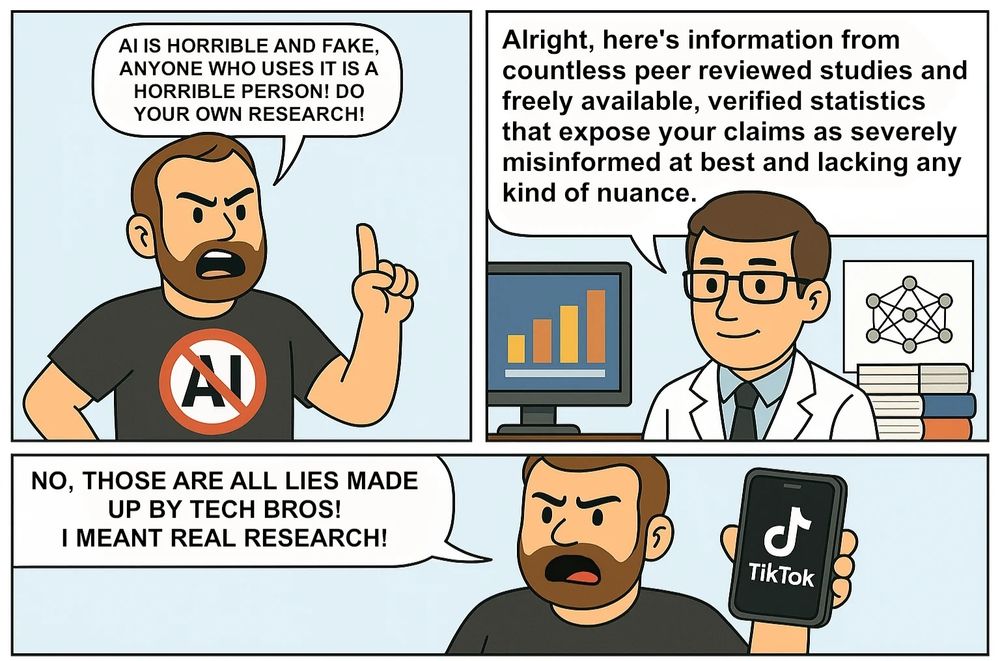

notice how often genocide supporters, ai sloppers, and other bald heads can't argue the merits of their things as they exist in reality? "the thing *could* be awesome if these 68 necessary hypothetical conditions happen first!"

i'd also add that i don't ask other humans to write things for me, so it's not a particularly strong argument anyway

Wrong. Hallucination among large language models is running at approximately _thirty percent_ across the board, and it: -- is getting worse with time ( 'model collapse' ) -- cannot be removed ( it is in the architecture )

~ 30 percent means it "lies" ( if it even knew what that meant!!! ) at more than _four times_ the rate of the population of humans, in general. Progress!!!

My gooosh what an absolutely awful argument they're trying to use

They don't have even _one_ good argument! It's all just false analogies and "well it's happening so suck it up Luddite lulz".

“It only lies (slightly more than) twice as often as humans” so you’re saying that humans are twice as reliable as the best “AI”, and using that as an argument… FOR the slop robot??? 🤨 (Not you Daniel, the other guy you’re responding to)

Got into it with this person and it ended with "at least AI doesn't murder, cause war, etc." Like what are we even doing

Lmao please

Even that’s not true. Insurance companies using it to deny medical coverage are absolutely using it to murder people.

I expect humans to lie. I don't expect human paralegals to make up fake court cases to cite as precedent when writing legal briefs. I don't expect humans writing research papers to cite fake non-existent studies as sources. That's the difference.

And if a human does do those things, they get fired.

so it's twice as unreliable as any other average online search which is in fact the baseline. an incredible endorsement from them really

The problem isn't you looking up something in a search engine. It's lawyers submitting legal briefs AI spat out that cite non-existent cases as precedent.

Or the head of the CDC releasing & making decisions based on AI generated reports that cite fake non-existent studies.

If Excel randomly subtracted instead of adding (without warning) even 1% of the time, no one would ever have used it.

Yes, having to double-check stuff to make sure it's right kills the time-saving aspect.

Heck, back when I worked retail we dreaded the sales where they'd effed up... maybe 3% of the pricing bc that was a Nightmare - we'd have to check every price or customers would be furious.

I wish I could repost this more

I saw a great quote from a court opinion that explained that AI is just a word predictor. It’s not even that good at predicting words! I had a suggested response to an email sending me a signed contract that was just, “Yum!”

i'd say 15% is in that range where something is the most dangerous - right often enough for a person's garbage instincts to start trusting it implicitly until it's too late

So it lies only about twice that of humans. Research has shown that about 7% of all human communication is a lie. Or do you believe humans never lie? Even if they didn't lie at all, how often do you think they're confidently wrong? Or cause accidents due to mistakes?

People lying 7% in general doesn’t mean that research assistants lie 7% of the time! People don’t lie equally across all types of communications.

7% of total communication means in general yes.

I think that if I fucked up payroll 15% of the time, I would be out on my ass *immediately.* Also true of pretty much any other business function.

I guess that's why so many people get fired for being bad at their job then.

"only"

So then do you feel people already lie a lot? Because yes, it's only twice the rate of a human; about 7% of total human communication is a lie.

Even assuming that 7% was consistent across all forms of human communication (it's not), embracing technology which is twice as mendacious seems really fucking stupid.

7% of total communication is 7% of total communication, that is in fact the average across all forms of communication (it is). If we break down specific areas of communication that number can be significantly higher or lower.

As for embracing the technology, you're ignoring that each major model has improved on this metric by half. If that holds for the next generation of models coming this month, that would make them either as likely or less likely to lie than a human. See how the argument falls apart?

then why do we need to compound human fallibility with AI fallibility? It would be nice if it were better than us or would correct our weaknesses. Instead it just plays into, and greatly enhances our weaknesses (e. g. psychological ones).

It generally is better than most people when given the right context.

only twice on its best day is still a bit much!

No, the chart shows the newer models on the left and the older models on the right. Imagine that, the person who posted the chart was being intentionally misleading. Also known as lying.

I didn't reference any numbers, so take your disagreement to whichever reference they gave and take it up with them directly. You didn't cite your 7%. I was merely suggesting that yes, twice that is bad.

No you said on its best day, these are all different models. You could have just Googled how often people lie and found it, I'm not trying to prove anything to you. The OP cited their statement and it was still intentionally misleading because none of you read and understood the cited data.

Considering various models have fabricated data every time i use them i have experience of it being fairly highly crap but ok. If its worst day is 15 it's still fairly terrible. And people transferring data don't generally lie, they may make mistakes. It regularly lies to me.

Technically it's every session when i sit down to use them. Eventually I either give up or get what i needed. Earlier models were actually better than now.

I completely disagree. Early models, as shown in the chart, were statistically and quantifiably much worse. I'm glad you're experimenting with AI, but you clearly want to blame it for its failure rather than take equal ownership of your partnership in that failure. AI isn't always right.

I have worked with it a fair bit and was enthusiastic as it would simplify things extensively. But I've found llms just aren't trustworthy for handling data. AIs can be great. I haven't been impressed with llms for data.

Considering various models have allowed me to build entire applications and research topics with cited materials I can verify, I have experience of it being fairly great but ok. If its worst day is 15, it's not far off from a person and you must think people are kinda terrible instead of fairly so.

15 is a lot worse than a person if a person is 7.

It is only a lot worse depending on the tolerance for error. Twice as bad doesn't mean bad. If the tolerance for error is 30%, it is still well within what is acceptable. If the tolerance for error in poop shoveling is 30% and AI has an error rate of 15% and can self correct, why would you force a human to shovel shit?

Another possibility is that you're just not good at using AI, it isn't generally intelligent and how you prompt it and what context it is given matters a lot. The same goes for a human, what you ask, how you ask and what data they have greatly matters. People will pretend to know things they don't.

That doesn't explain why it used to be better. Since it started doing this, I've had AI experts try to create prompts to solve it, and they failed. It also doesn't explain why i can eventually get it to work on occasion, but the same data and same prompt give a different answer another day.

as if you have to see something as perfect and pure and deserving of absolute authority and AI is better for that job than people could be

I think people lie predictably. They do so out of fear, or anger, or compassion. AI follows no such logic.

They don't, but in general they do believe they're white lies.

If a machine is built to make up lies & present them as truth 15% of the time, while billions are spent to market that machine as being your best way to access truth, the whole thing is a lie. AI cannot be lying only 15% of the time. That level of fraud is fraud, period.

People lie. Shall we get rid of them as useless? It's up to US to use our judgment when using tools like AI, just as it's up to US to use our judgment when listening to assertions by other people.

Turning off humans is called murder and turning off machines is called saving power

Would you keep an employee around that lies twice as much as the average human?

Only in CEO positions, going by the news.

the whole fucking point of AI is to replace human judgement with an automated patriarch the people at the top have given up on the species because it keeps refusing to give them what they want

No, that's not the point of AI. That's not why it was developed. It's entrepreneurs and greedy corporate interests that want to replace human judgment. You have to make a distinction between the technology and how people use it (or want to abuse it). They are separate issues.

It was developed by those people for the reasons I described. I wasn't saying it created itself. The technology doesn't exist at all without humans. It's always connected to them. And I don't have to follow whatever rhetorical rules/social norms you think apply here.

No, the early developers of AI did not do it for those reasons. It was developed to help humans with education and creativity back in the 1970s and 1980s. How people are implementing it now is different from the vision the original pioneers had.

I am talking about AI as a superior replacement for human judgement. If that's not what that was then it isn't relevant to this conversation.

when people lie, they do it for a reason. an associate at a law firm is not going to make up cites while writing a brief because that makes no fucking sense. the problem with AI hallucinations is that you have no idea where the lie is, so all of the output has to be validated.

Yes, exactly, all of the output has to be validated. That's part of the process.

No, if a person did that, *you would fire or stop working with that person*. If your ATM was wrong 15% of the time, you would not be so casual. If a brand of hammer broke 15% to 30% of the time you’d stop using it.

The question is why are you making an exception for a tool which not only doesn’t function like it’s supposed to, is being sold to us and its use mandated *as if it did not have those flaws*.

You are working under the assumption that tools are neutral, which they are not, particularly the ones that are marketed with lies, used by people who don’t understand them *because* of those lies, and also have these environmental effects. The thing is what it does.

Online interactive video gaming and bitcoin mining (to name only two examples) take a lot of computing resources. The environmental effects do concern me but China has demonstrated (twice) that American systems are bloated and AI can be run with far fewer resources.

bitcoin mining is also complete garbage, and online gaming uses a tiny fraction of the resources that either of the other sectors use.

People shouldn’t spend huge amounts of energy solving sudoku puzzles for fraud “money” either.

If the ATM were wrong 15% of the time I wouldn't use it for banking transactions. But I'm not using AI for banking. If an AI could make lottery, stock market, or slot machine predictions with 85% accuracy would you use it?

the affirmation machine that can't even model the present is going to model the future LMAO bridge-sellers are having a field day with this stuff

But people aren’t using it to make predictions, they are using it to find, organize and present information, *which is the thing it is bad at*. And, also, knock-on effects.

The phrasing of your own response carries its refutation.

LLMs are only the tip of the iceberg. Not even the tip, just a little chunk off to one side. AI is not an app, it's a coding approach. I don't know why so many people use LLMs as the exemplar for AI because it isn't. It just happens to be one that's easily available and thus frequently used.

It is literally what is being sold as “AI”, the magic technology. If you’re arguing for machine learning, which is actually useful in some problem domains, don’t chime in when people are talking about LLMs, which everyone talking about “AI” right now is.

If they are talking about LLMs then they should be specific about it because LLMs in no way exemplify the whole field of AI. It makes no sense to "define" AI as LLMs, that's like making assertions about the universe by talking only about the moon.

But you're not using AI for making lottery, stock market, or slot machine predictions.

The point I was making is that some tools need to be accurate to be useful (rulers, ATMs, clocks) but not all tools need to be 100% accurate to be useful. A tool that could predict the stock market or winning lottery numbers, or even some aspects of weather, with 85% accuracy would be useful.

AI is not one of those tools. In fact it is the opposite. If it is not accurate, much like a ruler, it is actively harmful.

Once again, that's a people problem. Unrealistic expectation and lack of education.

“AI” cannot and will never be able to do that.

“If unicorns lived in your shoes and could grant wishes, would you buy more shoes?” Is how you sound rn.

that’s fucking stupid. if an AI produces something and I have to check everything it did, that saves me no time.

Checking takes less time than gathering, organizing, and checking (since everything needs checking anyway).

no, not everything needs checking. a calculator that is wrong 15% of the time would be useless. If I am preparing a document and 15% of the cites are completely made up, that most likely changes the entire fabric of what I’m writing. And to be wrong 15% of the time it lights forests on fire.

That doesn't mean everything that's wrong 15% of the time is useless. I don't know why you think something has to be perfect to be useful. A narrow windy gravel road full of potholes isn't much good for regular cars or wheelchairs, but it's still useful for getting places you couldn't go otherwise.

to be clear, though, I don’t think LLMs are useless. they are fancy autocomplete. I do think they are not worth feeding human creative products into an IP woodchipper for, nor are they worth the amounts of money, silicon, and water they are consuming at present.

Get back to me when people are spending billions of dollars to build narrow windy gravel roads and touting them as the future of computing.

When the settlers first came here, a narrow windy gravel road was a big step forward. AI will improve. Some will benefit, some will never learn to use it effectively, but just as roads improved, AI will too. It's not going away so we'd better learn to steer it in positive directions.

It’s twice as bad as the average of all human communication. Things do not have to be perfect to be useful. They DO have to be better than doing without them to be useful. “AI” is worse at everything than humans are, ergo, it is not useful.

You have to gather the information to validate the AI output, so you’re doing double work! If I’ve gathered and organized the information, then I don’t have to check it because I already know it’s correct!!

Double work isn't always a bad thing. We find things in one way and the AI finds it in another. Put them together and the whole may be better because of the different parts. I always check my work anyway, even if I gathered it myself. I tell people if you don't think AI is useful, don't use it.

…this is pointless. If you are gonna sit here and say “well actually it’s a good thing if it causes double the work” then I’m truly at a loss for words. ChatGPT, please draw me “Robert Downey jr rolling his eyes in the studio ghibli style”

Yes, sometimes it's a good thing to have input from another source. That doesn't automatically mean twice the work. There is overlap between what the human gathers and what the AI gathers. [ChatGPT doesn't know how to draw. It passes the request to Dall-E which is a different AI that draws it.]

I mean, in my experience the people using LLMs aren’t validating. *I* am. They’re “saving time” by wasting mine. The close editing required is more time consuming and harder than writing something on your own. Which, of course, is why they don’t do it.

I wouldn't be surprised if those using LLMs without validating are the same ones who don't know how to write or do research in the first place. I've met far too many people who think AI apps are "magic" and believe not only what they tell them but assume they are omniscient and infallible.

Sure, that’s probably true. However, it is the LLMs making references to non-existent papers and jumbling numbers. These are mistakes different from human mistakes that cause wider problems.

I don't "play" with AI. I don't even use LLMs that much. I'm a software developer. I see this from a historical perspective. The software is primitive compared to what it will be in five years. It's also not going away. People need to understand it better so it doesn't end up running them.

“AI” is a toy. It is not useful nor time-saving, it’s just shiny and entertaining enough to foolish people that they mistakenly think they’re “saving time”, even though they are, on average, 20% slower at tasks when using it.

I’m a historian with a PhD and twenty years of experience in teaching and research as a professor. You are making some big assumptions about what software can do in the future based on a flawed premise about how technology develops over time. Sure there might be more sophisticated software in

Five years. But there also might not be enough difference to justify that kind of software in economic terms. Another perspective is that the productivity gains made by first hardware and software over the past thirty years have reached a Pareto limit. All the easy gains have been achieved, more

incremental gains in productivity have become more expensive. At some point the value is not going to be there and the next technological revolution is going to happen in some completely different field. For now the TechBros have unlimited stacks of money they can set on fire to make billions.

I also have degrees up the wazoo (too many) and years of experience in the software field. The AI we have now is nascent. I'll bet you lunch on it and I'm not talking about LLMs specifically, that's only one small specific application of AI, I'm talking about the field in general.

Nothing grows forever. Everything regresses to the mean sooner or later. It’s the law of thermodynamics.

Yes, eventually, but I don't think we're there yet. There are so many AI applications in the sciences that are in progress or waiting to be coded.

Jfc this is a stupid argument lmao

How would you say it?

Seems apt to have to explain to an AI defender the difference between sentient beings and a language model

I don't see bots and humans as equivalent at all (not even close). I was challenging the previous post's concept of what is useful and what is not useful. The point was that a tool doesn't have to be 100% correct to be useful as long as the user is aware of its limitations.

AI isn’t useful enough to justify all the havoc it’s causing is the fucking point

It's helping in the science fields. It's being used for image analysis, simulations and modeling, and for filtering data.

That’s certainly the PR spin. In the meantime it’s eroding truth, history, art, the environment, human intelligence, etc. Sorry but we’re not in a context where its few maybe beneficial use cases matter and I honestly see it as bad faith to bring them up in the face of all the harm it does

I don't mean to make light of what you said but when I read this I thought for a moment you were talking about our current government. "In the meantime it’s eroding truth, history, art, the environment, human intelligence, etc."

Lmao lol hahhahahahaha

Eat dirt

Sure Bob lies about his reports but only 1/6th of the time, and he's stealing from the company and he puts fish in the microwave but don't you think firing him is drastic?

My point was that AI is a tool. It's up to the human to choose the right tool and to understand what it's good for and what it's not good for. Early automobiles and early airplanes had many limitations, but they improved (just as AI is improving) and even then they were useful in the right hands.

and you thought you could convey the point that "AI is a tool" by comparing AI to people? if I assume you know what you're talking about, I would say you're dehumanizing people and treat them as tools.

I can tell you from having been in the workplace a long time that people lie in reports a lot more than 1/6th of the time and even if they're not lying a lot of them are incompetent (hired because they're a relative of the boss or... whatever). Early airplanes were not perfect, but they improved.

Who cares if he lies? Everyone lies. I'm lying right now. The important thing is Bob can get better. How do I know? Because 100 yrs ago Nancy sucked but she got better.

If any one person consistently lied to you while insisting they were correct as much as AI then you would stop listening to them

An LLM isn't a person. It's a software app coded by people. All software has bugs and limitations. I wouldn't have the same expectations of a software app as I do of people. What we need is education so people have reasonable expectations instead of thinking it's magic (which some of them do).

You are the one who compared AI to a person. I hope you realize how ridiculous of a comparison that was now.

The operative concept was usefulness versus what was not useful. It was an analogy. I don't consider AI apps and people to be equivalent.

People are people. Computers are tools. If a tool is unreliable you don't use it because it makes your job harder.

Whether it's unreliable depends how you use it. A hammer is great for inserting nails, not so good at inserting screws. If people assume they can use AI without understanding its strengths and weaknesses, it's not much different from assuming they can fly a plane without knowing how.

Silly. It's just a hammer that misses the nail a high percentage of the time. If your point is "it's not useless, it's just useless for all the things people are/are being encouraged to use it for," then sure, that's a distinction without a difference.

Jfc. Beyond the fact that if someone I knew lied even 15% of the time I would probably cease to associate with them, my understanding is that the whole point of AI is to surrender your judgement for the sake of convenience

I have no idea why people want to do that but they do. I hate to say this, but people are lazy and are very quick to abdicate thinking and sit back and push buttons. I know this because I tutor computer literacy in my free time. The problem isn't AI, it's a useful tool, the problem is humans.

The tech billionaires have given in to the sunk cost fallacy. They put so much into this crap they are trying to force it on us even if it means killing us to keep themselves from going broke and no longer affording their extravagant lifestyle.

Along with the money, it's also ego: they love to think they're visionaries and their critics are the horse-drawn carriage makers scoffing cars will never catch on. Being massively wrong? They can't accept that.

Let this be their undoing.

they also made some very big and very stupid promises to shareholders which is why they have to double down forever on it

Yes: this is why they bought the US government. It's a bargain, relative to their sunk costs & it gives them the means to force onto us their 💩 product no one wants. Same deal with the crypto bros who financed Trump. Social Security will likely be a bunch of AIs & your "check" will be DOGECoin.

This is all why I’m unconvinced the “AI revolution” is actually happening. It’s very hard to create a mass change in behavior and it’s not going to happen when the tools don’t do what they say they can do.

📌

Even calling it a hallucination is spin. It’s wrong, inaccurate, crap.

When Reddit is your main source, you know you're on the losing side, lol.

if you really think linking to a reddit copy of a nyt article = using reddit as a main source i can see why you're so enthusiastic about AI.

Oh my bad, not a Reddit post, but an opinion article. Big difference, lol.

Is this an attempt on your part to prove that good ol' human brains can hallucinate factually wrong information about sources (in this case misattributing a news article by two Times reporters as being an opinion article) just as well as artificial intelligence can?

Yeah, you caught me, this has all been an elaborate art exhibition, lol. But sure, let's humor you and say that these non-opinion journalists are correct and AI is hallucinating more. What makes you think that'll stick? AI is currently the worst it's ever going to be again.

" AI is currently the worst it's ever going to be again." [citation needed]

Are you under the impression that AI is not going to keep improving? What's the alternative?

are you fucking serious?

Are you under the impression that every single aspect of human society is inevitably destined to improve, and that no alternative to this is conceivable? Because *just kind of gestures around at all of it*

When it comes to technology? Hell yeah.

Uhm? So far it's become more powerful and better at stealing and emulating styles etc, but that's not = improvement, is it? Or do you consider the avalanche of shite* it produces and the gross environmental hazard a step in the right direction? *= ugly, uninspired, sloppy copies, made up 'facts'...

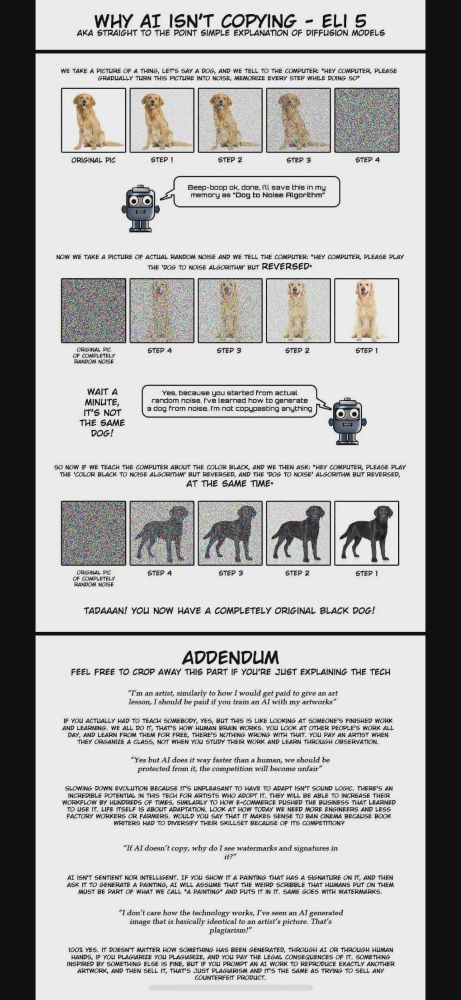

The fact that you call it "stealing" proves you don't actually know how it works.

If you believe that AI doesn't steal, copy and emulate creative human beings' work then you and I cannot have a discussion, because that means we live in alternate realities.

it only keeps improving (this was last sunday, but at least, thankfully, this is "the worst it's ever going to be again")

Oh no, it made a mistake! That must mean it will always make mistakes and the technology behind it will never improve! Lol

eh he can just post a meme about it.

Did AI make that cartoon?

Did it? I dunno. I thought you could "always tell," lol.

I mean, it's AI bad? So it's either an insult to whoever made it or it's AI? Humans do make bad things, I'll easily admit that

it's great for perfect rational actors in a perfect hypothetical universe and if you disagree then you're the one stopping that from being the universe we live in 😭

AIs don't have to be trained on copyrighted materials. Some commercial ventures choose to do it. Greed and competitiveness are two reasons why this happens. I don't agree with using other people's work, but it's wrong to assume the technology inherently requires it. It doesn't.

Ai is a tool. It doesn't matter if it hallucinates, just as it doesn't matter if the tires or electrical system, or oil in a car needs maintenance. Cars as imperfect as they are, are still useful. The problem is PEOPLE having unrealistic expectations and not doing their due diligence.

Hallucinations aren't the same as cars needing new brake pads from time to time. They're like if you step on the brake pedal and the car accelerates instead. I've seen AI tell people that poisonous plants are safe to eat multiple times.

What this tells me is that you don't know what these models are. If you do, then you're cherry-picking the data. Yes, AI will hallucinate. But this is not how you measure that. This is like saying "Shoes are bad because 95% of them either don't fit or don't last," without accounting for size or use case.

I'm not trying to defend AI. I have plenty of issues with it. But I do think it's important to be accurate in an assessment. For example, AI models are often specialized. The general AI models are the worst (like ChatGPT). But there are non-general models which have extremely high accuracy.

I get that you're not defending it, but if there are models that hallucinate 15% of the time and that's the low end and there are models that hallucinate 80% of the time then that's the spread, I dunno how else you want to cut it.

Because it depends on what you're asking it. That's the reason it's about knowing how to use it. For example, some models don't make up anything and simply do consolidated searches for you. It's still on you to validate the results. I'm forced to use it often for research so I learned how.

Another way to look at it is to ask it for information that's common. All of them should have no hallucinations for basic things like, "is the planet flat?" But there are generalized searches people make where some AI has either been isolated from the truth or trained (purposefully) to be wrong.

That's called "guardrails". And all the models you can use without your own hardware are using lots and lots of guardrails. They do this to avoid getting sued by the government. Political narratives are the biggest reason AI can get so much wrong.

You're really not explaining this in a way that makes it any better but since you're not defending ai I guess that doesn't matter (not being sarcastic)

What I'm pointing out is that it's not AI that is the problem. It's humans either corrupting the info in the models or humans not using AI correctly. Nobody is complaining about hammers, but if I give a classroom full of 1st graders hammers, we call would agree that's going to end badly, right?

That's where we disagree.

Not that we shouldn't give hammers to kids lol but that humans are the problem not AI.

Guns are also tools. This is why we need gun control in the US & why other countries have already figured out it’s not worth it. You can use it to feed yourself, but it’s being loaded with hollow point bullets & it’s unregulated. As long as we’re using metaphors. Tools are what they are used to do.

Gun control opinions aside, yes, this is the point. Not everyone needs every tool. But if you're going to use a tool, you should know how to use it or go in knowing you're still learning it and not blame the tool if you can't use it right.

Are you trying to say AI only hallucinates when people "use it wrong" or am I misunderstanding?

No. I'm trying to say that the likelihood of a hallucination greatly increases when a user doesn't know how to use the AI. Thinking like a software engineer has, in my experience, brought better results. This makes sense when you consider that software engineers are very specific in wording things.

So you were just lying earlier when you said you weren't defending AI. Thanks for wasting our time here.

It's still not a defense of AI any more than a defense of hammers. You're conflating "AI hallucinates because users don't know what they're doing" with "AI doesn't hallucinate". My point isn't a defense but keeping the record straight.

We *could all agree...

And that's my point. Right tool for the job or you're going to botch the job.